4.1: Retrieval

- Last updated

- Save as PDF

- Page ID

- 81913

Broadly speaking, there are two ways of searching a corpus for a particular linguistic phenomenon: manually (i.e., by reading the texts contained in it, noting down each instance of the phenomenon in question) or automatically (i.e., by using a computer program to run a query on a machine-readable version of the texts). As discussed in Chapter 2, there may be cases where there is no readily apparent alternative to a fully manual search, and we will come back to such cases below.

However, as also discussed in Chapter 2, software-aided queries are the default in modern corpus linguistics, and so we take these as a starting point of our discussion.

4.1.1 Corpus queries

There is a range of more or less specialized commercial and non-commercial concordancing programs designed specifically for corpus linguistic research, and there are many other software packages that may be repurposed to the task of searching text corpora even though they are not specifically designed for corpus-linguistic research. Finally, there are scripting languages like Perl, Python and R, with a learning curve that is not forbiddingly steep, that can be used to write programs capable of searching text corpora (ranging from very simple two-liners to very complex solutions). Which of these solutions are available to you and suitable to your research project is not for me to say, so the following discussion will largely abstract away from such specifics.

The power of software-aided searches depends on two things: first, on the annotation contained in the corpus itself and second, on the pattern-matching capacities of the software used to access them. In the simplest case (which we assumed to hold in the examples discussed in the previous chapter), a corpus will contain plain text in a standard orthography and the software will be able to find passages matching a specific string of characters. Essentially, this is something every word processor is capable of.

Most concordancing programs can do more than this, however. For example, they typically allow the researcher to formulate queries that match not just one string, but a class of strings. One fairly standardized way of achieving this is by using so-called regular expressions – strings that may contain not just simple characters, but also symbols referring to classes of characters or symbols affecting the interpretation of characters. For example, the lexeme sidewalk, has (at least) six possible orthographic representations: sidewalk, side-walk, Sidewalk, Side-walk, sidewalks, side-walks, Sidewalks and Side-walks (in older texts, it is sometimes spelled as two separate words, which means that we have to add at least side walk, side walks, Side walk and Side walks when investigating such texts). In order to retrieve all occurrences of the lexeme, we could perform a separate query for each of these strings, but I actually queried the string in (1a); a second example of regular expressions is (1b), which represents one way of searching for all inflected forms and spelling variants of the verb synthesize (as long as they are in lower case):

(1) a. [Ss]ide[- ]?walks?

b. synthesi[sz]e?[ds]?(ing)?

Any group of characters in square brackets is treated as a class, which means that any one of them will be treated as a match, and the question mark means “zero or one of the preceding characters”. This means that the pattern in (1a) will match an upper- or lower-case S, followed by i, d, and e, followed by zero or one occurrence of a hyphen or a blank space, followed by w, a, l, and k, followed by zero or one occurrence of s. This matches all the variants of the word. For (1b), the [sz] ensures that both the British spelling (with an s) and the American spelling (with a z) are found. The question mark after e ensures that both the forms with an e (synthesize, synthesizes, synthesized) and that without one (synthesizing) are matched. Next, the string matches zero to one occurrence of a d or an s followed by zero or one occurrence of the string ing (because it is enclosed in parentheses, it is treated as a unit for the purposes of the following question mark.

Regular expressions allow us to formulate the kind of complex and abstract queries that we are likely to need when searching for words (rather than individual word forms) and even more so when searching for more complex expressions. But even the simple example in (1) demonstrates a problem with such queries: they quickly overgeneralize. The pattern would also, for example, match some non-existing forms, like synthesizding, and, more crucially, it will match existing forms that we may not want to include in our search results, like the noun synthesis (see further Section 4.1.2).

The benefits of being able to define complex queries become even more obvious if our corpus contains annotations in addition to the original text, as discussed in Section 2.1.4 of Chapter 2. If the corpus contains part-of-speech tags, for example, this will allow us to search (within limits) for grammatical structures. For example, assume that there is a part-of-speech tag attached to the end of every word by an underscore (as in the BROWN corpus, see Figure 2.4 in Chapter 2) and that the tags are as shown in (2) (following the sometimes rather nontransparent BROWN naming conventions). We could then search for prepositional phrases using a pattern like the one in (3):

(2) preposition _IN

articles _AT

adjectives _JJ (uninflected)

_JJR (comparative)

_JJT (superlative)

nouns _NN (common singular nouns)

_NNS (common plural nouns)

_NN$ (common nouns with possessive clitic)

_NP (proper names)

_NP$ (proper nouns with possessive clitic)

(3) \S+_IN (\S+_AT)? (\S+_JJ[RT]?)* (\S+_N[PN][S$]?)+

1 2 3 4

An asterisk means “zero or more”, a plus means “one or more”, and \S means “any non-whitespace character”, the meaning of the other symbols is as before. The pattern in (3) matches the following sequence:

- any word (i.e., sequence of non-whitespace characters) tagged as a preposition, followed by

- zero or one occurrence of a word tagged as an article that is preceded by a whitespace, followed by

- zero or more occurrences of a word tagged as an adjective (again preceded by a whitespace), including comparatives and superlatives – note that the JJ tag may be followed by zero or one occurrence of a T or an R), followed by

- one or more words (again, preceded by a whitespace) that are tagged as a noun or proper name (note the square bracket containing the N for common nouns and the P for proper nouns), including plural forms and possessive forms (note that NN or NP tags may be followed by zero or one occurrence of an S or a $).

The query in (3) makes use of the annotation in the corpus (in this case, the part-of-speech tagging), but it does so in a somewhat cumbersome way by treating word forms and the tags attached to them as strings. As shown in Figure 2.5 in Chapter 2, corpora often contain multiple annotations for each word form – part of speech, lemma, in some cases even grammatical structure. Some concordance programs, such as the widely-used open-source Corpus Workbench (including its web-based version CQPweb) (cf. Evert & Hardie 2011) or the Sketch Engine and its open-source variant NoSketch engine (cf. Kilgarriff et al. 2014) are able to “understand” the structure of such annotations and offer a query syntax that allows the researcher to refer to this structure directly.

The two programs just mentioned share a query syntax called CQP (for “Corpus Query Processor”) in the Corpus Workbench and CQL (for “Corpus Query Language”) in the (No)Sketch Engine. This syntax is very powerful, allowing us to query the corpus for tokens or sequences of tokens at any level of annotation. It is also very transparent: each token is represented as a value-attribute pair in square brackets, as shown in (4):

(4) [attribute="value"]

The attribute refers to the level of annotation (e.g. word, pos, lemma or whatever else the makers of a corpus have called their annotations), the value refers to what we are looking for. For example, a query for the different forms of the word synthesize (cf. (1) above) would look as shown in (5a), or, if the corpus contains information about the lemma of each word form, as shown in (5b), and the query for PPs in (3) would look as shown in (5c):

(5) a. [word="synthesi[sz]e?[ds]?(ing)?"]

b. [lemma="synthesize"]

c. [pos="IN"] [pos="AT"]? [pos="JJ[RT]"] [pos="N[PN][S$]?"]+

As you can see, we can use regular expressions inside the values for the attributes, and we can use the asterisk, question mark and plus outside the token to indicate that the query should match “zero or more”, “zero or one” and “one or more” tokens with the specified properties. Note that CQP syntax is case sensitive, so for example (5a) would only return hits that are in lower case. If we want the query to be case-insensitive, we have to attach %c to the relevant values.

We can also combine two or more attribute-value pairs inside a pair of square brackets to search for tokens satisfying particular conditions at different levels of annotation. For example, (6a) will find all instances of the word form walk tagged as a verb, while (6b) will find all instances tagged as a noun. We can also address different levels of annotation at different positions in a query. For example, (6c) will find all instances of the word form walk followed by a word tagged as a preposition, and (6d) corresponds to the query ⟨ through the NOUN of POSS.PRON car ⟩ mentioned in Section 3.1.1 of Chapter 3 (note the %c that makes all queries for words case-insensitive):

(6) a. [word="walk"%c & pos="VB"]

b. [word="walk"%c & pos="NN"]

c. [word="walk"%c] [pos="IN"]

d. [word="through"%c] [word="the"%c] [pos="NNS?"] [word="of"%c] [pos="PP$"] [word="car"%c]

This query syntax is so transparent and widely used that we will treat it as a standard in the remainder of the book and use it to describe queries. This is obviously useful if you are using one of the systems mentioned above, but if not, the transparency of the syntax should allow you to translate the query into whatever possibilities your concordancer offers you. When talking about a query in a particular corpus, I will use the annotation (e.g., the part-of-speech tags) used in that corpus, when talking about queries in general, I will use generic values like noun or prep., shown in lower case to indicate that they do not correspond to a particular corpus annotation.

Of course, even the most powerful query syntax can only work with what is there. Retrieving instances of phrasal categories based on part-of-speech annotation is only possible to a certain extent: even a complex query like that in (5c) or in (3) will not return all prepositional phrases. These queries will not, for example, match cases where the adjective is preceded by one or more quantifiers (tagged _QT in the BROWN corpus), adverbs (tagged _RB), or combinations of the two. It will also not return cases with pronouns instead of nouns. These and other issues can be fixed by augmenting the query accordingly, although the increasing complexity will bring problems of its own, to which we will return in the next subsection.

Other problems are impossible to fix; for example, if the noun phrase inside the PP contains another PP, the pattern will not recognize it as belonging to the NP but will treat it as a new match and there is nothing we can do about this, since there is no difference between the sequence of POS tags in a structures like (7a), where the PP off the kitchen is a complement of the noun room and as such is part of the NP inside the first PP, and (7b), where the PP at a party is an adjunct of the verb standing and as such is not part of the NP preceding it:

(7) a. A mill stands in a room off the kitchen. (BROWN F04)

b. He remembered the first time he saw her, standing across the room at a party. (BROWN P28)

In order to distinguish these cases in a query, we need a corpus annotated not just for parts of speech but also for syntactic structure (sometimes referred to as a treebank), like the SUSANNE corpus briefly discussed in Section 2.1.4 of Chapter 2 above.

4.1.2 Precision and recall

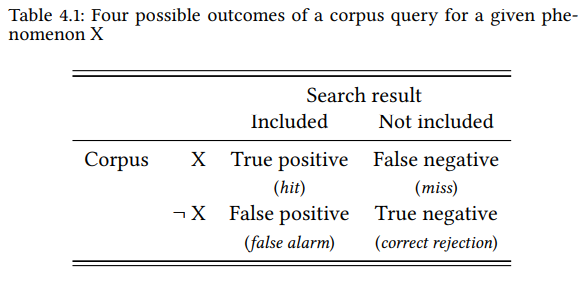

In arriving at the definition of corpus linguistics adopted in this book, we stressed the need to investigate linguistic phenomena exhaustively, which we took to mean “taking into account all examples of the phenomenon in question” (cf. Chapter 2). In order to take into account all examples of a phenomenon, we have to retrieve them first. However, as we saw in the preceding section and in Chapter 3, it is not always possible to define a corpus query in a way that will retrieve all and only the occurrences of a particular phenomenon. Instead, a query can have four possible outcomes: it may

- include hits that are instances of the phenomenon we are looking for (these are referred to as a true positives or hits, but note that we are using the word hit in a broader sense to mean “anything returned as a result of a query”);

- include hits that are not instances of our phenomenon (these are referred to as a false positives);

- fail to include instances of our phenomenon (these are referred to as a false negatives or misses); or

- fail to include strings that are not instances of our phenomenon (this is referred to as a true negative).

Table 4.1 summarizes these outcomes (¬ stands for “not”).

Obviously, the first case (true positive) and the fourth case (true negative) are desirable outcomes: we want our search results to include all instances of the phenomenon under investigation and exclude everything that is not such an instance. The second case (false negative) and the third case (false positive) are undesirable outcomes: we do not want our query to miss instances of the phenomenon in question and we do not want our search results to incorrectly include strings that are not instances of it.

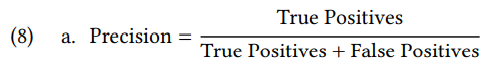

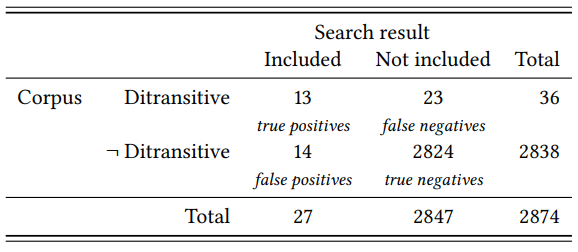

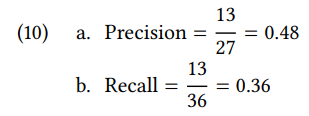

We can describe the quality of a data set that we have retrieved from a corpus in terms of two measures. First, the proportion of positives (i.e., strings returned by our query) that are true positives; this is referred to as precision, or as the positive predictive value, cf. (8a). Second, the proportion of all instances of our phenomenon that are true positives (i.e., that were actually returned by our query; this is referred to as recall, cf. (8b):1

Ideally, the value of both measures should be 1, i.e., our data should include all cases of the phenomenon under investigation (a recall rate of 100 percent) and it should include nothing that is not a case of this phenomenon (a precision of 100 percent). However, unless we carefully search our corpus manually (a possibility I will return to below), there is typically a trade-off between the two. Either we devise a query that matches only clear cases of the phenomenon we are interested in (high precision) but that will miss many less clear cases (low recall). Or we devise a query that matches as many potential cases as possible (high recall), but that will include many cases that are not instances of our phenomenon (low precision).

Let us look at a specific example, the English ditransitive construction, and let us assume that we have an untagged and unparsed corpus. How could we retrieve instances of the ditransitive? As the first object of a ditransitive is usually a pronoun (in the objective case) and the second a lexical NP (see, for example, Thompson & Koide 1987), one possibility would be to search for a pronoun followed by a determiner (i.e., for any member of the set of strings in (9a)), followed by any member of the set of strings in (9b)). This gives us the query in (9c), which is long, but not very complex:

(9) a. me, you, him, her, it, us, them

b. the, a, an, this, that, these, those, some, many, lots, my, your, his, her, its, our, their, something, anything

c. [word="(me|you|him|her|it|us|them)"%c] [word="(the|a|an|this|that|these|those|some|many|lots|my|your|his|her|its|our|their|something|anything)"%c]

Let us apply this query (which is actually used in Colleman & De Clerck 2011) to a freely available sample from the British Component of the International Corpus of English mentioned in Chapter 2 above (see the Study Notes to the current chapter for details). This corpus has been manually annotated, amongst other things, for argument structure, so that we can check the results of our query against this annotation.

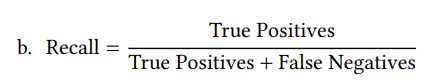

There are 36 ditransitive clauses in the sample, thirteen of which are returned by our query. There are also 2838 clauses that are not ditransitive, 14 of which are also returned by our query. Table 4.2 shows the results of the query in terms of true and false positives and negatives.

Table 4.2: Comparison of the search results

We can now calculate precision and recall rate of our query:

Clearly, neither precision nor recall are particularly impressive. Let us look at the reasons for this, beginning with precision.

While the sequence of a pronoun and a determiner is typical for (one type of) ditransitive clause, it is not unique to the ditransitive, as the following false positives of our query show:

(11) a. ... one of the experiences that went towards making me a Christian...

b. I still ring her a lot.

c. I told her that I’d had to take these tablets

d. It seems to me that they they tend to come from

e. Do you need your caffeine fix before you this

Two other typical structures characterized by the sequence pronoun-determiner are object-complement clauses (cf. 11a) and clauses with quantifying noun phrases (cf. 11b). In addition, some of the strings in (9b) above are ambiguous, i.e., they can represent parts of speech other than determiner; for example, that can be a conjunction, as in (9c), which otherwise fits the description of a ditransitive, and in (11d), which does not. Finally, especially in spoken corpora, there may be fragments that match particular search criteria only accidentally (cf. 11e). Obviously, a corpus tagged for parts of speech could improve the precision of our search results somewhat, by excluding cases like (9c–d), but others, like (9a), could never be excluded, since they are identical to the ditransitive as far as the sequence of parts-of-speech is concerned.

Of course, it is relatively trivial, in principle, to increase the precision of our search results: we can manually discard all false positives, which would increase precision to the maximum value of 1. Typically, our data will have to be manually annotated for various criteria anyway, allowing us to discard false positives in the process. However, the larger our data set, the more time consuming this will become, so precision should always be a consideration even at the stage of data retrieval.

Let us now look at the reasons for the recall rate, which is even worse than the precision. There are, roughly speaking, four types of ditransitive structures that our query misses, exemplified in (12a–e):

(12) a. How much money have they given you?

b. The centre [...] has also been granted a three-year repayment freeze.

c. He gave the young couple his blessing.

d. They have just given me enough to last this year.

e. He finds himself [...] offering Gradiva flowers.

The first group of cases are those where the second object does not appear in its canonical position, for example in interrogatives and other cases of left-dislocation (cf. 12a), or passives (12b). The second group of cases are those where word order is canonical, but either the first object (12c) or the second object (12d) or both (12e) do not correspond to the query.

Note that, unlike precision, the recall rate of a query cannot be increased after the data have been extracted from the corpus. Thus, an important aspect in constructing a query is to annotate a random sample of our corpus manual for the phenomenon we are interested in, and then to check our query against this manual annotation. This will not only tell us how good or bad the recall of our query is, it will also provide information about the most frequent cases we are missing. Once we know this, we can try to revise our query to take these cases into account. In a POS-tagged corpus, we could, for example, search for a sequence of a pronoun and a noun in addition to the sequence pronoun-determiner that we used above, which would give us cases like (12d), or we could search for forms of be followed by a past participle followed by a determiner or noun, which would give us passives like those in (12b).

In some cases, however, there may not be any additional patterns that we can reasonably search for. In the present example with an untagged corpus, for example, there is no additional pattern that seems in any way promising. In such cases, we have two options for dealing with low recall: First, we can check (in our manually annotated subcorpus) whether the data that were recalled differ from the data that were not recalled in any way that is relevant for our research question. If this is not the case, we might decide to continue working with a low recall and hope that our results are still generalizable – Colleman & De Clerck (2011), for example, are mainly interested in the question which classes of verbs were used ditransitively at what time in the history of English, a question that they were able to discuss insightfully based on the subset of ditransitives matching their query.

If our data do differ along one or more of the dimensions relevant to our research project, we might have to increase the recall at the expense of precision and spend more time weeding out false positives. In the most extreme case, this might entail extracting the data manually, so let us return to this possibility in light of the current example.

4.1.3 Manual, semi-manual and automatic searches

In theory, the highest quality search results would always be achieved by a kind of close reading, i.e. a careful word-by-word (or phrase-by-phrase, clause-by-clause) inspection of the corpus. As already discussed in Chapter 2, this may sometimes be the only feasible option, either because automatic retrieval is difficult (as in the case of searching for ditransitives in an untagged corpus), or because an automatic retrieval is impossible (e.g., because the phenomenon we are interested in does not have any consistent formal properties, a point we will return to presently).

As discussed above, in the case of words and in at least some cases of grammatical structures, the quality of automatic searches may be increased by using a corpus annotated automatically with part-of-speech tags, phrase tags or even grammatical structures. As discussed in Section 3.2.2.1 of Chapter 3, this brings with it its own problems, as automatic tagging and grammatical parsing are far from perfect. Still, an automatically annotated corpus will frequently allow us to define searches whose precision and recall are higher than in the example above.

In the case of many other phenomena, however, automatic annotation is simply not possible, or yields a quality so low that it simply does not make sense to base queries on it. For example, linguistic metaphors are almost impossible to identify automatically, as they have little or no properties that systematically set them apart from literal language. Consider the following examples of the metaphors ANGER IS HEAT and ANGER IS A (HOT) LIQUID (from Lakoff & Kövecses 1987: 203):

(13) a. Boy, am I burned up.

b. He’s just letting off steam.

c. I had reached the boiling point.

The first problem is that while the expressions in (13a–c) may refer to feelings of anger or rage, they can also occur in their literal meaning, as the corresponding authentic examples in (14a–c) show:

(14) a. “Now, after I am burned up,” he said, snatching my wrist, “and the fire is out, you must scatter the ashes. ...” (Anne Rice, The Vampire Lestat)

b. As soon as the driver saw the train which had been hidden by the curve, he let off steam and checked the engine... (Galignani, Accident on the Paris and Orleans Railway)

c. Heat water in saucepan on highest setting until you reach the boiling point and it starts to boil gently. (www.sugarfreestevia.net)

Obviously, there is no query that would find the examples in (13) but not those in (14). In contrast, it is very easy for a human to recognize the examples in (14) as literal. If we are explicitly interested in metaphors involving liquids and/or heat, we could choose a semi-manual approach, first extracting all instances of words from the field of liquids and/or heat and then discarding all cases that are not metaphorical. This kind of approach is used quite fruitfully, for example, by Deignan (2005), amongst others.

If we are interested in metaphors of anger in general, however, this approach will not work, since we have no way of knowing beforehand which semantic fields to include in our query. This is precisely the situation where exhaustive retrieval can only be achieved by a manual corpus search, i.e., by reading the entire corpus and deciding for each word, phrase or clause, whether it constitutes an example of the phenomenon we are looking for. Thus, it is not surprising that many corpus-linguistic studies on metaphor are based on manual searches (see, for example, Semino & Masci (1996) orJäkel (1997) for very thorough early studies of this kind).

However, as mentioned in Chapter 2, manual searches are very time-consuming and this limits their practical applicability: either we search large corpora, in which case manual searching is going to take more time and human resources than are realistically available, or we perform the search in a realistic time-frame and with the human resources realistically available, in which case we have to limit the size of our corpus so severely that the search results can no longer be considered representative of the language as a whole. Thus, manual searches are useful mainly in the context of research projects looking at a linguistic phenomenon in some clearly defined subtype of language (for example, metaphor in political speeches, see Charteris-Black 2005).

When searching corpora for such hard-to-retrieve phenomena, it may sometimes be possible to limit the analysis usefully to a subset of the available data, as shown in the previous subsection, where limiting the query for the ditransitive to active declarative clauses with canonical word order still yielded potentially useful results. It depends on the phenomenon and the imagination of the researcher to find such easier-to-retrieve subsets.

To take up the example of metaphors introduced above, consider the examples in (15), which are quite close in meaning to the corresponding examples in (13a–c) above (also from Lakoff & Kövecses 1987: 189, 203):

(15) a. He was consumed by his anger.

b. He was filled with anger.

c. She was brimming with rage.

In these cases, the PPs by/with anger/rage make it clear that consume, (be) filled and brimming are not used literally. If we limit ourselves just to metaphorical expressions of this type, i.e. expressions that explicitly mention both semantic fields involved in the metaphorical expression, it becomes possible to retrieve metaphors of anger semi-automatically. We could construct a query that would retrieve all instances of the lemmas ANGER, RAGE, FURY, and other synonyms of anger, and then select those results that also contain (within the same clause or within a window of a given number of words) vocabulary from domains like ‘liquids’, ‘heat’, ‘containers’, etc. This can be done manually by going through the concordance line by line (see, e.g., Tissari (2003) and Stefanowitsch (2004; 2006c), cf. also Section 11.2.2 of Chapter 11), or automatically by running a second query on the results of the first (or by running a complex query for words from both semantic fields at the same time, see Martin 2006). The first approach is more useful if we are interested in metaphors involving any semantic domain in addition to ‘anger’, the second approach is more useful (because more economical) in cases where we are interested in metaphors involving specific semantic domains.

Limiting the focus to a subset of cases sharing a particular formal feature is a feasible strategy in other areas of linguistics, too. For example, Heyd (2016) wants to investigate “narratives of belonging” – roughly, stretches of discourse in which members of a diaspora community talk about shared life experiences for the purpose of affirming their community membership. At first glance, this is the kind of potentially fuzzy concept that should give corpus linguists nightmares, even after Heyd (2016: 292) operationalizes it in terms of four relatively narrow criteria that the content of a stretch of discourse must fulfill in order to count as an example. Briefly, it must refer to experiences of the speaker themselves, it must mention actual specific events, it must contain language referring to some aspect of migration, and it must contain an evaluation of the events narrated. Obviously it is impossible to search a corpus based on these criteria. Therefore, Heyd chooses a two-step strategy (Heyd 2016: 294): first, she queries her corpus for the strings born in, moved to and grew up in, which are very basic, presumably wide-spread ways of mentioning central aspects of one’s personal migration biography, and second, she assesses the stretches of discourse within which these strings occur on the basis of her criteria, discarding those that do not fulfill all four of them (this step is somewhere between retrieval and annotation).

As in the example of the ditransitive construction discussed above, retrieval strategies like those used by Stefanowitsch (2006c) and Heyd (2016) are useful where we can plausibly argue – or better yet, show – that the results are comparable to the results we would get if we extracted the phenomenon completely.

In cases, where the phenomenon in question does not have any consistent formal features that would allow us to construct a query, and cannot plausibly be restricted to a subset that does have such features, a mixed strategy of elicitation and corpus query may be possible. For example, Levin (2014) is interested in what he calls the “Bathroom Formula”, which he defines as “clauses and phrases expressing speakers’ need to leave any ongoing activity in order to go to the bathroom” Levin (2014: 2), i.e. to the toilet (sorry to offend American sensitivities2 ). This speech act is realized by phrases as diverse as (16a–c):

(16) a. I need a pee. (BNC A74)

b. I have to go to the bathroom. (BNC CEX)

c. I’m off to powder my nose. (BNC FP6)

There is no way to search for these expressions (and others with the same function) unless you are willing to read through the entire BNC – or unless you already know what to look for. Levin (2014) chooses a strategy based on the latter: he first assembles a list of expressions from the research literature on euphemisms and complement this by asking five native speakers for additional examples. He then searches for these phrases and analyzes their distribution across varieties and demographic variables like gender and class/social stratum.

Of course, this query will miss any expressions that were not part of their initial list, but the conditional distribution of those expressions that are included may still yield interesting results – we can still learn something about which of these expressions are preferred in a particular variety, by a particular group of speakers, in a particular situation, etc.

If we assemble our initial list of expressions systematically, perhaps from a larger number of native speakers that are representative of the speech community in question in terms of regional origin, sex, age group, educational background, etc., we should end up with a representative sample of expressions to base our query on. If we make our query flexible enough, we will likely even capture additional variants of these expressions. If other strategies are not available, this is certainly a feasible approach. Of course, this approach only works with relatively routinized speech event categories like the Bathroom Formula – greetings and farewells, asking for the time, proposing marriage, etc. – which, while they do not have any invariable formal features, do not vary infinitely either.

To sum up, it depends on the phenomenon under investigation and on the research question whether we can take an automatic or at least a semi-automatic approach or whether we have to resort to manual data extraction. Obviously, the more completely we can extract our object of research from the corpus, the better.

1 There are two additional measures that are important in other areas of empirical research but do not play a central role in corpus-linguistic data retrieval. First, the specificity or true negative rate – the proportion of negatives that are incorrectly included in our data (i.e. false negatives); second, negative predictive value – the proportion of negatives (i.e., cases not included in our search) that are true negatives (i.e., that are correctly rejected). These measures play a role in situations where a negative outcome of a test is relevant (for example, with medical diagnoses); in corpus linguistics, this is generally not the case. There are also various scores that combine individual measures to give us an overall idea of the accuracy of a test, for example, the F1 score, defined as the harmonic mean of precision and recall. Such scores are useful in information retrieval or machine learning, but less so in corpus-linguistic research projects, where precision and recall must typically be assessed independently of, and weighed against, each other.

2 See Manning & Melchiori (1974), who show that the word toilet is very upsetting even to American college students.