10.2: Types of Learning and Biological Adaptation

This page is a draft and under active development. Please forward any questions, comments, and/or feedback to the ASCCC OERI (oeri@asccc.org).

Learning Objectives

- Describe the various types of learning and how each contributes to adaptation

- Discuss in what ways both classical and operant conditioning involve the learning of predictive relations between different types of events in the world

- Discuss the learning of predictive relationships among events in the environment and the navigation of "the causal texture of the world"

- Describe specialized forms of learning and how each contributes to solution of a particular adaptive problem (problem domain) in a particular species

- Explain classical (Pavlovian) conditioning and instrumental (operant) conditioning

- Describe similarities and differences between the two types of conditioning

- Explain the concept of adaptively specialized learning and give examples

- Discuss the four aspects of observational learning according to Social Learning Theory

- Describe how habituation conserves resources

- Describe research that suggests cognitive learning in animals

Overview

Learning brings to mind school, memorization, tests, and study. But these associations reflect only a single type of learning: human verbal learning. As you may already know, learning is a much broader phenomenon. In this module, we examine learning in its various forms and the ways in which it advances adaptation to the environment. First of all, learning of various sorts occurs in a wide range of animal species. Experiments have shown that even an insect, the honey bee, can learn (for instance, where a food source is located). Furthermore, across species, a wide range of behaviors can be acquired or modified by learning, giving behavior in many species a great deal of plasticity. In addition, we now know that learning is not a single unitary capacity but a family of different, though related, processes, ranging from imprinting to cognitive learning. As discussed previously, behavior is a type of adaptation. It is not random but quite orderly, and as such it depends on information for its organization. Remember that the information that organizes behavioral adaptations can come from genes, from learning, or from a combination of both. In this module, as a preliminary to discussing the neural mechanisms of learning, we examine several types of learning and how they interact with genetic information to serve adaptation. Imprinting is a clear example of learning that is biologically (genetically) "prepared" (controlled and facilitated by innate factors as a consequence of evolution). Both forms of conditioning, classical and operant, involve learning the predictive relationships between events in the environment, and, like imprinting, both involve innate genetic facilitation (biological preparedness) and biological constraints.
However, in conditioning, genetic influences on learning involve much more abstract and relational features of the world than they do in more clearly specialized forms of learning such as imprinting and learned taste aversion. In every kind of learning, experience fills in informational details that are too variable, too short-term, and too individual to be captured by natural selection and thereby encoded into genes. By contrast, more abstract and general features, common to particular learning situations or problem types across generations, are captured by natural selection and genetically encoded; they provide innate, genetic information about the problem type (problem domain) that guides and facilitates the learning.

Habituation and Adaptation

Habituation is a simple form of learning that produces a decrease in response to a repeated stimulus that has no adaptive significance. In other words, as an adaptively unimportant stimulus is repeatedly presented to an animal, the animal gradually ceases responding to it as it learns that the stimulus holds no information that might affect its biological fitness (survival and reproduction). Prairie dogs typically sound an alarm call when threatened by a predator, but they become habituated to the sound of human footsteps when no harm is associated with this sound. They therefore no longer respond to human footsteps with an alarm call, nor do they run and hide; instead, they spend their time and energy on more productive behaviors. Habituation is a form of non-associative learning because the stimulus is not associated with any punishment or reward.

Habituation is highly adaptive. It increases the efficient use of an animal's limited resources. Imagine birds feeding in a field along a highway. The first time a bird feeds in this situation, it will probably fly away when a car speeds past. However, over time, as more cars pass by and nothing harmful or otherwise adaptively significant happens to the bird, it learns to ignore the passing traffic. Instead of flying away, it simply continues to feed, saving time, metabolic energy, and the limited processing capacity of its brain. Habituation conserves these important biological resources of the organism, resources that would otherwise be wasted by responding to adaptively irrelevant stimuli. Without habituation, behavior would lose biological efficiency, decreasing biological fitness in the struggle for survival and reproduction.

Habituation occurs when your brain has already extracted all the adaptive information that a stimulus or an event holds. Once habituation has occurred, as long as the habituated stimulus or event is unchanging, it makes good adaptive sense for the animal to ignore it. Habituation has done its job by conserving the animal's biological resources. But habituation is only half the story. What happens when, after habituation, a change in the stimulus or situation occurs? Stimulus change might signal that something important has happened. If so, the animal must reengage the processing capacity of its brain, its attentional resources, to evaluate whether the stimulus change might signal something that is adaptively important that may require a behavioral response. This recovery of responding to a habituated stimulus is called dishabituation (the undoing of habituation as the organism now responds to the stimulus situation it had previously stopped responding to). For example, suppose there is a loud backfire from one of the passing cars. The birds fly off, at least temporarily.
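The habituation/dishabituation cycle described above can be caricatured in a few lines of code. The sketch below is a toy model, not a description of any real neural mechanism: the function name `habituation_responses`, the multiplicative `decay` factor, and the rule that any change of stimulus restores the full response are all hypothetical simplifications chosen only to mirror the bird-and-traffic example.

```python
def habituation_responses(stimuli, decay=0.5, initial=1.0):
    """Toy model (hypothetical parameters) of habituation and dishabituation.

    Each repetition of the same stimulus multiplies the next response by
    `decay` (habituation). Any change from the previous stimulus restores
    the full `initial` response (dishabituation).
    """
    responses = []
    previous = None
    strength = initial
    for stimulus in stimuli:
        if stimulus != previous:
            strength = initial      # stimulus change: re-engage attention
        responses.append(strength)
        strength *= decay           # repeated exposure weakens the next response
        previous = stimulus
    return responses


# Birds by the road: several passing cars, then a sudden backfire, then cars again.
traffic = ["car"] * 4 + ["backfire"] + ["car"] * 2
print(habituation_responses(traffic))
```

In this sketch the escape response to passing cars shrinks with each repetition, jumps back to full strength at the backfire, and then begins to habituate again, which is the pattern the text describes qualitatively.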

Figure \(\PageIndex{1}\): Habituation is adaptive. Birds feeding along a road eventually habituate to passing traffic, which keeps them from wasting time and energy by flying away (escape behavior) unnecessarily when there is no real threat and increases the time and energy available for feeding (Image from Wikimedia Commons; File:Birds feeding on newly ploughed land with Ballymagreehan Hill in the background - geograph.org.uk - 2202208.jpg; https://commons.wikimedia.org/wiki/F..._-_2202208.jpg; by Eric Jones; licensed under the Creative Commons Attribution-Share Alike 2.0 Generic license.).

Another example comes from a former student of mine who had been in the Navy submarine corps. He said that when he was assigned to his first sub, he couldn't sleep for several nights because of the loud metallic noise coming from the engines. However, after several nights of hearing the racket, he finally "got used to it": he habituated to a repeated stimulus that had no adaptive significance, and he was then able to sleep soundly. Can you guess what would now wake him up? It was the absence of the noise, when the engines were shut down, that would immediately awaken him. Maybe the sub was under attack, or the engines were damaged and the sub was sinking, or perhaps the sub had docked in port and there could be adaptive consequences for the sailor, such as a potential romantic opportunity on shore or other adaptive resources such as preferred foods. Habituation is undone by stimulus change. Habituation and dishabituation are constantly at work, efficiently conserving or deploying the organism's biological resources (metabolic energy, time, and brain processing capacity) as environmental circumstances require. The net effect is an efficient and adaptive distribution of the organism's biological resources, thereby increasing biological fitness.

Figure \(\PageIndex{2}\): After habituation to the loud sound of their submarine's engines, sailors sleep through the noise, but are awakened by the silence if the engines stop. Stimulus change reverses habituation. This is called dishabituation and is highly adaptive; see text (Image from Wikimedia Commons; File:Vladivostok Submarine S-56 Forward torpedo room P8050522 2475.jpg; https://commons.wikimedia.org/wiki/F...50522_2475.jpg; by Alexxx1979; licensed under the Creative Commons Attribution-Share Alike 4.0 International license).

One lesson is clear: stimulus change is very potent in causing the brain to become alert and responsive. This implies that the brain must hold an ongoing representation, or neural model, of the current situation and must respond to any mismatch between that ongoing memory and the current stimulus situation (see chapter on Intelligence, Cognition, and Language). This is a very adaptive property of the brain's functioning. For example, imagine you are studying psychology late at night. Your window is open and a breeze gently bangs the blinds against the window frame as you read. You will probably habituate to the sound and pay no attention to it, to the point where you don't even hear it as you continue to study. But if the lights in your house suddenly go out, you will probably dishabituate and pay attention to every little sound, including the sounds coming from the blinds. Your brain is programmed to respond to stimulus change, to novelty, because in stimulus change there may be information important for survival and reproduction, demanding an adaptive response. Could those sounds in the dark be an intruder who might harm you or your family (who, by the way, carry a portion of your genes; see discussion of kin selection in the chapter on evolution and genetics)?

If there is no danger or other adaptively significant event associated with the stimulus change, then habituation will again occur as the animal or human learns that the stimulus change can be safely ignored. As noted above, habituation prevents the animal or human from wasting time, energy, and processing capacity on things that have no adaptive importance, thereby increasing behavioral efficiency. Habituation is an elegant mechanism for conserving biological resources and thus contributes greatly to adaptation and biological fitness (survival and reproduction). Interestingly, habituation mechanisms appear to be highly conserved across a wide range of species, emphasizing the importance of habituation for survival (see Schmid et al., 2010).

Conditioning and Biological Adaptation

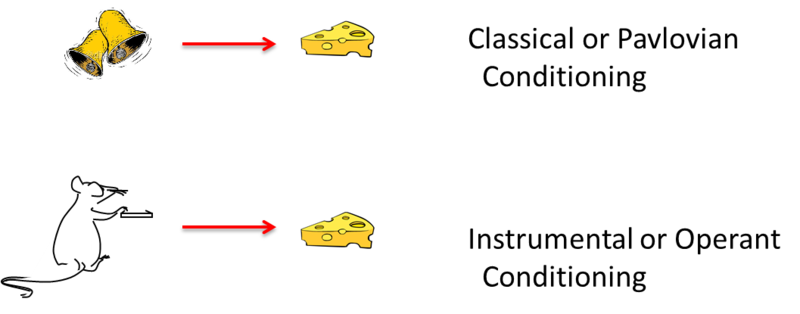

Basic principles of learning are always operating and always influencing human and animal behavior. This section continues by discussing two fundamental forms of associative learning: classical (Pavlovian) and operant (instrumental) conditioning. Through them, we learn to associate, respectively, 1) stimuli in the environment, or 2) our own behaviors, with adaptively significant events, such as rewards and punishments or other stimuli. The two types of learning have been intensively studied because they have powerful effects on behavior, and because they provide methods that allow scientists to analyze learning processes rigorously and in detail, an endeavor important to biological psychologists searching for the physical basis of learning and memory in the brain. This module describes how both classical and operant conditioning involve the learning of predictive relationships between events (if event A occurs, then event B is likely to follow), and how this contributes to adaptation. The module concludes by discussing adaptively specialized forms of learning and observational learning, which are largely distinct from classical and operant conditioning.

Classical Conditioning

Many people are familiar with the classic study of “Pavlov’s dog,” but rarely do they understand the significance of Pavlov's discovery. In fact, Pavlov’s work helps explain why some people get anxious just looking at a crowded bus, why the sound of a morning alarm is so hated, and even why we swear off certain foods we’ve only tried once. Classical (or Pavlovian) conditioning is one of the fundamental ways we learn about the world, specifically about the predictive relationships between events in it. This involves learning what leads to what in an organism's environment. This is extremely valuable adaptive information that animals and humans appear to incorporate into brain-mediated cognitive models of how the world works, information that allows prediction and therefore improved organization of behavior into adaptive patterns (a topic to be discussed more in the chapter on Intelligence, Cognition, and Language). But classical conditioning is far more than just a theory of learning; it is also arguably a theory of identity. Your favorite music, clothes, even political candidate, might all be a result of the same process that makes a dog drool at the sound of a bell.

Figure \(\PageIndex{3}\): Does your dog learn to beg for food because you reinforce her by feeding her from the table? Classical conditioning signals to your dog when food reward may be available and operant conditioning (see below) occurs when begging is reinforced by a food reward [Image: David Mease, https://nobaproject.com/modules/cond...g-and-learning, CC BY-NC 2.0]

In his famous experiment, Pavlov rang a bell and then gave a dog some food. After repeating this pairing multiple times, the dog eventually treated the bell as a signal for food, and began salivating in anticipation of the treat. This kind of result has been reproduced in the lab using a wide range of signals (e.g., tones, light, tastes, settings) paired with many different events besides food (e.g., drugs, shocks, illness; see below).

We now believe that this same learning process, classical conditioning, is engaged, for example, when humans associate a drug they’ve taken with the environment in which they’ve taken it; when they associate a stimulus (e.g., a symbol for vacation, like a big beach towel) with an emotional event (like a burst of happiness); and when a cat associates the sound of an electric can opener with feeding time. Classical conditioning is strongest if the conditioned stimulus (CS) and unconditioned stimulus (US) are intense or salient. It is also best if the CS and US are relatively new and the organism hasn’t been frequently exposed to them before. And it is especially strong if the organism’s biology (its genetic evolution) has prepared it to associate a particular CS and US. For example, rats, coyotes, and humans are naturally inclined by natural selection and the resulting evolution of their brain circuitry to associate an illness with a flavor, rather than with a light or tone. Although classical conditioning may seem “old” or “too simple” a theory, it is still widely studied today because it is a straightforward test of associative learning that can be used to study other, more complex behaviors, and biological psychologists can use it to study how at least some forms of learning occur in the brain.

Conditioning Involves Learning Predictive Relations

Pavlov was studying the salivation reflex, reflexive drooling in response to food placed in the mouth. A reflex is an innate, adaptive, genetically built-in stimulus-response (S-R) relationship. In this case, the stimulus is food in the mouth, the unconditioned or unconditional stimulus (US; "unconditional" because its effect does not depend on prior learning), and the unconditional response (UR; likewise not dependent on prior learning) is salivation to food in the mouth. Salivation lubricates the food and starts to break it down, facilitating mastication, swallowing, and digestion; this is the adaptive function of the salivation reflex (US-UR).

Recall that Pavlov found that if he rang a bell just before feeding his dogs, the dogs came to associate the sound of the bell with the coming presentation of food. Thus, after this classical conditioning had occurred, the bell alone (the conditioned or conditional stimulus, "conditional" upon prior learning; CS) caused the dog to salivate before the presentation of food. The conditional stimulus (Pavlov's original term, which was mistranslated from Russian as "conditioned") is a signal that has no importance to the organism until it is paired with something that does have adaptive significance, in this case, food. Reliable pairing of CS and US close together in time (temporal contiguity) is important for classical conditioning. However, temporal contiguity alone is not sufficient. The pairing of stimuli must be reliable so that a predictive relationship is maintained between CS and US. Predictiveness between CS and US determines whether or not an association is formed. If the CS does not predict occurrence of the US, no conditioning occurs (Gallistel, et al., 1991).

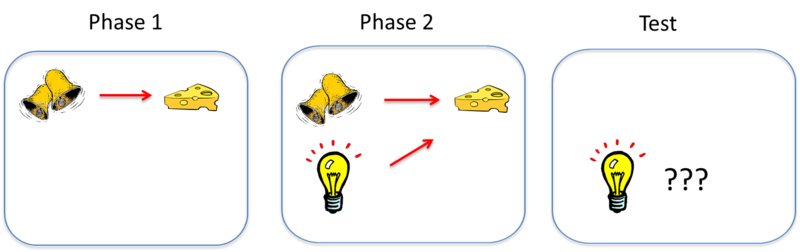

Evidence that classical conditioning involves the learning of predictive relations between stimuli comes from a phenomenon known as blocking (see Kamin, 1969). In blocking, if a CS already predicts the US, and if a new CS is added, no association is formed between the new CS and the US, no matter how many times or how closely in time they are paired together. The reason? The original CS already predicts the US, so no new or additional predictive information about occurrence of the US is added by the new CS. Thus, the new CS is ignored as a source of predictive information and is therefore "blocked" from becoming a signal for the US, and no conditioning between the new CS and US occurs. Furthermore, other studies show that if the predictive relation between CS and US is "diluted" or weakened by any presentations of the US without the CS preceding it, conditioning between CS and US is impeded. This is because if the US can occur without the CS preceding the US, then this fact reduces the predictive power of the CS and violates the contingency between CS and US, impairing conditioning. This shows that a predictive contingency between CS and US is what is learned in classical conditioning and that contingency is the necessary condition for conditioning to take place (Rescorla, 1966, 1968).
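One common way to put a number on the CS-US contingency discussed above is the difference between two conditional probabilities, often written ΔP = P(US | CS) - P(US | no CS). The text does not itself use this formula; the sketch below is an illustration under that assumption, and the function name `delta_p` and the trial lists are hypothetical data. It shows why unsignaled presentations of the US "dilute" the predictive value of the CS even when every CS is still followed closely by the US.

```python
def delta_p(trials):
    """Contingency measure: P(US | CS) - P(US | no CS).

    `trials` is a list of (cs_present, us_present) boolean pairs
    (hypothetical observation periods, not real experimental data).
    """
    us_with_cs = [us for cs, us in trials if cs]
    us_without_cs = [us for cs, us in trials if not cs]
    p_us_given_cs = sum(us_with_cs) / len(us_with_cs)
    p_us_given_no_cs = sum(us_without_cs) / len(us_without_cs)
    return p_us_given_cs - p_us_given_no_cs


# Bell always followed by food; food never arrives without the bell.
reliable = [(True, True)] * 10 + [(False, False)] * 10
# Same 10 bell-food pairings, but food also arrives unsignaled in half the bell-free periods.
diluted = [(True, True)] * 10 + [(False, True)] * 5 + [(False, False)] * 5
# Same pairings, but food is just as likely without the bell: contiguity without contingency.
contiguity_only = [(True, True)] * 10 + [(False, True)] * 10

for name, trials in [("reliable", reliable), ("diluted", diluted), ("contiguity only", contiguity_only)]:
    print(name, delta_p(trials))
```

In all three cases the bell is followed by food on every bell trial, so temporal contiguity is identical; only the contingency differs, falling from 1.0 to 0.5 to 0.0 as unsignaled food becomes more common.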

Both of these important research findings emphasize that conditioning is about learning to predict what leads to what in the environment--classical conditioning is the learning of predictive relations between stimuli, leading to the learned emission of responses that prepare for the coming US. Blocking and other related effects indicate that the learning process tends to take in the most valid predictors of adaptively significant events and ignore the less useful ones. This is an exceedingly important process that allows the animal or human to learn important adaptive information about its specific environment.

For example, as a result of classical conditioning, Pavlov's dogs learned to anticipate, to predict, one event (US) signaled by earlier occurrence of another (CS). In a sense, the dogs use the bell as a signal to predict that food is on its way; therefore they salivate when the bell is rung, because they now expect that food is coming next. After conditioning, when Pavlov presented the bell, the dogs salivated to the bell alone (conditional response, CR), whereas before conditioning they did not. Thus, we have a change in behavior as a result of experience--learning. The animal has acquired information about its environment--i.e. predictive information. The world is full of predictive and causal relations. If the organism is to effectively organize its behavior to maximize adaptation, it must be able to learn these relations and put that information to adaptive use. Classical conditioning is a mechanism for learning these predictive (sometimes causal) relationships among things in its specific environment.

Figure \(\PageIndex{4}\): Illustration of blocking. Phase 1: Conditioning where bell is CS, food is US. Phase 2: after Phase 1 conditioning has been completed a second CS, light, is added. Test: When light CS is tested, no CR occurs to it; no conditioning to the light CS occurs. Reason: Bell CS already predicts food; therefore light does not add any additional predictive information so no conditioning to light CS occurs. Conclusion: Classical conditioning involves learning of predictive relations between stimuli. An animal first learns to associate one CS—call it stimulus A—with a US. In the illustration above, the sound of a bell (stimulus A) is paired with the presentation of food. Once this association is learned, in a second phase, a second stimulus—stimulus B—is presented alongside stimulus A, such that the two stimuli are paired with the US together. In the illustration, a light is added and turned on at the same time the bell is rung. However, because the animal has already learned the association between stimulus A (the bell) and the food, the animal doesn’t learn an association between stimulus B (the light) and the food. That is, the conditioned response only occurs during the presentation of stimulus A, because the earlier conditioning of A “blocks” the conditioning of B when B is added to A. (Image from M. Boulton, NOBA, https://nobaproject.com/modules/cond...g-and-learning; courtesy of Bernard W. Balleine).
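The text explains blocking verbally. One widely used formal account, which the text does not itself present, is the Rescorla-Wagner model: on each trial, every cue that is present changes its associative strength V by alpha*beta*(lambda - sum of V over all present cues). The sketch below is a minimal version of that rule; the function name, the learning-rate value `alpha_beta=0.3`, and the trial counts are hypothetical choices for illustration. It reproduces the logic of the figure: once the bell fully predicts food, there is almost no prediction error left for the light to absorb.

```python
def rescorla_wagner(trials, alpha_beta=0.3, lam=1.0):
    """Minimal Rescorla-Wagner update (hypothetical parameter values).

    trials: list of (set_of_cues_present, us_present) pairs.
    alpha_beta: combined learning-rate parameter.
    lam: maximum associative strength the US can support.
    Returns a dict mapping each cue to its associative strength V.
    """
    strengths = {}
    for cues, us_present in trials:
        # Summed prediction of the US from every cue present on this trial.
        prediction = sum(strengths.get(c, 0.0) for c in cues)
        # Prediction error: what happened minus what was predicted.
        error = (lam if us_present else 0.0) - prediction
        for c in cues:
            # Every present cue is updated by the same shared error term.
            strengths[c] = strengths.get(c, 0.0) + alpha_beta * error
    return strengths


phase1 = [({"bell"}, True)] * 30            # Phase 1: bell -> food
phase2 = [({"bell", "light"}, True)] * 30   # Phase 2: bell + light -> food

blocked = rescorla_wagner(phase1 + phase2)  # bell pretrained, then compound
control = rescorla_wagner(phase2)           # compound from the start, no pretraining
print("light after bell pretraining:", round(blocked["light"], 3))
print("light without pretraining:   ", round(control["light"], 3))
```

In the control condition the bell and light share the available associative strength (about 0.5 each), whereas after bell pretraining the light gains almost none. This model captures blocking but does not capture every finding in this section; for example, it treats extinction as unlearning, whereas the text describes extinction as new learning.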

Classical conditioning is anticipatory, and preparatory for future appearance of the US. A classical CS (e.g., the bell) does not merely elicit a simple, unitary reflex. Pavlov emphasized salivation because that was the only response he measured. But his bell almost certainly elicited a whole system of responses that functioned to get the organism ready for the upcoming US (food). For example, in addition to salivation, CSs (such as the bell) that signal that food is coming also elicit the secretion of gastric acid, pancreatic enzymes, and insulin (which gets blood glucose into cells). All of these responses anticipate coming food and prepare the body for efficient digestion, improving adaptation.

Classical conditioning is also involved in other aspects of eating. Flavors associated with certain nutrients (such as sugar or fat) can become preferred without any awareness of the pairing (an example of implicit or unconscious learning). For example, protein is a US that your body automatically craves more of once you start to consume it (the craving is the UR). Because protein is highly concentrated in meat, the flavor of meat becomes a CS (a cue that protein is on the way), which perpetuates the cycle of craving for yet more meat (this automatic bodily reaction is now a CR).

In a general way, classical conditioning occurs whenever neutral stimuli are associated with adaptively significant events. Classical conditioning is of great adaptive importance in a wide range of circumstances across species. Significantly, Gallistel (1992) points out that classical conditioning permits an animal to map what Tolman and Brunswik (1935) called the "causal texture of the environment." Understanding what causes what in the environment is highly adaptive because it allows successful, accurate prediction about events and makes effective manipulation of the environment possible. In a sense, in classical conditioning the animal learns an if-then contingency, or if-then predictive relationship, between two events in its environment. If event A occurs, then event B is likely to follow. If the bell rings, then food is likely coming next. Learning what leads to what in the world permits prediction and therefore much more effective organization of behavioral adaptation than would otherwise be possible. In the evolution of animal brains, we can speculate that animals who had the capacity in their brains for classical conditioning would have had an adaptive advantage, and thus a selective (as in natural selection) advantage, over other members of their species that lacked that ability or possessed it to a lesser degree (Koenigshofer, 2011, 2016). If you can predict that something is coming, then you can better prepare for it and increase the chances of an adaptive outcome for you (and your genes). Classical conditioning has apparently been conserved during evolution, given that it is found in an enormous variety of animals, from humans to sea slugs.

Modern studies of classical conditioning use a very wide range of CSs and USs and measure a wide range of conditioned responses including emotional responses. If an experimenter sounds a tone just before applying a mild shock to a rat’s feet, the tone will elicit fear or anxiety after one or two pairings. Similar fear conditioning plays a role in creating many anxiety disorders in humans, such as phobias and panic disorders, where people associate cues (such as closed spaces, or a shopping mall) with panic or other emotional trauma (Mineka & Zinbarg, 2006). Here, rather than a response like salivation, the CS triggers an emotion. Have you experienced conditioned emotional responses to formerly emotionally neutral stimuli? How about the emotional response you might have to a particular song, or a particular place, that once was your and your ex-partner's favorite song or the place you would go to meet one another? Or after you break up with someone, you seem to see their car (or cars like theirs) everywhere and you have a brief moment of anticipation that you might see them. Classical conditioning plays a large role in our emotional lives, and in the emotional lives of other animals as well.

Figure \(\PageIndex{5}\): Intense emotions can be classically conditioned to originally neutral stimuli such as places or songs associated with a special person. (Image from Wikimedia Commons; File:A couple looking at the sea.jpg; https://commons.wikimedia.org/wiki/F...at_the_sea.jpg; by Joydip dutt; licensed under the Creative Commons Attribution-Share Alike 4.0 International license).

Where classical conditioning takes place in the nervous system varies with the nature of the stimuli involved. For example, an auditory CS such as a bell will involve auditory pathways, including the auditory system's medial geniculate nucleus of the thalamus (Fanselow & Poulos, 2005) and auditory cortex in the temporal lobe, while a visual CS will involve visual pathways, including the visual system's lateral geniculate nucleus of the thalamus and visual areas of cortex. A US such as food will involve taste pathways, whereas a painful shock US will involve pain and fear pathways. Researchers have identified a number of brain areas that become active during fear conditioning. In response to a painful shock during conditioning, there is increased neural activity in the red nucleus, amygdala, dorsal striatum, brainstem, insula, and parts of the anterior cingulate cortices, whereas anticipation of a shock that was not delivered increased neural activity only in the red nucleus and the anterior insular and dorsal anterior cingulate cortices (Linnman, et al., 2011). Other biological psychologists found that the cerebellum has a special role in simpler forms of conditioning, such as conditioning of the eye blink reflex in rabbits (Thompson & Steinmetz, 2009), whereas more complex conditioning involves the hippocampus and hippocampal-cerebellar interactions (Schmajuk & DiCarlo, 1991). Research by scientists such as Eric Kandel (1976) on the neural mechanisms of learning suggests that at the level of the synapse the mechanisms for learning may be very similar for all types of learning, across species, involving changes in synaptic conductance. We will consider mechanisms of learning at the synaptic level later in this chapter.

Pavlov discovered a number of phenomena of classical conditioning. He found that once his dogs were classically conditioned to one bell (one CS), they would also respond (produce a CR, salivation in this case) to other, similar stimuli (other bells). You may recall from Introductory Psychology that this is called stimulus generalization. The more similar a new stimulus was to the original CS, the stronger the CR to the new stimulus; this graded relationship is called the stimulus generalization gradient. For example, if you get bitten by a German Shepherd, you will probably be a little afraid of all dogs (stimulus generalization), more afraid of big dogs, and even more afraid of German Shepherds (stimulus generalization gradient). Clearly, stimulus generalization is very adaptive, because it permits you to make predictions not only about the original stimulus but also about similar stimuli, that is, stimuli of the same category. Inferences and predictions based on generalization from one member of a category to other members of the same category are a prominent feature of cognition in intelligent creatures, including humans (see chapter on Intelligence, Cognition, and Language).
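A generalization gradient can be sketched as a function of the distance between a test stimulus and the trained CS along some physical dimension. The sketch below assumes, purely for illustration, a bell-shaped (Gaussian) gradient over tone frequency with a trained CS at 1000 Hz; the function name, the `width` value, and the choice of tone frequency as the dimension are hypothetical, and real gradients vary in shape across species and procedures.

```python
import math


def generalized_response(test_value, trained_value, width=100.0, max_response=1.0):
    """Hypothetical Gaussian generalization gradient.

    The conditioned response is strongest at the trained CS value and
    falls off smoothly as the test stimulus becomes less similar to it.
    `width` controls how broadly the response generalizes.
    """
    distance = test_value - trained_value
    return max_response * math.exp(-(distance ** 2) / (2 * width ** 2))


# Trained CS: a 1000 Hz tone (hypothetical); probe with increasingly different tones.
for hz in (1000, 1100, 1200, 1400):
    print(hz, "Hz ->", round(generalized_response(hz, 1000), 3))
```

The response is maximal for the original CS and declines steadily for tones that are less and less similar to it, which is the shape the German Shepherd example describes in everyday terms.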

Pavlov also discovered extinction. Recall from Introductory Psychology that if the CS is not followed by the US at least some of the time, then eventually the CR will no longer occur in response to the presentation of the CS. When the animal stops producing the CR in response to the CS, we say that extinction of the CR has occurred. After conditioning of the salivation response, when the dog salivates to the bell, it is really salivating in anticipation of coming food. Even if the food no longer arrives, the dog will still salivate to the bell for a while, but eventually it learns to stop responding to the bell (extinction of the conditioned response) once the bell no longer reliably predicts food. In extinction, the animal hasn't forgotten its previous experience of a predictive relationship between stimuli; it simply learns that the predictive relation no longer holds, so it uses this new information and stops responding to the old contingency between previously conditioned events. This is demonstrated by the fact that a single pairing of the old predictive contingency between CS and US is often sufficient to reinstate the extinguished response. In conditioning, the dog learned the rule that the bell means food is coming; in extinction, the dog learns that the rule has changed and the bell no longer predicts food, so naturally it stops salivating to the bell. That is extinction of the CR.

This makes good adaptive sense. Remember that in classical conditioning, the animal is learning a predictive relationship between two events in its environment (i.e. If CS occurs, then US is likely to follow, so the animal responds to the CS in anticipation of the expected US). However, if in fact the predictive relationship between those events in the environment no longer exists (that is, occurrence of CS no longer reliably predicts the future occurrence of the US), then it makes good (adaptive) sense that the animal will no longer respond to the CS since the CS no longer signals that the US will follow.

In fact, what is happening during conditioning and extinction is that the animal is tracking (or adjusting its mental model of) the predictive relationships among events in its world as those relationships dynamically change. Conditioning is really part of an animal's neural representation of its environment (not necessarily explicit or conscious), including the things in that environment and the relationships among them. Any individual instance of classical conditioning is just a slice in time of a continuous, ongoing, dynamic process within the animal's brain: modeling or representing the world and its changing contingencies, the predictive relations between stimuli that the animal must successfully "navigate" in order to adapt. Extinction, like acquisition of conditioned responses, is just one component of the animal's ability to track the predictive relationships between events in its environment and then to put that information to work guiding behavior. Similar dynamics apply to the second major type of conditioning, operant (or instrumental) conditioning.

Operant Conditioning

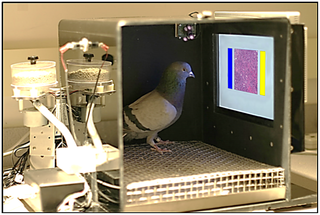

Operant conditioning, as you probably recall from your course in Introductory Psychology, is a second kind of conditioning in which the organism actively operates on the environment. Like classical conditioning, it involves learning a predictive relationship between events, but the events are different from those in classical conditioning. In operant or instrumental conditioning, the animal or human learns a predictive relationship between its own voluntary behavior and the outcome or effect of that behavior. In the best-known examples, a rat in a laboratory cage (called a “Skinner box”) learns to press a lever, or a bird learns to peck a display, to receive food.

Figure \(\PageIndex{6}\): An operant conditioning chamber. Pecking on the correct color will deliver food reinforcement to the pigeon (Image from Wikimedia Commons; File:The pigeons’ training environment.png; https://commons.wikimedia.org/wiki/F...nvironment.png; Creative Commons CC0 1.0 Universal Public Domain Dedication).

Operant conditioning research studies how the effects of a behavior influence the probability that it will occur again. For example, the effect of the rat’s lever-pressing behavior (i.e., receiving a food pellet) influences the probability that it will keep pressing the lever. According to Thorndike’s law of effect, when a behavior has a positive (satisfying) effect or consequence, it is likely to be repeated in the future. However, when a behavior has a negative (painful/unpleasant) consequence, it is less likely to be repeated in the future. In other words, the effect of a response determines its future probability. Effects that increase the frequency of behaviors are referred to as reinforcers, and effects that decrease their frequency are referred to as punishers.
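As a rough illustration of the law of effect, the sketch below nudges the probability of a behavior up after a reinforcer and down after a punisher. The update rule, the step size, and the starting probability are hypothetical choices made for illustration, not values from the research literature, and the sketch ignores everything else about the animal's situation.

```python
def apply_law_of_effect(p, consequence, step=0.2):
    """Return the updated probability of repeating a behavior.

    p: current probability of the behavior (between 0 and 1)
    consequence: "reinforcer" raises p, "punisher" lowers it; any other value
        (no consequence) leaves p unchanged in this simplified sketch
    step: hypothetical size of each adjustment
    """
    if consequence == "reinforcer":
        return p + step * (1.0 - p)  # move part of the way toward 1
    if consequence == "punisher":
        return p - step * p          # move part of the way toward 0
    return p


p_press = 0.1  # hypothetical starting probability of lever pressing
for _ in range(5):
    p_press = apply_law_of_effect(p_press, "reinforcer")  # each press earns a pellet
```

After five reinforced presses, `p_press` rises from 0.1 to roughly 0.71; feeding in `"punisher"` instead would push it toward zero.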

In general, operant conditioning involves an animal or human tracking the reinforcement contingencies, or dependencies, in its environment and exploiting them to its advantage. Clearly, operant conditioning is highly adaptive. It shapes the voluntary behavior of the organism to maximize reinforcement and minimize punishment, just as we would expect from the law of effect. The law of effect in turn depends upon reward circuits in the mesolimbic system (see the chapter on Psychoactive Drugs) and on circuits for pain and the emotional response to pain, which are distributed in many regions of the brain, including the somatosensory cortex, insula, amygdala, anterior cingulate cortex, and prefrontal cortex (see the chapter on Sensory processes). Reinforcers activate mesolimbic pleasure circuitry in the brain or reduce activity in pain circuits, giving the organism feedback about its actions. Reinforcers and activation of pleasure circuits tend to be associated with enhanced adaptation (food, a reinforcer to an animal deprived of food, enhances its chances of survival). Punishers activate circuitry for physical or emotional pain and tend to be associated with reduced adaptation and biological fitness (i.e., reduced chances of survival and reproduction). Physical pain is associated with potential tissue damage, while emotional pain is often associated with the loss of things or persons that one depends on or highly values, including romantic partners, financial or social status, etc. Voluntary behaviors that lead to positive consequences for the animal (increased pleasure; reinforcement) tend to be repeated (they increase in probability in the future). Voluntary behaviors that lead to nothing or to negative outcomes for the animal (a reduction in pleasure or the occurrence of pain) tend not to be repeated, but are avoided by the animal in the future.

In this way, by the law of effect, voluntary behavior in psychologically healthy individuals becomes molded into more and more adaptive patterns, so that animals and humans spend most of their time engaged in activities that improve adaptation and avoid activities that reduce it. By this means, learned voluntary behavior tends to serve successful behavioral adaptation.

This is a wonderful mechanism for assuring the adaptive organization of behavior in species which have behavioral capacities beyond rigidly genetically programmed behavior. In species such as fish, reptiles, amphibians, and many invertebrate species, the larger portion of behavioral adaptation is organized by information in the genes, honed over millions of years of evolution by natural selection. These reflexes and "instincts" generally do not rely very much, if at all, upon information acquired during the lifetime of the individual animal (i.e. learning), but instead upon information acquired over the evolutionary history of the species and stored in DNA.

Learned voluntary behavior is flexible but must also be directed into adaptive patterns by some principle, and that principle is the law of effect. The law of effect allows behavioral flexibility but also provides a mechanism for assuring that the animal (or human) learns adaptive behavior, behavior good for it and its genes, most of the time. "Voluntary" behaviors are not rigidly pre-formed by genetic information, but are organized in a more general way that allows for their modification by information gathered from the animal's current environment (experienced day to day and even moment to moment). This moment-to-moment information gathering, continuously modifying "voluntary" behavior into more and more adaptive patterns, is the essence of operant conditioning and its evolutionary significance. Without the law of effect, or some similar principle provided by natural selection, voluntary behavior would be chaotic, without direction, and could not be an instrument for adaptation to the environment. Behavior would degenerate into maladaptive forms, leading to the rapid demise of creatures with brains so ill-equipped for survival. The law of effect, built into the circuitry of human and animal brains by eons of natural selection, permits behavioral flexibility with an overall adaptive direction: an exquisite evolved mechanism for regulating behavior that is not under rigid genetic control.

Like classical conditioning, operant conditioning is part of the way an animal forms a mental model or neural representation of its environment and the predictive relationships among events in that environment, but, as noted above, the events are different from those in classical conditioning. In classical conditioning, it is the predictive relationship between two stimulus events that is learned. In operant conditioning, it is the predictive relationship between a voluntary response and its outcome or consequence that is learned. Prediction allows preparation for the future, even if the future is only moments away, and preparation for what is coming next improves chances of survival and reproduction (for example, classical conditioning of sexual reflexes gives a reproductive advantage in male chimpanzees competing for mating opportunities; operant conditioning of courtship behaviors in humans can improve reproductive success).

When we examine operant conditioning, we see that it bears some similarities to evolution by natural selection. In operant conditioning, voluntary responses by an animal that are successful usually are reproduced (repeated) and those that are unsuccessful (not reinforced) get weeded out (they are not repeated). In evolution by natural selection, genetic alternatives that are successful (lead to better adaptation) get reproduced (and appear in future generations) and those that are unsuccessful (do not lead to improved adaptation or are maladaptive) get weeded out (they are not replicated in future generations). In each case, a selection mechanism (natural selection or the law of effect) preserves some alternatives (genetic or behavioral, respectively) into the future, while eliminating others. In both cases, the result is improved adaptation.

Instrumental Responses Come Under Stimulus Control

As you know, the classic operant response in the laboratory is lever-pressing in rats, reinforced by food. However, things can be arranged so that lever-pressing produces pellets only when a particular stimulus is present. For example, lever-pressing can be reinforced only when a light in the Skinner box is turned on; when the light is off, lever-pressing releases no food. The rat soon learns to discriminate between the light-on and light-off conditions and presses the lever only in the presence of the light (responses in light-off are extinguished). In everyday life, think about waiting in the turn lane at a traffic light. Although you know that green means go, you turn only when you have the green arrow. In cases like these, the operant behavior is said to be under stimulus control. And, as the traffic light example suggests, stimulus control is probably the rule in the real world. We constantly monitor the environment for signals that tell us when a certain voluntary behavior is called for and when it is not. Will approaching someone to ask for a date be successful? We look for signs telling us whether the voluntary response of asking for a date is likely or unlikely to lead to reinforcement. If positive signs are not present, we may look for a better opportunity.
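The light-on/light-off discrimination can be sketched by letting a simulated rat keep a separate estimate of reinforcement for each combination of stimulus and response. This is a minimal illustration under assumptions: the class name, the learning rate, and the threshold are hypothetical, and real discrimination learning involves far more than a lookup table.

```python
from collections import defaultdict


class StimulusControlledResponder:
    """Hypothetical learner that tracks reinforcement per (stimulus, response) pair."""

    def __init__(self, learning_rate=0.3, threshold=0.5):
        self.learning_rate = learning_rate  # hypothetical value
        self.threshold = threshold          # hypothetical value
        self.values = defaultdict(float)    # (stimulus, response) -> expected reinforcement

    def train(self, stimulus, response, reinforced):
        key = (stimulus, response)
        target = 1.0 if reinforced else 0.0
        # Move the estimate part of the way toward the observed outcome
        self.values[key] += self.learning_rate * (target - self.values[key])

    def will_respond(self, stimulus, response="press"):
        # The same response is produced only under the stimulus in which it
        # has been followed by reinforcement
        return self.values[(stimulus, response)] >= self.threshold


rat = StimulusControlledResponder()
for _ in range(10):
    rat.train("light_on", "press", reinforced=True)    # pellet delivered
    rat.train("light_off", "press", reinforced=False)  # no pellet
```

After training, `rat.will_respond("light_on")` is true and `rat.will_respond("light_off")` is false: lever-pressing is now under the control of the light.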

The stimulus controlling the operant response is called a discriminative stimulus. The stimulus can “set the occasion” for the operant response: It sets the occasion for the response-reinforcer relationship. For example, a person who is reinforced for drinking alcohol or eating excessively learns these behaviors in the presence of certain stimuli—a pub, a set of friends, a restaurant, or possibly the couch in front of the TV. These stimuli can be associated with the reinforcer. In this way, classical and operant conditioning are always intertwined.

Stimulus-control techniques are widely used in the laboratory to study perception and other psychological processes in animals. For example, the rat would not be able to respond appropriately to light-on and light-off conditions if it could not see the light. Following this logic, experiments using stimulus-control methods have tested how well animals see colors, hear ultrasounds, and detect magnetic fields. That is, researchers pair these discriminative stimuli with responses the animals have already learned (such as pressing the lever). In this way, researchers can test whether the animals can learn to press the lever only when an ultrasound is played, for example.

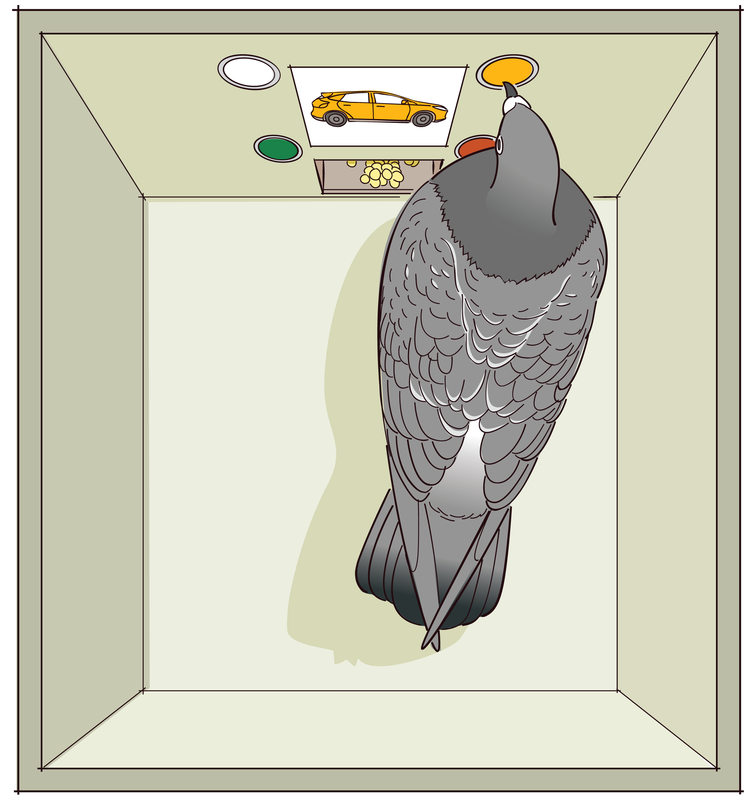

These methods can also be used to study “higher” cognitive processes. For example, pigeons can learn to peck at different buttons in a Skinner box when pictures of flowers, cars, chairs, or people are shown on a miniature TV screen (Wasserman, 1995). Pecking button 1 (and no other) is reinforced in the presence of a flower image, button 2 in the presence of a chair image, and so on. Pigeons can learn the discrimination readily, and, under the right conditions, will even peck the correct buttons associated with pictures of new flowers, cars, chairs, and people they have never seen before. The birds have learned to categorize the sets of stimuli. Stimulus-control methods can be used to study how such categorization is learned, and for biological psychologists these methods can be used along with specific brain lesions to investigate the brain areas involved in categorization in animals.

Operant Conditioning Involves Choice

Another thing to know about operant conditioning is that the response always requires choosing one behavior over others. The student who goes to the bar on Thursday night chooses to drink instead of staying at home and studying. The rat chooses to press the lever instead of sleeping or scratching its ear in the back of the box. The alternative behaviors are each associated with their own reinforcers. And the tendency to perform a particular action depends on both the reinforcers earned for it and the reinforcers earned for its alternatives.

Figure \(\PageIndex{8}\): Pigeon in Skinner Box.

To investigate this idea, choice has been studied in the Skinner box by making two levers available for the rat (or two buttons available for the pigeon), each of which has its own reinforcement or payoff rate. A thorough study of choice in situations like this has led to a rule called the quantitative law of effect (Herrnstein, 1970), which can be understood without going into quantitative detail: the law acknowledges the fact that the effects of reinforcing one behavior depend crucially on how much reinforcement is earned for the behavior’s alternatives. For example, if a pigeon learns that pecking one light yields two food pellets, whereas pecking the other yields only one, the pigeon will come to peck the first light much more often. However, what happens if the first light is more strenuous to reach than the second one? Will the energy cost outweigh the extra food? Or will the extra food be worth the work? In general, a given reinforcer will be less reinforcing if there are many alternative reinforcers in the environment. For this reason, alcohol, sex, or drugs may be less powerful reinforcers if the person’s environment is full of other sources of reinforcement, such as achievement at work or love from family members. (Image: from NOBA, Conditioning and Learning; https://nobaproject.com/modules/cond...g-and-learning)
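For readers who want the quantitative detail the paragraph above skips, Herrnstein's law is often written as a hyperbola, \(B = kR/(R + R_e)\), where \(B\) is the rate of the behavior, \(R\) is the rate of reinforcement it earns, \(R_e\) is the rate of reinforcement from all other sources, and \(k\) is the maximum possible response rate. The sketch below simply evaluates that equation; the numbers and units are hypothetical and chosen only to show the direction of the effect.

```python
def response_rate(r_target, r_alternatives, k=100.0):
    """Herrnstein's hyperbola: B = k * R / (R + R_e).

    r_target: reinforcers per hour earned by the behavior of interest (R)
    r_alternatives: reinforcers per hour from all other sources (R_e)
    k: hypothetical maximum response rate (responses per minute)
    """
    return k * r_target / (r_target + r_alternatives)


# Same reinforcement for the target behavior, different backgrounds (hypothetical numbers)
sparse = response_rate(r_target=30, r_alternatives=10)  # few alternative reinforcers
rich = response_rate(r_target=30, r_alternatives=90)    # many alternative reinforcers
```

Holding reinforcement for the target behavior constant, raising alternative reinforcement from 10 to 90 per hour drops the predicted response rate from 75 to 25 responses per minute. This is the point made above: the same reinforcer is less powerful in an environment rich in other sources of reinforcement.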

An important distinction of operant conditioning is that it provides a method for studying how consequences influence “voluntary” behavior. As discussed above, the rat’s decision to press the lever is voluntary, in the sense that the rat is free to make and repeat that response whenever it wants. Classical conditioning, on the other hand, is just the opposite—depending instead on “involuntary” behavior (e.g., the dog doesn’t choose to drool; it just does). So, whereas the rat must actively participate and perform some kind of behavior to attain its reward, the dog in Pavlov’s experiment is a passive participant. One of the lessons of operant conditioning research, then, is that voluntary behavior is strongly influenced by its consequences (recall the law of effect).

Figure \(\PageIndex{9}\): Basic elements of classical and instrumental conditioning. The two types of learning differ in what is learned. In classical conditioning, the animal has learned to associate a stimulus with an adaptively significant event, food in this case. In operant conditioning, the animal has learned to associate a voluntary behavior, pressing the lever, with an adaptively significant event, food. (Image and caption from M. Boulton, NOBA, Conditioning and Learning; courtesy of Bernard W. Balleine; https://nobaproject.com/modules/cond...g-and-learning).

The two types of conditioning occur continuously throughout our lives. It has been said that “much like the laws of gravity, the laws of learning are always in effect” (Spreat & Spreat, 1982).

Cognition in Instrumental Learning

Modern research also indicates that reinforcers do more than merely strengthen or “stamp in” the behaviors they are a consequence of, as was Thorndike’s original view. Instead, animals learn about the specific consequences of each behavior, and will perform a behavior depending on how much they currently want—or “value”—its consequence. This idea is best illustrated by a phenomenon called the reinforcer devaluation effect (see Colwill & Rescorla, 1986). A rat is first trained to perform two instrumental actions (e.g., pressing a lever on the left, and on the right), each paired with a different reinforcer (e.g., a sweet sucrose solution, and a food pellet). At the end of this training, the rat tends to press both levers, alternating between the sucrose solution and the food pellet. In a second phase, one of the reinforcers (e.g., the sucrose) is then separately paired with illness. This conditions a taste aversion to the sucrose. In a final test, the rat is returned to the Skinner box and allowed to press either lever freely. No reinforcers are presented during this test (i.e., no sucrose or food comes from pressing the levers), so behavior during testing can only result from the rat’s memory of what it has learned earlier. Importantly here, the rat chooses not to perform the response that once produced the reinforcer that it now has an aversion to (e.g., it won’t press the sucrose lever). This means that the rat has learned and remembered the reinforcer associated with each response, and can combine that knowledge with the knowledge that the reinforcer is now “bad.” Reinforcers do not merely stamp in responses; response varies with how much the rat wants/doesn’t want a reinforcer. As described above, in operant conditioning, the animal tracks the changing reinforcement and punishment contingencies in its environment, as part of a dynamic mental model or neural representation of its world, and it adjusts its behavior accordingly.
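The logic of the devaluation test can be sketched by contrasting two ways of storing what was learned. In the hypothetical sketch below, one table stores only how strongly each response was "stamped in," while the other stores which outcome each response produced plus the current value of each outcome. The labels and numeric values are illustrative assumptions, not data from Colwill and Rescorla (1986).

```python
# Phase 1: each response is remembered together with its specific outcome
response_outcome = {"left_lever": "sucrose", "right_lever": "pellet"}
outcome_value = {"sucrose": 1.0, "pellet": 1.0}  # both outcomes currently valued

# A pure "stamp-in" account would store only response strengths
stamped_in_strength = {"left_lever": 1.0, "right_lever": 1.0}


def choose_by_outcome_value(response_outcome, outcome_value):
    """Pick the response whose remembered outcome is currently valued most."""
    return max(response_outcome, key=lambda r: outcome_value[response_outcome[r]])


# Phase 2: sucrose is paired with illness, so its value becomes negative
outcome_value["sucrose"] = -1.0

# Phase 3: test with no reinforcers delivered; choice relies on memory alone
choice = choose_by_outcome_value(response_outcome, outcome_value)

# The stamp-in table is untouched by devaluation, so it predicts no change in choice
unchanged = stamped_in_strength["left_lever"] == stamped_in_strength["right_lever"]
```

Only the outcome-based representation predicts the observed result: `choice` is `"right_lever"`, the response whose remembered outcome is still valued, even though no reinforcer is delivered during the test.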

Habituation, classical conditioning, and operant conditioning are just three types of learning. Each contributes to adaptation and increases biological fitness (chances of solving the problems associated with survival and reproduction). There are many other types of learning as well, often quite specialized to perform a particular biological function. These specialized forms of learning, also known as adaptive specializations of learning, have been studied mostly by ethologists and behavioral biologists, but biological psychologists are becoming increasingly interested in such forms of learning and their importance. For instance, psychologists have studied one of these specialized forms of learning, taste aversion learning, extensively. In addition to this form, we will now also examine adaptive specializations of learning involved in bird navigation by the stars during migration, bee navigation by the sun, and acquisition of bird song, which some researchers have compared to human language acquisition.

Specialized Forms of Learning (Adaptive Specializations of Learning)

"Biological mechanisms are adapted to the exigencies of the functions they serve. The function of memory is to carry information forward in time. The function of learning is to extract from experience properties of the environment likely to be useful in the determination of future behavior." (Gallistel, 2003, p. 259).

"One cannot use a hemoglobin molecule as the first stage in light transduction and one cannot use a rhodopsin molecule as an oxygen carrier, any more than one can see with an ear or hear with an eye. Adaptive specialization of mechanism is so ubiquitous and so obvious in biology, . . . it is odd but true that most past and contemporary theorizing about learning does not assume that learning mechanisms are adaptively specialized for the solution of particular kinds of problems. Most theorizing assumes that there is a general purpose learning process in the brain, a process adapted only to solving the problem of learning. . . . , this is equivalent to assuming that there is a general purpose sensory organ, which solves the problem of sensing." (Gallistel, 2000, p. 1179).

As the quotes above imply, learning, the acquisition of information during the lifetime of the individual animal, comes in many different forms. Many specialized forms of learning are highly specific for the solution of specific adaptive problems (problem domains) often found in only one or a few species. These types of learning probably involve specialized neural circuits, organized for their particular specialized form of learning by natural selection.

A familiar sight is ducklings walking or swimming after their mothers. Hatchling ducks recognize the first adult they see, usually their mother, and form a bond with her that induces them to follow her. This type of non-associative learning is known as imprinting. Imprinting is a form of learning that occurs at a particular age or life stage and is very important in the maturation of these animals, because it keeps them near their mother, where they are protected, greatly increasing their chances of survival. Imprinting provides a powerful example of biologically prepared learning in response to particular genetically determined cues. In the case of imprinting, the duckling becomes imprinted on the first moving object larger than itself that it sees after hatching. Because of this, if newly hatched ducklings see a human before they see their mother, they will imprint on the human and follow him or her in just the same manner as they would follow their real mother. Because this form of learning is biologically prepared, some cues that trigger the duckling to learn to follow its mother (or a person) are innately programmed into the duckling's genetically controlled brain circuitry, while the details of the imprinting object (usually their real mother) are learned from the duckling's initial experience with a moving object larger than itself (again, most likely its real mother). This learning is very rapid because it is genetically (biologically) facilitated, and it is also very durable, with effects on behavior lasting well into adulthood. This form of learning illustrates well the general principle that all learning relies upon an underpinning of genetic information about some features of the learning situation, while the details are filled in by learning through experience. For example, genetic information programs the duckling's brain to follow the first moving thing larger than itself that it sees after hatching; the details of what that object looks like are added to the bird's memory by learning during imprinting.
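The division of labor between innate cues and learned details can be sketched as a simple rule. In this hypothetical sketch, the genetically specified part is the test "moving and larger than me, seen within a sensitive period," and the learned part is whatever the first object passing that test looks like. The function name, the data format, and the 36-hour window are assumptions for illustration only, not measured values.

```python
def imprint(observations, own_size, sensitive_period_hours=36):
    """Return the remembered features of the imprinting object, or None.

    observations: time-ordered list of dicts with keys
        'age_hours', 'moving', 'size', 'features'
    own_size: the duckling's own size (same units as 'size')
    sensitive_period_hours: hypothetical length of the learning window
    """
    for obs in observations:
        if obs["age_hours"] > sensitive_period_hours:
            return None  # window closed before a suitable object appeared
        # Innate cue, specified in advance: a moving object larger than oneself
        if obs["moving"] and obs["size"] > own_size:
            # Learned detail: what this particular object looks like
            return obs["features"]
    return None


seen = [
    {"age_hours": 2, "moving": False, "size": 50, "features": "fence post"},
    {"age_hours": 3, "moving": True, "size": 1, "features": "beetle"},
    {"age_hours": 5, "moving": True, "size": 170, "features": "human in a blue coat"},
    {"age_hours": 6, "moving": True, "size": 40, "features": "mother duck"},
]
imprinted_on = imprint(seen, own_size=10)
```

Here the duckling imprints on the human, because the human is the first object that satisfies the innate cue; the still fence post and the small beetle do not.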

Taste Aversion Learning

Taste aversion learning is a specialized form of learning that helps omnivorous animals (those that eat a wide range of foods) quickly learn to avoid eating substances that might be poisonous. In rats, coyotes, and humans, for example, eating a new food that is later followed by sickness causes avoidance of that food in the future. Consider food poisoning: having fish for dinner may not normally be something to be concerned about (i.e., it is a “neutral stimulus”), but if it makes you sick, you will now likely associate that neutral stimulus (the fish) with the adaptively significant event of getting sick.

This specialized form of learning is genetically or "biologically prepared," so that taste-illness associations are easily formed from a single pairing of a taste with illness, even if taste and illness are separated by intervals ranging from 10-15 minutes to several hours. Colors of food, or sounds present when the food is consumed, are not readily associated with illness; taste and illness are. This is known as "belongingness," an example of "biological preparedness," in which learning has specialized properties as a result of genetic evolution: in this case, only taste and illness can be readily associated, not visual or auditory stimuli and illness (Garcia and Koelling, 1966; Seligman, 1971). A second difference from ordinary classical conditioning is that taste aversion learning requires only a single pairing of taste and illness, whereas classical conditioning usually requires many pairings of the CS and US. The usual requirements for multiple pairings and close temporal contiguity between stimuli don't apply in learned taste aversion. This makes adaptive sense, because in the wild, sickness from a toxic new food won't occur immediately, but only after the food has had time to pass into the digestive system and be absorbed. And when it comes to poisons, you may not get a second chance to learn to avoid that substance in the future.

This genetically prepared form of learning evolved over generations of natural selection in omnivorous species, which consume a large variety of foods. Species that are very specialized feeders, such as koalas, which eat eucalyptus leaves almost exclusively, or baleen whales, which filter ocean water for small organisms, have not evolved taste aversion learning. They simply don't need it, because they rarely, if ever, encounter novel foods that could pose a threat.

Adaptively Specialized Learning for Navigation and Song Acquisition

Another example of a specialized form of learning that evolved to solve a specific adaptive problem is the learning of the night sky by a species of bird called the indigo bunting. Have you ever noticed that the stars appear to rotate throughout the night around a point in the sky, the celestial pole, close to the north star? Indigo buntings migrate south for the winter and then return north when temperatures warm. Experiments have shown that they use the stars, specifically the pole star, Polaris (toward which the two "pointer" stars of the Big Dipper point), and the circumpolar stars within about 35 degrees of the center of rotation of the night sky, to guide them on their journey of several thousand kilometers. But the Earth's environment presents a special problem. Because the Earth's axis slowly wobbles, the celestial pole shifts, and the star that marks north changes over thousands of years. A different star and star pattern will then indicate north and become the beacon that guides the migration of indigo buntings.

These birds are genetically programmed to migrate and to use the night sky to guide them. But the specific constellations and star patterns that guide them must be learned, because celestial north and the stars that mark it change too frequently in evolutionary time to be genetically encoded into their brains by evolution. The north star, whether Polaris or another star thousands of years from now, is the only star that appears approximately stationary throughout the night, while all the other stars appear to turn around it as the night progresses. Indigo bunting nestlings essentially sit up at night and watch the night sky from their nests. Because of a genetically programmed set of brain circuits, they note the star that barely moves, picking it out from all the others, which appear to move throughout the night (the movement, of course, is only apparent: the stars are relatively fixed, and what is moving is the Earth rotating under them). By learning which star is essentially stationary in the night sky and the patterns of stars around it, they learn the correct star and star patterns to use for navigation when it comes time to migrate. The time for buntings to learn the night sky is limited: if deprived of exposure to the night sky until later in life, they cannot learn it as adults (Gallistel, et al., 1991, p. 16).
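The computational problem the nestlings solve (find the one star that barely moves) can be sketched in a few lines. This is an illustration of the problem, not a model of the bunting's brain: the sky is flattened into a 2-D chart, the star coordinates are illustrative (Polaris is placed less than one degree from the pole; `star_B` and `star_C` are hypothetical), and the sky is assumed to turn at about 15 degrees per hour (360 degrees in roughly a day). The selection step uses only the observed positions, not the location of the pole.

```python
import math


def rotate_about(point, center, angle_rad):
    """Rotate a 2-D point counterclockwise about a center by angle_rad."""
    dx, dy = point[0] - center[0], point[1] - center[1]
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (center[0] + c * dx - s * dy, center[1] + s * dx + c * dy)


def observe_night(stars, pole, hours=8, degrees_per_hour=15.0):
    """Simulate hourly sightings as the sky appears to turn about the pole."""
    return {
        name: [rotate_about(pos, pole, math.radians(degrees_per_hour * h))
               for h in range(hours + 1)]
        for name, pos in stars.items()
    }


def least_moving_star(sightings):
    """Pick the star with the shortest observed track, using sightings alone."""
    def path_length(track):
        return sum(math.dist(a, b) for a, b in zip(track, track[1:]))
    return min(sightings, key=lambda name: path_length(sightings[name]))


# Flat-chart positions in degrees from the pole (illustrative values)
stars = {"Polaris": (0.7, 0.0), "star_B": (16.0, 0.0), "star_C": (0.0, 28.0)}
sightings = observe_night(stars, pole=(0.0, 0.0))
print(least_moving_star(sightings))  # Polaris
```

Because every star sweeps the same angle each hour, the distance it travels grows with its distance from the pole, so the star nearest the pole traces the shortest path whichever star happens to occupy that position in a given era.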

Two other examples of specialized forms of learning are of interest here. Bees learn to navigate by the position of the sun, which changes with the date, time of day, and place on the Earth's surface. When moved to a new location, they learn to update their navigation to compensate for the change in location. This learning is strongly guided by innate, genetically evolved information stored in the bee brain (Dyer & Dickson, 1994; Towne, 2008).

One other example is of special interest: song learning in songbirds. Birds of a given species generally learn only the song of their own species, illustrating genetic constraints on what songs they can learn (Gallistel, et al., 1991, p. 21). White-crowned sparrows show variations in their song depending on their geographical location, akin to dialects in human language. Experiments have shown that young white-crowned sparrows learn the local dialect through exposure to it during a critical period for song learning. A critical period during which learning must occur indicates another genetic constraint on learning and is similar to the critical periods evident in imprinting and in learning of the night sky by indigo buntings, and to a sensitive period for language acquisition in humans prior to adolescence.

The learning involved in the above examples is not classical or operant conditioning; each is a very specialized form of learning in one particular species for the solution of a specific adaptive problem (a specific domain). Note that the learned information supplements and interacts with information in the genes, guiding the animal to attend to and readily learn highly specific information. In the case of the buntings, in a sense, the birds "imprint" on the correct stars for navigation later in life.

These examples illustrate a general principle that learning does not occur without genetically evolved information guiding and facilitating the learning in various ways. Note that in each of the examples above, genetically internalized, implicit “knowledge” about invariants in the learning situation pre-structures the learning, facilitating the capture of the problem-relevant details too variable and short-term to be captured directly by natural selection in genetic mechanisms (Koenigshofer, 2017).

This principle applies not only to highly specialized forms of learning such as that in indigo buntings or the other examples above, but also to more general forms of learning such as classical conditioning (Chiappe & MacDonald, 2005; Gallistel, 1992; Koenigshofer, 2017) and causal learning in children (Koenigshofer, 2017; Walker and Gopnik, 2014), each of which involves innate knowledge about general features of the learning situation. For example, in the case of causal learning, children have an innate predisposition to understand cause-effect as a general property of the world that guides their learning of specific cause-effect relations in their particular environment (Koenigshofer, 2017). Furthermore, several genetically internalized “default assumptions” built into conditioning and causal learning mechanisms by natural selection are that “causes are reliable predictors of their effects, that causes precede their effects, . . . that in general, causes tend to occur in close temporal proximity to their effects (Revusky, 1985; Staddon, 1988) . . . and the temporal contiguity of cause and effect is a general feature of the world” (Chiappe and MacDonald, 2005).

In addition, from this perspective, the fact that conditioning (except in taste aversion learning) generally requires multiple pairings of CS and US, or of operant response and its effect, is not a shortcoming or a “weakness” of conditioning processes but rather may be an evolved adaptive feature of conditioning fashioned by natural selection to prevent formation of potentially spurious (and therefore, maladaptive) associations (Koenigshofer, 2017).
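A back-of-the-envelope calculation shows why requiring repeated pairings guards against spurious associations. Suppose, purely as a hypothetical assumption, that some unrelated event happens to follow the CS by chance with probability \(p\) on any given trial, independently across trials. The chance that this coincidence occurs on every one of \(k\) pairings is

\[ P(\text{coincidence on all } k \text{ pairings}) = p^{k} \]

With \(p = 0.2\), a single pairing is a coincidence 20% of the time, but three consecutive pairings happen by chance only \(0.2^{3} = 0.008\), or 0.8% of the time. A genuinely predictive relationship, by contrast, keeps producing pairings, so a rule that waits for repetition filters out most coincidences at the cost of slower learning.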

Gallistel (1992) argues that even classical conditioning is a specialized form of learning that performs important biological functions. According to Gallistel, classical conditioning was shaped by natural selection to discover "what predicts what" in the environment; in mathematical terms, this is a problem in multivariate non-stationary time series analysis. It is a time series problem because what is being learned is the temporal dependence or contingency of one event on another; multivariate because many events (variables) may or may not predict the US; and non-stationary because the contingencies between CS and US often change over time (Gallistel, 2000, p. 1186).
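To make the "what predicts what" problem concrete, one common way to score how well a cue predicts an outcome is the contingency measure \(\Delta P = P(\text{US} \mid \text{cue}) - P(\text{US} \mid \text{no cue})\). This measure is not taken from Gallistel's analysis, which is more elaborate; it is used here only as an illustrative sketch, and the trial data are hypothetical. Scoring several cues shows the multivariate side of the problem, and scoring two separate blocks of trials shows the non-stationary side: the same cue can predict the US in one period and not in another.

```python
def delta_p(trials, cue):
    """Contingency of the US on a cue: P(US | cue) - P(US | no cue).

    trials: list of (cues_present, us_occurred) pairs, where cues_present is a set
    Returns None if the cue was always or never present (contingency undefined).
    """
    with_cue = [us for cues, us in trials if cue in cues]
    without_cue = [us for cues, us in trials if cue not in cues]
    if not with_cue or not without_cue:
        return None
    return sum(with_cue) / len(with_cue) - sum(without_cue) / len(without_cue)


# Hypothetical early block: the bell predicts food, the light does not
early = [({"bell"}, True)] * 4 + [({"light"}, False)] * 4 + [(set(), False)] * 2
# Hypothetical later block: the contingencies have reversed
late = [({"bell"}, False)] * 4 + [({"light"}, True)] * 4 + [(set(), False)] * 2

for block_name, block in (("early", early), ("late", late)):
    scores = {cue: delta_p(block, cue) for cue in ("bell", "light")}
    print(block_name, {cue: round(score, 2) for cue, score in scores.items()})
```

In the early block the bell scores 1.0 and the light about -0.67 (the light signals that food will not come); in the late block the scores are reversed, which is the kind of change an animal tracking a non-stationary world has to detect.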

Observational Learning

Observational learning is learning by watching the behavior of others. It is obviously an extremely important form of learning in humans, but it is also "an ability common to primates, birds, rodents, and insects" (Dawson et al., 2013). It plays a crucial role in human social learning. Imagine a child walking up to a group of children playing a game on the playground. The game looks fun, but it is new and unfamiliar. Rather than joining the game immediately, the child opts to sit back and watch the other children play a round or two. Observing the others, the child takes note of the ways in which they behave while playing the game. By watching the behavior of the other kids, the child can figure out the rules of the game and even some strategies for doing well at the game.

Observational learning is a component of Albert Bandura’s Social Learning Theory (Bandura, 1977), which posits that individuals can learn novel responses via observation of key others’ behaviors. Observational learning does not necessarily require reinforcement, but instead hinges on the presence of others, referred to as social models. Social models in humans are typically of higher status or authority compared to the observer, examples of which include parents, teachers, and older siblings. In the example above, the children who already know how to play the game could be thought of as being authorities—and are therefore social models—even though they are the same age as the observer. By observing how the social models behave, an individual is able to learn how to act in a certain situation. Other examples of observational learning might include a child learning to place her napkin in her lap by watching her parents at the dinner table, or a customer learning where to find the ketchup and mustard after observing other customers at a hot dog stand.

Bandura theorizes that the observational learning process consists of four parts. The first is attention: quite simply, one must pay attention to what s/he is observing in order to learn. The second part is retention: to learn, one must be able to retain the observed behavior in memory. The third part, usually called reproduction (and sometimes initiation), acknowledges that the learner must be able to execute the learned behavior. Lastly, the observer must possess the motivation to engage in observational learning. In our vignette, the child must want to learn how to play the game in order to properly engage in observational learning. Bandura, Ross, & Ross (1963) demonstrated that children who observed aggression in adults showed less aggressive behavior if they witnessed the adult model receive punishment for their aggression. Bandura referred to this process as vicarious reinforcement, as the children did not experience the reinforcement or punishment directly, yet were still influenced by observing it.

Observation Learning and Cultural Transmission Improve Biological Fitness in Non-human Animals

Orangutans in protected preserves have been seen copying humans washing clothes in a river. After watching humans engage in this behavior, one of the animals took pieces of clothing from a pile of clothes to be washed and engaged in clothes washing behavior in the river, imitating behavior it had recently observed in humans. Orangutans also use observation learning to copy behaviors of other orangutans. Observation learning has also been reported in wild and captive chimpanzees and in other primates such as Japanese macaque monkeys. One of the most thoroughly studied examples of observation learning in animals is in Japanese macaques.

There is a large troop of macaques (Old World monkeys; see Chapter 3) that lives near the beaches of Koshima Island in Japan. Researchers were interested to see how these animals would respond if the researchers scattered novel foods such as wheat grain on the sand. At first, the animals meticulously picked the grain out of the sand, laboriously cleaning it one grain at a time. However, researchers reported that after a while one Japanese macaque in the troop invented an efficient method for cleaning the grain: it scooped up handfuls of wheat grain and sand and threw the mixture into the water. The wheat grains floated while the sand sank. The monkey then scooped up the clean grain floating on the surface of the water and ate its fill, repeating this novel grain-cleaning behavior again and again. Although this showed impressive intelligence and inventiveness on the part of this monkey, just as significant was the fact that other members of the troop observed this behavior and copied it. Through observation learning, most of the troop learned this innovative method of separating grain from sand. As time passed, youngsters observed older members engaging in this learned behavior and copied it, so that the behavior was passed down over several generations (Schofield et al., 2018). This example illustrates one of the most important biological functions of observation learning: it is a primary mechanism for the cultural transmission of learned behavior across generations, not only in animals like the macaques, but even more so in humans. The cultural transmission of learned behavioral adaptations from generation to generation produces "cumulative culture . . . characterized as a ‘ratchet,’ yielding progressive innovation and improvement over generations (Tomasello et al. 1993). The process can be seen as repeated inventiveness that leads to incrementally better adaptation; that is, more efficient, secure, . . . survival and reproduction" (Schofield et al., 2018, p. 113). Efficient cultural transmission of successful learned behavior is enormously powerful in boosting biological fitness, and it accounts for those features of human life, such as science, technology, and government, that most distinguish us from all other species on the planet (Koenigshofer, 2011, 2016).

Cognitive Learning

Classical and operant conditioning are only two of the ways in which humans and other intelligent animals learn. Some primates, including humans, are able to learn by imitating the behavior of others and by taking instructions. The development of complex language by humans has made cognitive learning (a change in knowledge as a result of experience or mental manipulation of existing knowledge) the most prominent method of human learning. In fact, cognitive learning is how you are learning right now: by reading this information, you are experiencing a change in your knowledge. Humans, and probably some non-human animals, can form mental images of objects or organisms and imagine changes to them or behaviors by them as they anticipate the consequences. Cognitive learning is so powerful that it can be used to understand conditioning (discussed in the previous modules), whereas conditioning alone cannot help someone learn about cognition.

Classic work on cognitive learning was done by Wolfgang Köhler with chimpanzees. He concluded that these animals were capable of abstract thought because they could solve a novel problem. When a banana was hung in their cage too high for them to reach, with several boxes placed randomly on the floor, some of the chimps stacked the boxes one on top of the other, climbed on top of them, and got the banana. This implies that they could visualize the result of stacking the boxes before they had performed the action. This type of learning is much more powerful and versatile than conditioning.

Cognitive learning is not limited to primates, although they are the most efficient in using it. Maze-running experiments done with rats in the 1920s were the first to show cognitive skills in a simple mammal, the rat. The motivation for the animals to work their way through the maze was a piece of food at its end. In these studies, the rats in every group were run in one trial per day. Group I rats had food available each day on completion of the run. Group II rats were not fed in the maze for the first six days; subsequent runs were then done with food for several days. Group III rats had food available on the third day and every day thereafter. The control rats, Group I, learned quickly, figuring out how to run the maze in seven days. Group III did not learn much during the two days without food, but rapidly caught up to the control group once given the food reward. Group II learned very slowly during the six days with no reward to motivate them. They did not begin to catch up to the control group until the day food was given, and it then took them two days longer to learn the maze. These results suggest that although the rats in Groups II and III received no reward during the first several days of the experiment, they were still learning. The performance of Group III is particularly telling. Although these rats received no food reward in the maze for the first two days, once food reward was added on day 3 their maze performance rapidly caught up with that of the control group (Group I), which had received food reward in the maze every day from the start of the experiment. This showed that even in the absence of food reward, the rats were learning about the maze. The finding was important because at that time many psychologists believed that learning could occur only in the presence of reinforcement.

This experiment showed that learning information about a maze, that is, gaining knowledge about it, can occur even in the absence of reinforcement. This phenomenon is known as "latent learning." The learning that has taken place remains hidden, or latent, in behavior until a motivating factor such as food reward stimulates action that reveals learning that had previously not been apparent. In this case, the latent learning of the maze in Groups II and III became apparent once food reward was made available to these groups on day 7 and day 3, respectively. Cognitive learning involves the acquisition of knowledge, here about a maze, and in this example it took place in rats without food reward. Introducing food as a motivator revealed the learning that had taken place during the first few days of the experiment.

Clearly this type of learning is different from conditioning. Although one might be tempted to believe that the rats simply learned a conditioned series of right and left turns, Edward C. Tolman later provided evidence that the rats were forming a representation of the maze in their minds, which he called a “cognitive map.” This was an early demonstration of the power of cognitive learning and showed that these abilities are not limited to humans. Research discussed more fully in Chapter 14 indicates that the visual, motor, and parietal cortical areas, as well as the hippocampus, are involved in the ability to form cognitive maps and engage in cognitive learning.

Key Points

- Cognitive learning involves change in knowledge as a result of experience and the mental manipulation of information; it is a great deal more powerful and flexible than either operant or classical conditioning.

- The development of complex language by humans has made cognitive learning the most prominent method of human learning.

- Cognitive learning is not limited to primates; rats, too, can build cognitive maps, which are mental representations used to acquire, code, store, recall, and decode information about the environment.

Key Terms

- cognitive map: a mental representation that an organism uses to acquire, code, store, recall, and decode information about the relative locations and attributes of phenomena in its everyday environment

- cognitive learning: the process by which one acquires knowledge or skill in cognitive processes, which include reasoning, abstract thinking, and problem solving

Outside Resources

- Article: Rescorla, R. A. (1988). Pavlovian conditioning: It’s not what you think it is. American Psychologist, 43, 151–160.

- Book: Bouton, M. E. (2007). Learning and behavior: A contemporary synthesis. Sunderland, MA: Sinauer Associates.

- Book chapter: Bouton, M. E. (2009). Learning theory. In B. J. Sadock, V. A. Sadock, & P. Ruiz (Eds.), Kaplan & Sadock’s comprehensive textbook of psychiatry (9th ed., Vol. 1, pp. 647–658). New York, NY: Lippincott Williams & Wilkins.

- Book: Domjan, M. (2010). The principles of learning and behavior (6th ed.). Belmont, CA: Wadsworth.

- Video: Albert Bandura discusses the Bobo Doll Experiment.

Vocabulary

- Blocking

- In classical conditioning, the finding that no conditioning occurs to a stimulus if it is combined with a previously conditioned stimulus during conditioning trials. Suggests that information, surprise value, or prediction error is important in conditioning.

- Categorize

- To sort or arrange different items into classes or categories.

- Classical conditioning

- The procedure in which an initially neutral stimulus (the conditioned stimulus, or CS) is paired with an unconditioned stimulus (or US). The result is that the conditioned stimulus begins to elicit a conditioned response (CR). Classical conditioning is nowadays considered important both as a behavioral phenomenon and as a method to study simple associative learning. Same as Pavlovian conditioning. Classical conditioning involves learning the predictive relationship between two stimulus events.

- Conditioned compensatory response

- In classical conditioning, a conditioned response that opposes the unconditioned response rather than duplicating it. It functions to reduce the strength of the unconditioned response. Often seen in conditioning when drugs are used as unconditioned stimuli.

- Conditioned or conditional response (CR)

- The response that is elicited by the conditioned stimulus after classical conditioning has taken place. Pavlov used the term conditional response, the response conditional upon prior learning.

- Conditioned or conditional stimulus (CS)

- An initially neutral stimulus (like a bell, light, or tone) that elicits a conditioned/conditional response after it has been associated with an unconditioned/unconditional stimulus. The CS comes to act as a signal predicting the coming occurrence of the US. The US (unconditioned stimulus) is a stimulus whose effect is not conditional upon prior learning; it naturally elicits the unconditioned response, which is likewise not conditional upon prior learning and is often a reflex such as salivation, as in Pavlov's original experiments with dogs.

- Context

- Stimuli that are in the background whenever learning occurs. For instance, the Skinner box or room in which learning takes place is the classic example of a context. However, “context” can also be provided by internal stimuli, such as the sensory effects of drugs (e.g., being under the influence of alcohol has stimulus properties that provide a context) and mood states (e.g., being happy or sad). It can also be provided by a specific period in time—the passage of time is sometimes said to change the “temporal context.”

- Discriminative stimulus

- In operant conditioning, a stimulus that signals whether the response will be reinforced. It is said to “set the occasion” for the operant response.

- Extinction