7.3: Hearing


This page is a draft and under active development. Please forward any questions, comments, and/or feedback to the ASCCC OERI (oeri@asccc.org).


Learning Objectives

- Label key structures of the ear, identify their functions, and describe the role they play in hearing.

- Explain how we encode and perceive pitch.

- Explain how we localize sound.

- Describe how hearing and the vestibular system are associated.

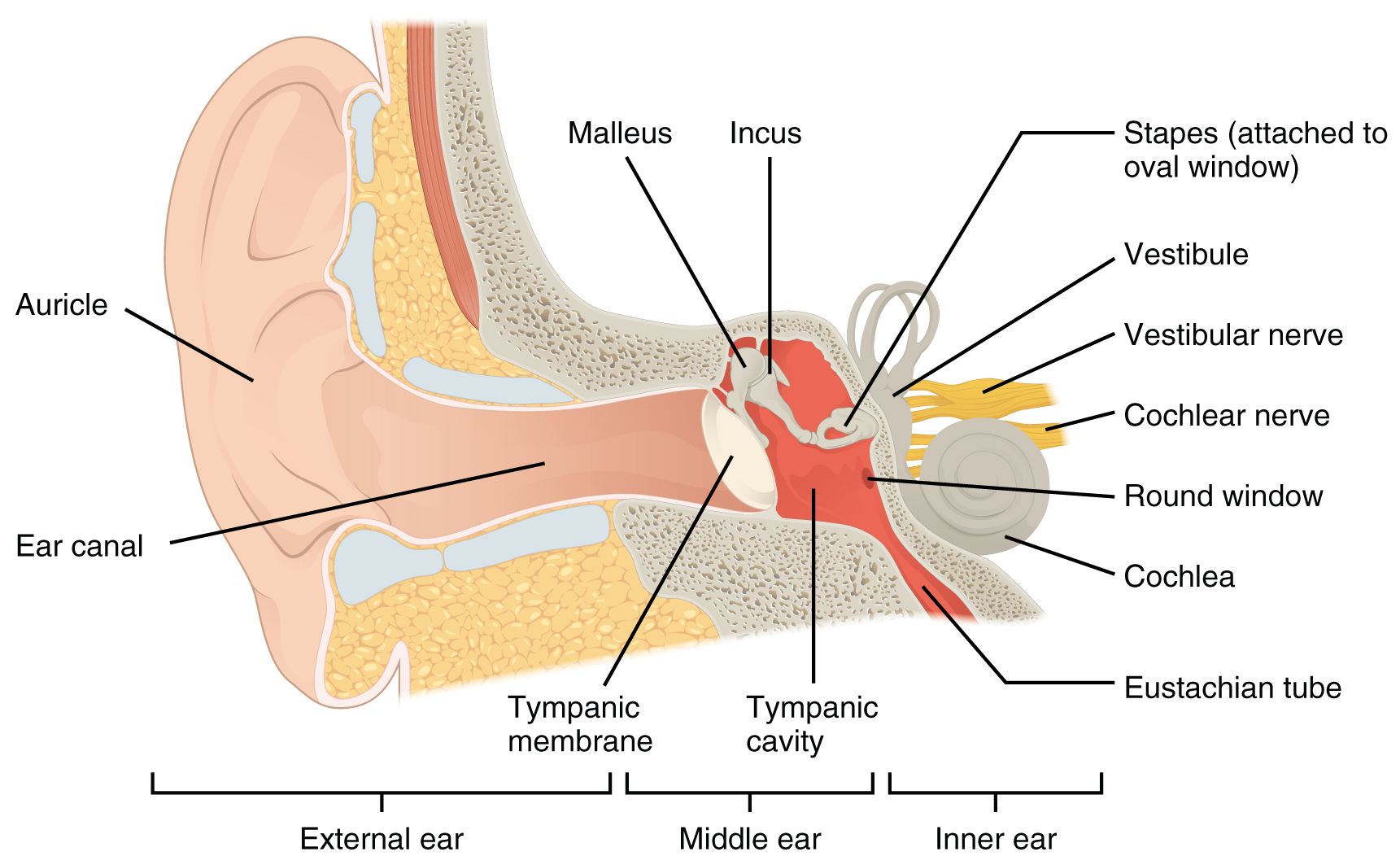

This section provides an overview of the basic anatomy and function of the auditory system, how we perceive pitch, and how we know where a sound is coming from. The human ear can detect a wide variety of sounds and distinguish among them. Its complex anatomy can be divided into three sections: the outer ear, the middle ear, and the inner ear (see Figure \(\PageIndex{1}\)). The auditory system converts sound waves into neural impulses that are sent to the brain, where they are integrated with our past experiences to help us make sense of what we hear. Together, these anatomical structures allow us to hear the sounds of nature, appreciate music, and use language to communicate with others who speak the same language.

The human ear is most responsive to sounds in the same frequency range as the human voice. This is why parents, and mothers in particular, can pick out their own child's voice among other children's voices, and why we can often identify a person from the sound of their voice without seeing them. The complex system of the ear allows us to process sounds almost instantly.

Unlike light waves, which can travel through a vacuum, sound waves need a medium such as air: they are transmitted as molecules bump into one another, and these waves allow us to identify the source of the sounds we encounter. Sound waves vary in frequency; low-frequency sounds are perceived as lower pitched and high-frequency sounds as higher pitched. Several theories have been proposed to explain how we perceive pitch from these differences in frequency.

The temporal theory of pitch perception asserts that frequency is coded by the activity level of a sensory neuron. This would mean that a given hair cell would fire action potentials related to the frequency of the sound wave. While this is a very intuitive explanation, we detect such a broad range of frequencies (20–20,000 Hz) that the frequency of action potentials fired by hair cells cannot account for the entire range. Because of properties related to sodium channels on the neuronal membrane that are involved in action potentials, there is a point at which a cell cannot fire any faster (Shamma, 2001).
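To make the rate-ceiling argument concrete, the minimal sketch below assumes that a neuron can fire at most one action potential per absolute refractory period. The 1 ms refractory period (a ceiling of 1,000 spikes per second) is an illustrative assumption, not a figure from this section; only the 20–20,000 Hz range comes from the text. The sketch models one idealized neuron, so its cutoff is not meant to match the roughly 4000 Hz figure given for rate coding in the next paragraph.

```python
# Minimal sketch of the limit on the temporal (rate) theory.
# ASSUMPTION: at most one action potential per absolute refractory period;
# 1 ms is an illustrative value, not a measurement from the text.
REFRACTORY_PERIOD_MS = 1.0
MAX_FIRING_RATE_HZ = 1000 / REFRACTORY_PERIOD_MS  # spikes per second

HUMAN_RANGE_HZ = (20, 20_000)  # audible range given in the text


def rate_code_possible(frequency_hz: float) -> bool:
    """True if firing once per sound-wave cycle stays under the ceiling."""
    return frequency_hz <= MAX_FIRING_RATE_HZ


def fraction_of_range_rate_coded() -> float:
    """Fraction of the audible range (linear Hz scale) that one neuron
    could follow cycle by cycle under the assumed ceiling."""
    low, high = HUMAN_RANGE_HZ
    covered = max(0.0, min(high, MAX_FIRING_RATE_HZ) - low)
    return covered / (high - low)


for f in (100, 1_000, 4_000, 15_000):
    print(f, rate_code_possible(f))
print(f"{fraction_of_range_rate_coded():.1%}")
```

Under this assumption a single neuron could follow well under a tenth of the audible range, which is the gap the temporal theory cannot close on its own.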

The place theory of pitch perception suggests that different portions of the basilar membrane (a stiff structural element within the cochlea of the inner ear) are sensitive to different frequencies: the membrane moves up and down in response to incoming sound waves, and the region that moves most depends on the sound's frequency. More specifically, the base of the basilar membrane responds best to high frequencies and the tip responds best to low frequencies. Therefore, hair cells in the base would be labeled as high-pitch receptors, while those in the tip would be labeled as low-pitch receptors (Shamma, 2001). In reality, both theories explain different aspects of pitch perception. At frequencies up to about 4000 Hz, both the rate of action potentials and place contribute to our perception of pitch. However, sounds of much higher frequency can only be encoded using place cues (Shamma, 2001).
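The place code and the division of labor between rate and place cues can be sketched as follows. The position-to-frequency map is Greenwood's frequency-position function with commonly cited human parameters (A = 165.4, a = 2.1, k = 0.88); it is a published model swapped in for illustration, not something this section presents. The 4000 Hz cutoff is the section's approximate figure, and the function names are hypothetical.

```python
import math

# Greenwood frequency-position function, commonly cited human parameters.
# x is position along the basilar membrane as a proportion of its length,
# measured from the tip/apex (x = 0.0) to the base (x = 1.0).
A, a, k = 165.4, 2.1, 0.88

RATE_CUE_LIMIT_HZ = 4000  # approximate figure from the text


def best_frequency_hz(x: float) -> float:
    """Frequency that produces the largest response at position x."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be between 0 (tip) and 1 (base)")
    return A * (10 ** (a * x) - k)


def position_for_frequency(freq_hz: float) -> float:
    """Inverse of best_frequency_hz: where along the membrane freq_hz peaks."""
    return math.log10(freq_hz / A + k) / a


def pitch_cues(freq_hz: float) -> list[str]:
    """Cues available for a tone, following the section's summary."""
    return ["rate", "place"] if freq_hz <= RATE_CUE_LIMIT_HZ else ["place"]


for x in (0.0, 0.5, 1.0):
    print(f"x = {x:.1f}: {best_frequency_hz(x):,.0f} Hz")
print(pitch_cues(440), pitch_cues(10_000))
```

With these parameters the map runs from about 20 Hz at the tip to about 20,000 Hz at the base, matching the text's description of low-pitch receptors at the tip and high-pitch receptors at the base.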

Similar to the need for recognizing different pitches and frequencies, knowing where particular sounds are coming from (sound localization) is an important part of navigating the environment around us. The auditory system has the ability to use monaural (one ear) and binaural (two ears) cues to locate where a particular sound might be coming from. Each pinna interacts with incoming sound waves differently, depending on the sound’s source relative to our bodies. This interaction provides a monaural cue that is helpful in locating sounds that occur above or below and in front or behind us. The sound waves received by your two ears from sounds that come from directly above, below, in front, or behind you would be identical; therefore, monaural cues are essential (Grothe, Pecka, & McAlpine, 2010).
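Why binaural cues cannot separate sounds from directly above, below, in front, or behind can be checked with a simple geometric model. This sketch assumes a distant source, ears at the two ends of a straight interaural axis, a 0.18 m ear separation, and a 343 m/s speed of sound; it ignores the head's shadow and the pinnae entirely. Under those assumptions the timing difference depends only on how far the source direction points along the interaural axis, so every source in the midline plane gives the same value.

```python
import math

# ASSUMPTIONS (illustrative, not from the text): distant source,
# straight-line path difference, ears 0.18 m apart, sound speed 343 m/s.
EAR_SEPARATION_M = 0.18
SPEED_OF_SOUND_M_S = 343.0

# Frame: +x points out of the right ear, +y straight ahead, +z straight up.
# Each direction points from the head toward the sound source.
DIRECTIONS = {
    "above": (0.0, 0.0, 1.0),
    "below": (0.0, 0.0, -1.0),
    "in front": (0.0, 1.0, 0.0),
    "behind": (0.0, -1.0, 0.0),
    "right": (1.0, 0.0, 0.0),
}


def interaural_time_difference_s(direction: tuple[float, float, float]) -> float:
    """Left-ear arrival time minus right-ear arrival time, in seconds.

    Positive values mean the sound reaches the right ear first. Only the
    component along the interaural (x) axis matters in this model.
    """
    x, y, z = direction
    norm = math.sqrt(x * x + y * y + z * z)
    return EAR_SEPARATION_M * (x / norm) / SPEED_OF_SOUND_M_S


for name, d in DIRECTIONS.items():
    itd_us = interaural_time_difference_s(d) * 1e6
    print(f"{name:>8}: {itd_us:7.1f} microseconds")
```

All four midline directions produce a zero timing difference, so the brain needs the monaural, pinna-based cue described in the paragraph to tell them apart.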

Binaural cues, on the other hand, provide information on the location of a sound along a horizontal axis by relying on differences in patterns of vibration of the eardrum between our two ears. If a sound comes from an off-center location, it creates two types of binaural cues: interaural level differences and interaural timing differences. Interaural level difference refers to the fact that a sound coming from the right side of your body is more intense at your right ear than at your left ear because of the attenuation of the sound wave as it passes through your head. Interaural timing difference refers to the small difference in the time at which a given sound wave arrives at each ear, illustrated in Figure \(\PageIndex{2}\). Certain brain areas monitor these differences to construct where along a horizontal axis a sound originates (Grothe et al., 2010).

Figure \(\PageIndex{2}\): Localizing sound involves the use of both monaural and binaural cues. (credit "plane": modification of work by Max Pfandl)
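The size of the interaural timing difference can be estimated from head size and the sound's horizontal angle. The sketch below uses the Woodworth spherical-head approximation, ITD = (r / c)(θ + sin θ), a standard textbook model that this section does not itself present; the 0.0875 m head radius and 343 m/s speed of sound are typical illustrative values, not figures from the text. It covers angles from straight ahead (0°) to directly opposite one ear (90°).

```python
import math

# Woodworth spherical-head approximation of interaural time difference.
# ASSUMED values: head radius 8.75 cm, speed of sound in air 343 m/s.
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def woodworth_itd_s(azimuth_deg: float) -> float:
    """ITD in seconds for a distant source at azimuth_deg.

    0 degrees is straight ahead; 90 degrees is directly opposite one ear.
    This form of the formula is only used here for 0-90 degrees.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


for az in (0, 15, 45, 90):
    print(f"{az:>2} deg: {woodworth_itd_s(az) * 1e6:5.0f} microseconds")
```

Even for a source directly opposite one ear, the difference is only about 0.66 ms with these values, which shows how fine the timing comparisons made by the brain areas mentioned above must be.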

Hearing Loss


- About 2 to 3 out of every 1,000 children in the United States are born with a detectable level of hearing loss in one or both ears (https://www.nidcd.nih.gov/health/sta...istics-hearing).

- More than 90 percent of deaf children are born to hearing parents (https://www.nidcd.nih.gov/health/sta...istics-hearing).

- Approximately 15% of American adults (37.5 million) aged 18 and over report some trouble hearing (https://www.nidcd.nih.gov/health/statistics/quick-statistics-hearing).

Conductive hearing loss can be caused by physical damage to the ear (such as to the eardrum); this condition reduces the ear's ability to transfer vibrations from the outer ear to the inner ear. Conductive hearing loss can also result from fusion of the ossicles (the three small bones of the middle ear). Sensorineural hearing loss, which is caused by damage to the cilia of the hair cells or to the auditory nerve, is not as common as conductive hearing loss, but its likelihood increases with age (Tennesen, 2007). Damage to the cilia accumulates as we get older; by age 65, about 40% of individuals have some damage to the cilia (Chisolm, Willott, & Lister, 2003).

Individuals who have experienced sensorineural hearing loss may benefit from a cochlear implant. Data from the National Institutes of Health show that, as of December 2019, approximately 736,900 cochlear implants had been implanted worldwide. In the United States, roughly 118,100 devices had been implanted in adults and 65,000 in children. The following video explains the cochlear implant process.

Cochlear implant surgeries and how they work:

https://www.youtube.com/watch?v=AqXBrKwB96E
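The NIH implant figures can be combined with simple arithmetic: adding the adult and child counts gives the U.S. total, and dividing by the worldwide count gives the U.S. share. Only the three input counts come from the text; the derived values are computed here, and the variable names are illustrative.

```python
# Counts as of December 2019, as reported in the text (NIH).
WORLDWIDE = 736_900
US_ADULTS = 118_100
US_CHILDREN = 65_000

us_total = US_ADULTS + US_CHILDREN  # all U.S. recipients
us_share = us_total / WORLDWIDE     # fraction of worldwide implants

print(f"U.S. total: {us_total:,}")
print(f"U.S. share of worldwide implants: {us_share:.1%}")
```

By this calculation, about 183,100 of the devices, roughly a quarter of the worldwide total, had been implanted in the United States.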

Deafness and Deaf Culture

In most modern nations, people who are born deaf or become deaf at an early age have developed their own system of communication and their own culture, shared among themselves and the people close to them. It has been argued that encouraging deaf people to sign is a more appropriate adjustment than encouraging them to speak, read lips, or undergo cochlear implant surgery. However, more recent studies suggest that, because of advances in technology, cochlear implants increase the likelihood that a person can engage in some auditory and speaking activities if the implant is received early enough (Dettman, Pinder, Briggs, Dowell, & Leigh, 2007; Dorman & Wilson, 2004). As a result, parents often face a difficult decision: whether to take advantage of new technologies and approaches that support deaf students in mainstream classroom settings, or to use American Sign Language (ASL) schools and encourage greater immersion in those settings.

Hearing and the Vestibular System

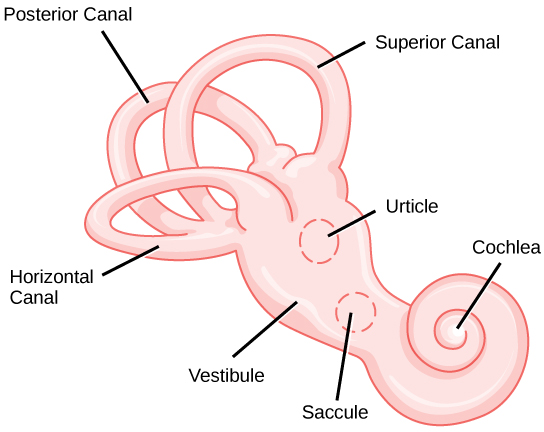

The vestibular system has some similarities with the auditory system. It uses hair cells just as the auditory system does, but it excites them in different ways. There are five vestibular receptor organs in the inner ear: the utricle, the saccule, and three semicircular canals. Together, they make up what is known as the vestibular labyrinth, shown in Figure \(\PageIndex{3}\). The utricle and saccule respond to acceleration in a straight line, such as gravity. The roughly 30,000 hair cells in the utricle and 16,000 hair cells in the saccule lie below a gelatinous layer, with their stereocilia projecting into the gelatin. Embedded in this gelatin are calcium carbonate crystals, like tiny rocks. When the head is tilted, the crystals continue to be pulled straight down by gravity, but the new angle of the head causes the gelatin to shift, thereby bending the stereocilia. The bending of the stereocilia stimulates the neurons, which signal to the brain that the head is tilted, allowing us to maintain balance. The vestibular branch of the vestibulocochlear cranial nerve is the one that deals with balance.

Figure \(\PageIndex{3}\): The structure of the vestibular labyrinth is shown. (credit: modification of work by NIH)
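The tilt mechanism of the utricle and saccule can be summarized with basic vector geometry. In this simplified sketch (an idealization, not a physiological model), the sensory surface is treated as a flat plate that is horizontal when the head is upright, and standard gravity is taken as 9.81 m/s². When the head tilts by an angle θ, gravity's component along the plate, g·sin θ, is the part that shifts the crystal-laden gelatin and bends the stereocilia, while g·cos θ presses straight into the plate. The function name is hypothetical.

```python
import math

G_M_S2 = 9.81  # standard gravity, approximate (ASSUMED value)


def gravity_components(tilt_deg: float) -> tuple[float, float]:
    """Split gravity into components relative to an idealized flat sensory surface.

    Returns (shear, normal) in m/s^2. In this simplified picture the shear
    component, parallel to the surface, is what displaces the gelatin and
    bends the stereocilia. With the head upright (0 degrees) it is zero.
    """
    theta = math.radians(tilt_deg)
    return G_M_S2 * math.sin(theta), G_M_S2 * math.cos(theta)


for tilt in (0, 10, 30, 90):
    shear, normal = gravity_components(tilt)
    print(f"tilt {tilt:>2} deg: shear {shear:5.2f}, normal {normal:5.2f} m/s^2")
```

The shear is zero when the head is upright and grows as the head tilts, consistent with the text's account that a tilted head shifts the gelatin and bends the stereocilia.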

Attributions

- Hearing sections adapted by Isaias Hernandez from "Anatomy and Physiology from Oregon State University"; https://open.oregonstate.education/aandp/chapter/15-3-hearing/

- Hearing sections adapted by Isaias Hernandez from "Psychology 2e from OpenStax"; https://openstax.org/books/psychology-2e/pages/5-4-hearing