4.2: Hearing

University of Minnesota

Hearing allows us to perceive the world of acoustic vibrations all around us, and provides us with our most important channels of communication. This module reviews the basic mechanisms of hearing, beginning with the anatomy and physiology of the ear and a brief review of the auditory pathways up to the auditory cortex. An outline of the basic perceptual attributes of sound, including loudness, pitch, and timbre, is followed by a review of the principles of tonotopic organization, established in the cochlea. An overview of masking and frequency selectivity is followed by a review of the perception and neural mechanisms underlying spatial hearing. Finally, an overview is provided of auditory scene analysis, which tackles the important question of how the auditory system is able to make sense of the complex mixtures of sounds that are encountered in everyday acoustic environments.

Learning Objectives

- Describe the basic auditory attributes of sound.

- Describe the structure and general function of the auditory pathways from the outer ear to the auditory cortex.

- Discuss ways in which we are able to locate sounds in space.

- Describe various acoustic cues that contribute to our ability to perceptually segregate simultaneously arriving sounds.

Hearing forms a crucial part of our everyday life. Most of our communication with others, via speech or music, reaches us through the ears. Indeed, a saying, often attributed to Helen Keller, is that blindness separates us from things, but deafness separates us from people. The ears respond to acoustic information, or sound—tiny and rapid variations in air pressure. Sound waves travel from the source and produce pressure variations in the listener’s ear canals, causing the eardrums (or tympanic membranes) to vibrate. This module provides an overview of the events that follow, which convert these simple mechanical vibrations into our rich experience known as hearing, or auditory perception.

Perceptual Attributes of Sound

There are many ways to describe a sound, but the perceptual attributes of a sound can typically be divided into three main categories—namely, loudness, pitch, and timbre. Although all three refer to perception, and not to the physical sounds themselves, they are strongly related to various physical variables.

Loudness

The most direct physical correlate of loudness is sound intensity (or sound pressure) measured close to the eardrum. However, many other factors also influence the loudness of a sound, including its frequency content, its duration, and the context in which it is presented. Some of the earliest psychophysical studies of auditory perception, going back more than a century, were aimed at examining the relationships between perceived loudness, the physical sound intensity, and the just-noticeable differences in loudness (Fechner, 1860; Stevens, 1957). A great deal of time and effort has been spent refining various measurement methods. These methods involve techniques such as magnitude estimation, where a series of sounds (often sinusoids, or pure tones of single frequency) are presented sequentially at different sound levels, and subjects are asked to assign numbers to each tone, corresponding to the perceived loudness. Other studies have examined how loudness changes as a function of the frequency of a tone, resulting in the international standard iso-loudness-level contours (ISO, 2003), which are used in many areas of industry to assess noise and annoyance issues. Such studies have led to the development of computational models that are designed to predict the loudness of arbitrary sounds (e.g., Moore, Glasberg, & Baer, 1997).
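As a rough illustration of how a numerical loudness scale behaves, the sketch below uses a widely quoted rule of thumb: for a 1 kHz tone above about 40 dB SPL, perceived loudness roughly doubles for every 10 dB increase in level. The sone unit, the 40 dB anchor point, and the function name `loudness_in_sones` are assumptions introduced here for illustration. They are not taken from the computational models cited above, which are considerably more detailed.

```python
def loudness_in_sones(level_db_spl: float) -> float:
    """Approximate loudness of a 1 kHz tone, in sones.

    Assumes the common rule of thumb: 1 sone at 40 dB SPL, with loudness
    doubling for every 10 dB increase. Only a rough guide, and only above
    about 40 dB SPL.
    """
    return 2.0 ** ((level_db_spl - 40.0) / 10.0)


for level in (40, 50, 60, 80):
    print(f"{level} dB SPL is roughly {loudness_in_sones(level):g} sones")
```

Under this rule, a 20 dB increase in level (a hundredfold increase in intensity) corresponds to only about a fourfold increase in loudness, which is one way of seeing that loudness is not simply proportional to physical intensity.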

Pitch

Pitch plays a crucial role in acoustic communication. Pitch variations over time provide the basis of melody for most types of music; pitch contours in speech provide us with important prosodic information in non-tone languages, such as English, and help define the meaning of words in tone languages, such as Mandarin Chinese. Pitch is essentially the perceptual correlate of waveform periodicity, or repetition rate: The faster a waveform repeats over time, the higher its perceived pitch. The most common pitch-evoking sounds are known as harmonic complex tones. They are complex because they consist of more than one frequency, and they are harmonic because the frequencies are all integer multiples of a common fundamental frequency (F0). For instance, a harmonic complex tone with an F0 of 100 Hz would also contain energy at frequencies of 200, 300, 400 Hz, and so on. These higher frequencies are known as harmonics or overtones, and they also play an important role in determining the pitch of a sound. In fact, even if the energy at the F0 is absent or masked, we generally still perceive the remaining sound to have a pitch corresponding to the F0. This phenomenon is known as the “pitch of the missing fundamental,” and it has played an important role in the formation of theories and models about pitch (de Cheveigné, 2005). We hear pitch with sufficient accuracy to perceive melodies over a range of F0s from about 30 Hz (Pressnitzer, Patterson, & Krumbholz, 2001) up to about 4–5 kHz (Attneave & Olson, 1971; Oxenham, Micheyl, Keebler, Loper, & Santurette, 2011). This range also corresponds quite well to the range covered by musical instruments; for instance, the modern grand piano has notes that extend from 27.5 Hz to 4,186 Hz. We are able to discriminate changes in frequency above 5,000 Hz, but we are no longer very accurate in recognizing melodies or judging musical intervals.
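The link between pitch and waveform periodicity can be illustrated with a small sketch. The code below builds a harmonic complex tone from harmonics 2, 3, and 4 of 100 Hz, so that no energy is present at 100 Hz itself, and then looks for the delay at which the waveform best matches a shifted copy of itself (an autocorrelation). The sample rate, the search range, and the function names are illustrative assumptions, and autocorrelation is used here only as a convenient way to measure repetition rate, not as a claim about how the auditory system computes pitch.

```python
import math


def harmonic_complex(f0, harmonics, sample_rate, n_samples):
    """Sum equal-amplitude cosines at the given harmonic numbers of f0."""
    return [
        sum(math.cos(2 * math.pi * h * f0 * n / sample_rate) for h in harmonics)
        for n in range(n_samples)
    ]


def estimate_repetition_rate(samples, sample_rate, min_f0, max_f0, window):
    """Return the rate (Hz) whose lag maximizes the autocorrelation."""
    min_lag = int(sample_rate / max_f0)
    max_lag = int(sample_rate / min_f0)
    best_lag = max(
        range(min_lag, max_lag + 1),
        key=lambda lag: sum(samples[n] * samples[n + lag] for n in range(window)),
    )
    return sample_rate / best_lag


SAMPLE_RATE = 8000  # Hz (illustrative choice)
WINDOW = 800        # 0.1 s of signal compared at each lag

# Harmonics 2, 3 and 4 of 100 Hz: energy at 200, 300 and 400 Hz, none at 100 Hz.
tone = harmonic_complex(100.0, (2, 3, 4), SAMPLE_RATE, WINDOW + 200)
estimated = estimate_repetition_rate(tone, SAMPLE_RATE, 60.0, 400.0, WINDOW)
print(f"Estimated repetition rate: {estimated:.1f} Hz")
```

Even though the lowest component in the signal is 200 Hz, the waveform as a whole repeats every 10 ms, so the estimate comes out at 100 Hz, the missing fundamental.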

Timbre

Timbre refers to the quality of sound, and is often described using words such as bright, dull, harsh, and hollow. Technically, timbre includes anything that allows us to distinguish two sounds that have the same loudness, pitch, and duration. For instance, a violin and a piano playing the same note sound very different, based on their sound quality or timbre.

An important aspect of timbre is the spectral content of a sound. Sounds with more high-frequency energy tend to sound brighter, tinnier, or harsher than sounds with more low-frequency content, which might be described as deep, rich, or dull. Other important aspects of timbre include the temporal envelope (or outline) of the sound, especially how it begins and ends. For instance, a piano has a rapid onset, or attack, produced by the hammer striking the string, whereas the attack of a clarinet note can be much more gradual. Artificially changing the onset of a piano note by, for instance, playing a recording backwards, can dramatically alter its character so that it is no longer recognizable as a piano note. In general, the overall spectral content and the temporal envelope can provide a good first approximation to any sound, but it turns out that subtle changes in the spectrum over time (or spectro-temporal variations) are crucial in creating plausible imitations of natural musical instruments (Risset & Wessel, 1999).

An Overview of the Auditory System

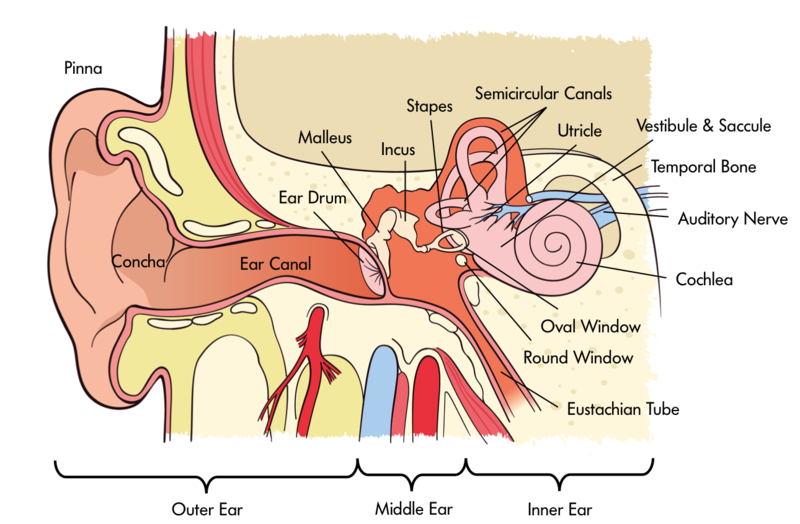

Our auditory perception depends on how sound is processed through the ear. The ear can be divided into three main parts—the outer, middle, and inner ear (see Figure 8.4.1). The outer ear consists of the pinna (the visible part of the ear, with all its unique folds and bumps), the ear canal (or auditory meatus), and the tympanic membrane. Of course, most of us have two functioning ears, which turn out to be particularly useful when we are trying to figure out where a sound is coming from. As discussed below in the section on spatial hearing, our brain can compare the subtle differences in the signals at the two ears to localize sounds in space. However, this trick does not always help: for instance, a sound directly in front or directly behind you will not produce a difference between the ears. In these cases, the filtering produced by the pinnae helps us localize sounds and resolve potential front-back and up-down confusions. More generally, the folds and bumps of the pinna produce distinct peaks and dips in the frequency response that depend on the location of the sound source. The brain then learns to associate certain patterns of spectral peaks and dips with certain spatial locations. Interestingly, this learned association remains malleable, or plastic, even in adulthood. For instance, a study that altered the pinnae using molds found that people could learn to use their “new” ears accurately within a matter of a few weeks (Hofman, Van Riswick, & Van Opstal, 1998). Because of the small size of the pinna, these kinds of acoustic cues are only found at high frequencies, above about 2 kHz. At lower frequencies, the sound is basically unchanged whether it comes from above, in front, or below. The ear canal itself is a tube that helps to amplify sound in the region from about 1 to 4 kHz—a region particularly important for speech communication.

The middle ear consists of an air-filled cavity, which contains the middle-ear bones, known as the incus, malleus, and stapes, or anvil, hammer, and stirrup, because of their respective shapes. They have the distinction of being the smallest bones in the body. Their primary function is to transmit the vibrations from the tympanic membrane to the oval window of the cochlea and, via a form of lever action, to better match the impedance of the air surrounding the tympanic membrane with that of the fluid within the cochlea.

The inner ear includes the cochlea, encased in the temporal bone of the skull, in which the mechanical vibrations of sound are transduced into neural signals that are processed by the brain. The cochlea is a spiral-shaped structure that is filled with fluid. Along the length of the spiral runs the basilar membrane, which vibrates in response to the pressure differences produced by vibrations of the oval window. Sitting on the basilar membrane is the organ of Corti, which runs the entire length of the basilar membrane from the base (by the oval window) to the apex (the “tip” of the spiral). The organ of Corti includes three rows of outer hair cells and one row of inner hair cells. The hair cells sense the vibrations by way of their tiny hairs, or stereocilia. The outer hair cells seem to function to mechanically amplify the sound-induced vibrations, whereas the inner hair cells form synapses with the auditory nerve and transduce those vibrations into action potentials, or neural spikes, which are transmitted along the auditory nerve to higher centers of the auditory pathways.

One of the most important principles of hearing—frequency analysis—is established in the cochlea. In a way, the action of the cochlea can be likened to that of a prism: the many frequencies that make up a complex sound are broken down into their constituent frequencies, with low frequencies creating maximal basilar-membrane vibrations near the apex of the cochlea and high frequencies creating maximal basilar-membrane vibrations nearer the base of the cochlea. This decomposition of sound into its constituent frequencies, and the frequency-to-place mapping, or “tonotopic” representation, is a major organizational principle of the auditory system, and is maintained in the neural representation of sounds all the way from the cochlea to the primary auditory cortex. The decomposition of sound into its constituent frequency components is part of what allows us to hear more than one sound at a time. In addition to representing frequency by place of excitation within the cochlea, frequencies are also represented by the timing of spikes within the auditory nerve. This property, known as “phase locking,” is crucial in comparing time-of-arrival differences of waveforms between the two ears (see the section on spatial hearing, below).
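The prism analogy can be made concrete with a discrete Fourier transform, which also breaks a complex waveform into its constituent frequencies. The sketch below is only an analogy for the idea of frequency analysis, not a model of cochlear mechanics. The sample rate, the two tone frequencies, and the detection threshold are arbitrary values chosen so that each tone falls exactly on one analysis bin.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Magnitude of each frequency bin from 0 up to (not including) Nyquist."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))) / n
        for k in range(n // 2)
    ]


SAMPLE_RATE = 2560  # Hz; with 256 samples each bin is 10 Hz wide (illustrative)
N_SAMPLES = 256

# A "complex sound": a 200 Hz tone plus a weaker 500 Hz tone.
mixture = [
    1.0 * math.sin(2 * math.pi * 200 * i / SAMPLE_RATE)
    + 0.5 * math.sin(2 * math.pi * 500 * i / SAMPLE_RATE)
    for i in range(N_SAMPLES)
]

magnitudes = dft_magnitudes(mixture)
bin_width = SAMPLE_RATE / N_SAMPLES
# Keep only the bins that carry a clearly non-zero amount of energy.
components = [k * bin_width for k, m in enumerate(magnitudes) if m > 0.1]
print(components)
```

The summed waveform looks nothing like either sinusoid on its own, yet the analysis recovers the two components at separate "places" (bins), much as different frequencies produce maximal vibration at different places along the basilar membrane.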

Unlike vision, where the primary visual cortex (or V1) is considered an early stage of processing, auditory signals go through many stages of processing before they reach the primary auditory cortex, located in the temporal lobe. Although we have a fairly good understanding of the electromechanical properties of the cochlea and its various structures, our understanding of the processing accomplished by higher stages of the auditory pathways remains somewhat sketchy. With the possible exception of spatial localization and neurons tuned to certain locations in space (Harper & McAlpine, 2004; Knudsen & Konishi, 1978), there is very little consensus on the how, what, and where of auditory feature extraction and representation. There is evidence for a “pitch center” in the auditory cortex from both human neuroimaging studies (e.g., Griffiths, Buchel, Frackowiak, & Patterson, 1998; Penagos, Melcher, & Oxenham, 2004) and single-unit physiology studies (Bendor & Wang, 2005), but even here there remain some questions regarding whether a single area of cortex is responsible for coding single features, such as pitch, or whether the code is more distributed (Walker, Bizley, King, & Schnupp, 2011).

Audibility, Masking, and Frequency Selectivity

Overall, the human cochlea provides us with hearing over a very wide range of frequencies. Young people with normal hearing are able to perceive sounds with frequencies ranging from about 20 Hz all the way up to 20 kHz. The range of intensities we can perceive is also impressive: the quietest sounds we can hear in the medium-frequency range (between about 1 and 4 kHz) have a sound intensity that is lower by a factor of about 1,000,000,000,000 than that of the loudest sound we can listen to without incurring rapid and permanent hearing loss. In part because of this enormous dynamic range, we tend to use a logarithmic scale, known as decibels (dB), to describe sound pressure or intensity. On this scale, 0 dB sound pressure level (SPL) is defined as a sound pressure of 20 micropascals (μPa), which corresponds roughly to the quietest perceptible sound level, and 120 dB SPL is considered dangerously loud.
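The arithmetic behind the decibel scale can be sketched in a few lines. The example below uses the standard definitions: a level difference in dB is 10 times the base-10 logarithm of an intensity ratio, or equivalently 20 times the logarithm of a pressure ratio, because intensity is proportional to pressure squared. The function names are introduced here for illustration, and the pressures are assumed to be root-mean-square values.

```python
import math

REFERENCE_PRESSURE_PA = 20e-6  # 20 micropascals, the 0 dB SPL reference


def intensity_ratio_to_db(ratio: float) -> float:
    """Level difference in dB for a ratio of two sound intensities."""
    return 10.0 * math.log10(ratio)


def pressure_to_db_spl(pressure_pa: float) -> float:
    """Sound pressure level in dB SPL for an RMS pressure in pascals."""
    return 20.0 * math.log10(pressure_pa / REFERENCE_PRESSURE_PA)


print(intensity_ratio_to_db(1e12))  # the intensity range quoted in the text
print(pressure_to_db_spl(20e-6))    # the reference pressure itself
print(pressure_to_db_spl(20.0))     # a pressure one million times larger
```

The intensity range of 1,000,000,000,000 to 1 therefore spans about 120 dB, which matches the interval between 0 dB SPL and the 120 dB SPL level described as dangerously loud.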

Masking is the process by which the presence of one sound makes another sound more difficult to hear. We all encounter masking in our everyday lives, when we fail to hear the phone ring while we are taking a shower, or when we struggle to follow a conversation in a noisy restaurant. In general, a more intense sound will mask a less intense sound, provided certain conditions are met. The most important condition is that the frequency content of the sounds overlap, such that the activity in the cochlea produced by a masking sound “swamps” that produced by the target sound. Another type of masking, known as “suppression,” occurs when the response to the masker reduces the neural (and in some cases, the mechanical) response to the target sound. Because of the way that filtering in the cochlea functions, low-frequency sounds are more likely to mask high frequencies than vice versa, particularly at high sound intensities. This asymmetric aspect of masking is known as the “upward spread of masking.” The loss of sharp cochlear tuning that often accompanies cochlear damage leads to broader filtering and more masking—a physiological phenomenon that is likely to contribute to the difficulties experienced by people with hearing loss in noisy environments (Moore, 2007).

Although much masking can be explained in terms of interactions within the cochlea, there are other forms that cannot be accounted for so easily, and that can occur even when interactions within the cochlea are unlikely. These more central types of masking take several forms, but have often been categorized together under the term “informational masking” (Durlach et al., 2003; Watson & Kelly, 1978). Relatively little is known about the causes of informational masking, although most forms can be ascribed to a perceptual “fusion” of the masker and target sounds, or at least a failure to segregate the target from the masking sounds. Relatively little is also known about the physiological locus of informational masking, except that at least some forms seem to originate in the auditory cortex and not before (Gutschalk, Micheyl, & Oxenham, 2008).

Spatial Hearing

In contrast to vision, we have a 360° field of hearing. Our auditory acuity is, however, at least an order of magnitude poorer than vision in locating an object in space. Consequently, our auditory localization abilities are most useful in alerting us and allowing us to orient towards sources, with our visual sense generally providing the finer-grained analysis. Of course, there are differences between species, and some, such as barn owls and echolocating bats, have developed highly specialized sound localization systems.

Our ability to locate sound sources in space is an impressive feat of neural computation. The two main sources of information both come from a comparison of the sounds at the two ears. The first is based on interaural time differences (ITDs) and relies on the fact that a sound source on the left will generate sound that will reach the left ear slightly before it reaches the right ear. Although sound is much slower than light, it is still fast enough that the difference in time of arrival between the two ears is only a fraction of a millisecond. The largest ITDs we encounter in the real world (when sounds are directly to the left or right of us) are only a little over half a millisecond. With some practice, humans can learn to detect an ITD of between 10 and 20 μs (i.e., 10 to 20 millionths of a second) (Klump & Eady, 1956).
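The size of these time differences can be estimated from simple geometry. The sketch below is not taken from the studies cited here. It treats the head as a rigid sphere and uses the spherical-head (Woodworth) approximation, ITD = (r / c)(θ + sin θ), where r is the head radius, c is the speed of sound, and θ is the azimuth of a distant source. The head radius of 8.75 cm and the speed of sound of 343 m/s are assumed typical values.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at about 20 degrees Celsius
HEAD_RADIUS_M = 0.0875      # assumed: a typical adult head treated as a sphere


def itd_seconds(azimuth_deg: float) -> float:
    """Approximate ITD for a distant source at the given azimuth.

    0 degrees is straight ahead and 90 degrees is directly to one side.
    Uses the spherical-head (Woodworth) approximation, intended for
    azimuths between 0 and 90 degrees.
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


for azimuth in (0, 15, 45, 90):
    print(f"{azimuth:>2} degrees: {itd_seconds(azimuth) * 1e6:.0f} microseconds")
```

With these assumed values, a source directly to one side gives an ITD of roughly 0.66 ms, in line with the figure of a little over half a millisecond, while a source straight ahead gives no time difference at all.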

The second source of information is based on interaural level differences (ILDs). At higher frequencies (higher than about 1 kHz), the head casts an acoustic “shadow,” so that when a sound is presented from the left, the sound level at the left ear is somewhat higher than the sound level at the right ear. At very high frequencies, the ILD can be as much as 20 dB, and we are sensitive to differences as small as 1 dB.

As mentioned briefly in the discussion of the outer ear, information regarding the elevation of a sound source, or whether it comes from in front or behind, is contained in high-frequency spectral details that result from the filtering effects of the pinnae.

In general, we are most sensitive to ITDs at low frequencies (below about 1.5 kHz). At higher frequencies we can still perceive changes in timing based on the slowly varying temporal envelope of the sound but not the temporal fine structure (Bernstein & Trahiotis, 2002; Smith, Delgutte, & Oxenham, 2002), perhaps because of a loss of neural phase-locking to the temporal fine structure at high frequencies. In contrast, ILDs are most useful at high frequencies, where the head shadow is greatest. This use of different acoustic cues in different frequency regions led to the classic and very early “duplex theory” of sound localization (Rayleigh, 1907). For everyday sounds with a broad frequency spectrum, it seems that our perception of spatial location is dominated by interaural time differences in the low-frequency temporal fine structure (Macpherson & Middlebrooks, 2002).

As with vision, our perception of distance depends to a large degree on context. If we hear someone shouting at a very low sound level, we infer that the shouter must be far away, based on our knowledge of the sound properties of shouting. In rooms and other enclosed locations, the reverberation can also provide information about distance: As a speaker moves further away, the direct sound level decreases but the sound level of the reverberation remains about the same; therefore, the ratio of direct-to-reverberant energy decreases (Zahorik & Wightman, 2001).
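The reverberation cue can be illustrated with a toy calculation. The sketch below assumes that the direct sound from a small source falls by about 6 dB for every doubling of distance (the inverse-square law for a free field) and that the reverberant level is the same everywhere in the room. The specific levels are hypothetical numbers chosen only to show the trend, and real rooms will deviate from this idealization.

```python
import math


def direct_level_db(distance_m: float, level_at_1m_db: float) -> float:
    """Direct sound level, assuming a 6 dB drop per doubling of distance."""
    return level_at_1m_db - 20.0 * math.log10(distance_m)


def direct_to_reverberant_db(distance_m, level_at_1m_db, reverb_level_db):
    """Direct-to-reverberant ratio in dB for a constant reverberant level."""
    return direct_level_db(distance_m, level_at_1m_db) - reverb_level_db


# Hypothetical room: 70 dB direct level at 1 m, 55 dB reverberant level everywhere.
for distance in (1, 2, 4, 8):
    ratio = direct_to_reverberant_db(distance, 70.0, 55.0)
    print(f"{distance} m: direct-to-reverberant ratio {ratio:+.1f} dB")
```

In this idealized room the ratio falls steadily as the talker moves away, and beyond a certain distance the reverberant sound becomes stronger than the direct sound, which is the kind of change a listener could use as a distance cue.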

Auditory Scene Analysis

There is usually more than one sound source in the environment at any one time—imagine talking with a friend at a café, with some background music playing, the rattling of coffee mugs behind the counter, traffic outside, and a conversation going on at the table next to yours. All these sources produce sound waves that combine to form a single complex waveform at the eardrum, the shape of which may bear very little relationship to any of the waves produced by the individual sound sources. Somehow the auditory system is able to break down, or decompose, these complex waveforms and allow us to make sense of our acoustic environment by forming separate auditory “objects” or “streams,” which we can follow as the sounds unfold over time (Bregman, 1990).

A number of heuristic principles have been formulated to describe how sound elements are grouped to form a single object or segregated to form multiple objects. Many of these originate from the early ideas proposed in vision by the so-called Gestalt psychologists, such as Max Wertheimer. According to these rules of thumb, sounds that are in close proximity, in time or frequency, tend to be grouped together. Also, sounds that begin and end at the same time tend to form a single auditory object. Interestingly, spatial location is not always a strong or reliable grouping cue, perhaps because the location information from individual frequency components is often ambiguous due to the effects of reverberation. Several studies have looked into the relative importance of different cues by “trading off” one cue against another. In some cases, this has led to the discovery of interesting auditory illusions, where melodies that are not present in the sounds presented to either ear emerge in the perception (Deutsch, 1979), or where a sound element is perceptually “lost” in competing perceptual organizations (Shinn-Cunningham, Lee, & Oxenham, 2007).

More recent attempts have used computational and neurally based approaches to uncover the mechanisms of auditory scene analysis (e.g., Elhilali, Ma, Micheyl, Oxenham, & Shamma, 2009), and the field of computational auditory scene analysis (CASA) has emerged in part as an effort to move towards more principled, and less heuristic, approaches to understanding the parsing and perception of complex auditory scenes (e.g., Wang & Brown, 2006). Solving this problem will not only provide us with a better understanding of human auditory perception, but may also provide new approaches to “smart” hearing aids and cochlear implants, as well as automatic speech recognition systems that are more robust to background noise.

Conclusion

Hearing provides us with our most important connection to the people around us. The intricate physiology of the auditory system transforms the tiny variations in air pressure that reach our ear into the vast array of auditory experiences that we perceive as speech, music, and sounds from the environment around us. We are only beginning to understand the basic principles of neural coding in higher stages of the auditory system, and how they relate to perception. However, even our rudimentary understanding has improved the lives of hundreds of thousands through devices such as cochlear implants, which re-create some of the ear’s functions for people with profound hearing loss.

Outside Resources

- Audio: Auditory Demonstrations from Richard Warren’s lab at the University of Wisconsin, Milwaukee

- www4.uwm.edu/APL/demonstrations.html

- Audio: Auditory Demonstrations. CD published by the Acoustical Society of America (ASA). You can listen to the demonstrations here

- www.feilding.net/sfuad/musi30...1/demos/audio/

- Web: Demonstrations and illustrations of cochlear mechanics can be found here

- http://lab.rockefeller.edu/hudspeth/...calSimulations

- Web: More demonstrations and illustrations of cochlear mechanics

- www.neurophys.wisc.edu/animations/

Discussion Questions

- Based on the available acoustic cues, how good do you think we are at judging whether a low-frequency sound is coming from in front of us or behind us? How might we solve this problem in the real world?

- Outer hair cells contribute not only to amplification but also to the frequency tuning in the cochlea. What are some of the difficulties that might arise for people with cochlear hearing loss, due to these two factors? Why do hearing aids not solve all these problems?

- Why do you think the auditory system has so many stages of processing before the signals reach the auditory cortex, compared to the visual system? Is there a difference in the speed of processing required?

Vocabulary

- Cochlea

- Snail-shell-shaped organ that transduces mechanical vibrations into neural signals.

- Interaural differences

- Differences (usually in time or intensity) between the two ears.

- Pinna

- Visible part of the outer ear.

- Tympanic membrane

- Ear drum, which separates the outer ear from the middle ear.

References

- Attneave, F., & Olson, R. K. (1971). Pitch as a medium: A new approach to psychophysical scaling. American Journal of Psychology, 84, 147–166.

- Bendor, D., & Wang, X. (2005). The neuronal representation of pitch in primate auditory cortex. Nature, 436, 1161–1165.

- Bernstein, L. R., & Trahiotis, C. (2002). Enhancing sensitivity to interaural delays at high frequencies by using "transposed stimuli." Journal of the Acoustical Society of America, 112, 1026–1036.

- Bregman, A. S. (1990). Auditory scene analysis: The perceptual organization of sound. Cambridge, MA: MIT Press.

- Deutsch, D. (1979). Binaural integration of melodic patterns. Perception & Psychophysics, 25, 399–405.

- Durlach, N. I., Mason, C. R., Kidd, G., Jr., Arbogast, T. L., Colburn, H. S., & Shinn-Cunningham, B. G. (2003). Note on informational masking. Journal of the Acoustical Society of America, 113, 2984–2987.

- Elhilali, M., Ma, L., Micheyl, C., Oxenham, A. J., & Shamma, S. (2009). Temporal coherence in the perceptual organization and cortical representation of auditory scenes. Neuron, 61, 317–329.

- Fechner, G. T. (1860). Elemente der Psychophysik (Vol. 1). Leipzig, Germany: Breitkopf und Haertl.

- Griffiths, T. D., Buchel, C., Frackowiak, R. S., & Patterson, R. D. (1998). Analysis of temporal structure in sound by the human brain. Nature Neuroscience, 1, 422–427.

- Gutschalk, A., Micheyl, C., & Oxenham, A. J. (2008). Neural correlates of auditory perceptual awareness under informational masking. PLoS Biology, 6, 1156–1165 (e1138).

- Harper, N. S., & McAlpine, D. (2004). Optimal neural population coding of an auditory spatial cue. Nature, 430, 682–686.

- Hofman, P. M., Van Riswick, J. G. A., & Van Opstal, A. J. (1998). Relearning sound localization with new ears. Nature Neuroscience, 1, 417–421.

- ISO. (2003). ISO 226:2003 Acoustics - Normal equal-loudness-level contours. Geneva, Switzerland: International Organization for Standardization.

- Klump, R. G., & Eady, H. R. (1956). Some measurements of interaural time difference thresholds. Journal of the Acoustical Society of America, 28, 859–860.

- Knudsen, E. I., & Konishi, M. (1978). A neural map of auditory space in the owl. Science, 200, 795–797.

- Macpherson, E. A., & Middlebrooks, J. C. (2002). Listener weighting of cues for lateral angle: The duplex theory of sound localization revisited. Journal of the Acoustical Society of America, 111, 2219–2236.

- Moore, B. C. J. (2007). Cochlear hearing loss: Physiological, psychological, and technical issues. Chichester: Wiley.

- Moore, B. C. J., Glasberg, B. R., & Baer, T. (1997). A model for the prediction of thresholds, loudness, and partial loudness. Journal of the Audio Engineering Society, 45, 224–240.

- Oxenham, A. J., Micheyl, C., Keebler, M. V., Loper, A., & Santurette, S. (2011). Pitch perception beyond the traditional existence region of pitch. Proceedings of the National Academy of Sciences USA, 108, 7629–7634.

- Penagos, H., Melcher, J. R., & Oxenham, A. J. (2004). A neural representation of pitch salience in non-primary human auditory cortex revealed with fMRI. Journal of Neuroscience, 24, 6810–6815.

- Pressnitzer, D., Patterson, R. D., & Krumbholz, K. (2001). The lower limit of melodic pitch. Journal of the Acoustical Society of America, 109, 2074–2084.

- Rayleigh, L. (1907). On our perception of sound direction. Philosophical Magazine, 13, 214–232.

- Risset, J. C., & Wessel, D. L. (1999). Exploration of timbre by analysis and synthesis. In D. Deutsch (Ed.), The psychology of music (2nd ed., pp. 113–168). Academic Press.

- Shinn-Cunningham, B. G., Lee, A. K., & Oxenham, A. J. (2007). A sound element gets lost in perceptual competition. Proceedings of the National Academy of Sciences USA, 104, 12223–12227.

- Smith, Z. M., Delgutte, B., & Oxenham, A. J. (2002). Chimaeric sounds reveal dichotomies in auditory perception. Nature, 416, 87–90.

- Stevens, S. S. (1957). On the psychophysical law. Psychological Review, 64, 153–181.

- Walker, K. M., Bizley, J. K., King, A. J., & Schnupp, J. W. (2011). Multiplexed and robust representations of sound features in auditory cortex. Journal of Neuroscience, 31, 14565–14576.

- Wang, D., & Brown, G. J. (Eds.). (2006). Computational auditory scene analysis: Principles, algorithms, and applications. Hoboken, NJ: Wiley.

- Watson, C. S., & Kelly, W. J. (1978). Informational masking in auditory patterns. Journal of the Acoustical Society of America, 64, S39.

- Zahorik, P., & Wightman, F. L. (2001). Loudness constancy with varying sound source distance. Nature Neuroscience, 4, 78–83.

- de Cheveigné, A. (2005). Pitch perception models. In C. J. Plack, A. J. Oxenham, A. N. Popper, & R. Fay (Eds.), Pitch: Neural coding and perception (pp. 169–233). New York, NY: Springer Verlag.