Standard 7.6: Analyzing Editorials, Editorial Cartoons, or Op-Ed Commentaries

Analyze the point of view and evaluate the claims of an editorial, editorial cartoon, or op-ed commentary on a public issue at the local, state or national level. (Massachusetts Curriculum Framework for History and Social Studies) [8.T7.6]

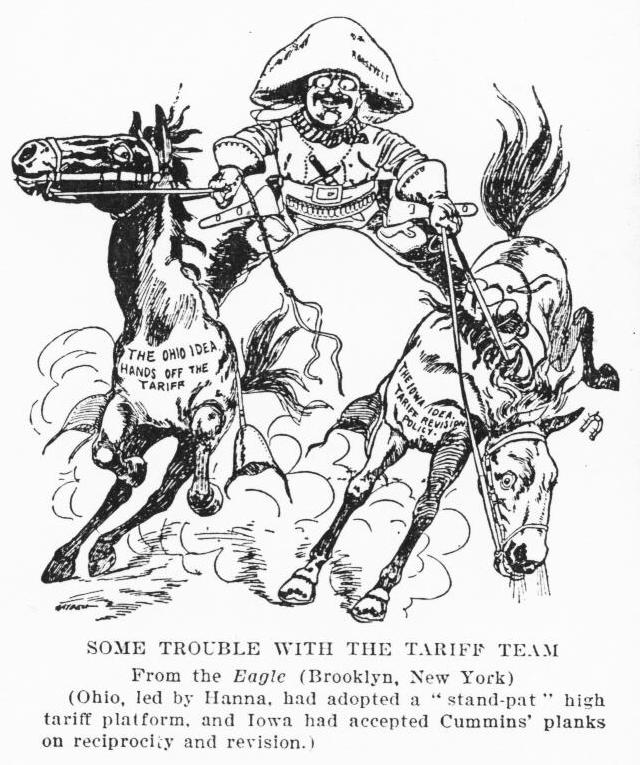

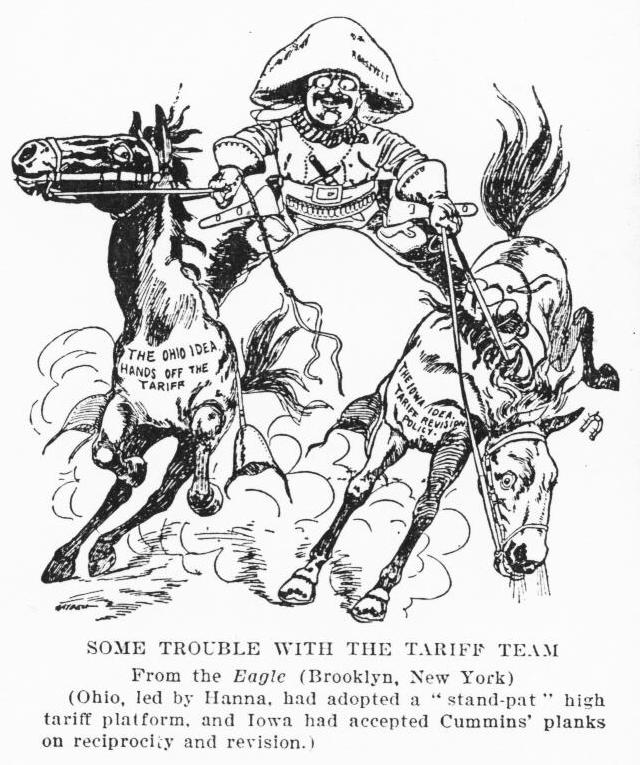

Figure \(\PageIndex{1}\): US editorial cartoon 1901. President Teddy Roosevelt watches GOP team pull apart on tariff issue | Public domain

Standard 7.6 asks students to become critical readers of editorials, editorial cartoons, and op-ed commentaries. Critical readers explore what is being said or shown, examine how information is conveyed, evaluate the language and imagery used, and investigate how truthful and accurate the author(s) are. Then they draw their own informed conclusions.

7.6.1 INVESTIGATE: Evaluating Editorials, Editorial Cartoons, and Op-Ed Commentaries

Teaching students how to critically evaluate editorials, editorial cartoons, and op-ed commentaries begins by explaining that all three are forms of persuasive writing. Writers use these genres (forms of writing) to influence how readers think and act about a topic or an issue. Editorials and op-ed commentaries rely mainly on words, while editorial cartoons combine limited text with memorable visual images. But the intent is the same for all three: to motivate, persuade, and convince readers.

Writers often use editorials, editorial cartoons, and op-ed commentaries to argue for progressive social and political change. Fighting for the Vote with Cartoons (The New York Times, August 19, 2020) shows how cartoonists used the genre to build support for women's suffrage.

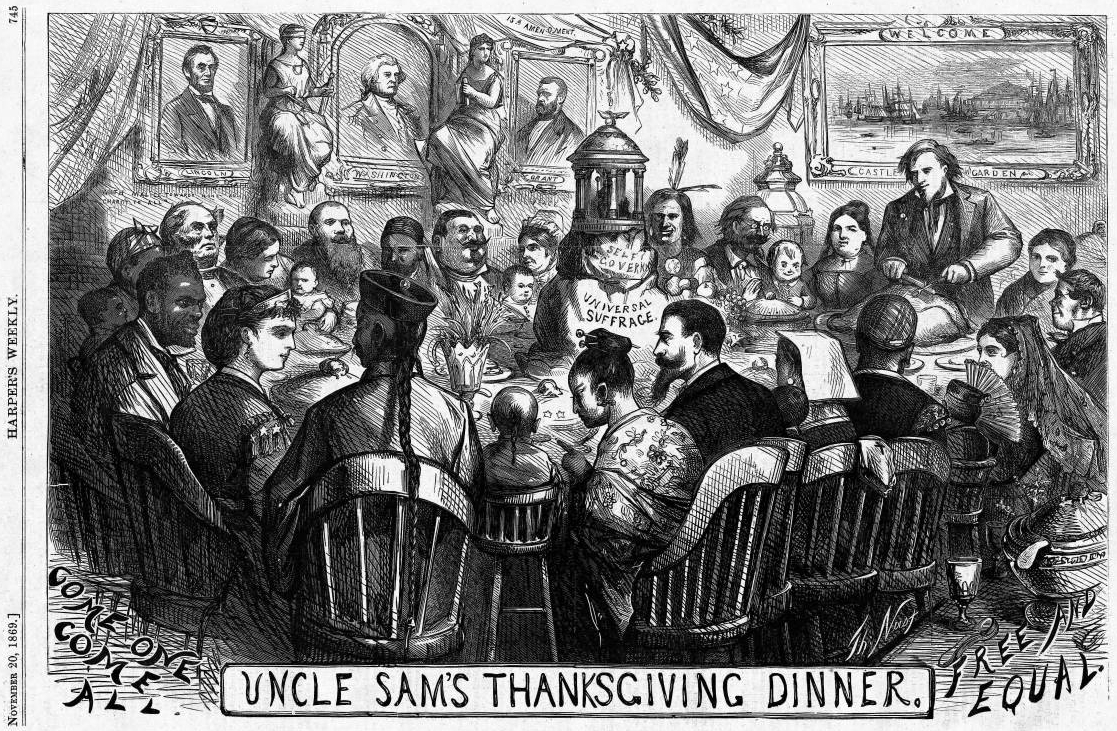

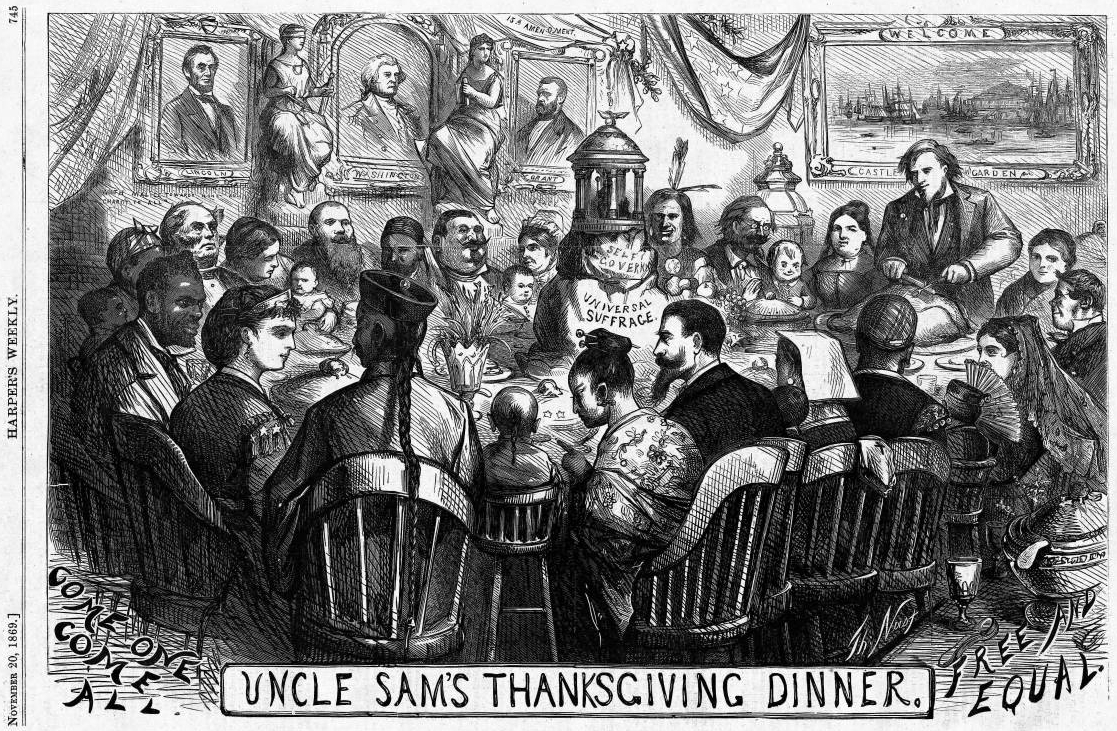

Figure \(\PageIndex{2}\): Uncle Sam's Thanksgiving Dinner (November 1869), by Thomas Nast | Public domain

But these same forms of writing can be used by individuals and groups who seek to spread disinformation and untruths.

Many teens and tweens trust that what they find on the web is accurate and unbiased (NPR, 2016). They often struggle to separate sponsored content or political commentary from actual news when viewing a webpage or a print publication. Online, they can easily be drawn off-topic by clickbait links and deliberately misrepresented information.

Op-ed commentaries drew national attention in early June 2020, when the New York Times published an opinion piece by Arkansas Senator Tom Cotton urging the President to send in armed, active-duty U.S. military troops to break up the street protests that spread across the nation after George Floyd died in the custody of Minneapolis police officers.

Many staffers at the Times publicly objected to publishing Cotton's piece, titled "Send in the Troops," arguing that the views the Senator expressed put journalists, especially journalists of color, in danger. James Bennet, the Times editorial page editor, defended the decision to publish, stating that publishing only views the editors agreed with would "undermine the integrity and independence of the New York Times." He reaffirmed that the fundamental purpose of newspapers and their editorial pages is "not to tell you what to think, but help you to think for yourself."

The situation raised unresolved questions about the place of op-ed commentaries in newspapers and other media outlets in a digital age, when material can be accessed online around the country and the world. Should any viewpoint, no matter how extreme or inflammatory, be given a forum for publication such as that provided by the op-ed section of a major newspaper's editorial page?

Many journalists, including James Bennet, urge newspapers not only to publish a wide range of viewpoints but also to provide context and clarification about the issues being discussed. Readers and viewers need links to multiple resources so they can more fully understand what is being said and assess for themselves the accuracy and appropriateness of the remarks.

Media Literacy Connections: Memes and TikToks as Political Cartoons

Political cartoons and comics, as well as memes and TikToks, are pictures with a purpose. Writers and artists use these genres to entertain, persuade, inform, and express fiction and nonfiction ideas creatively and imaginatively.

Like political cartoons and comics, memes and TikToks have the potential to provide engaging and memorable messages that can influence the political thinking and actions of voters regarding local, state, and national issues.

In this activity, you will evaluate the design and impact of political memes, TikToks, editorial cartoons, and political comics and then create your own to influence others about a public issue.

Suggested Learning Activities

- Write a commentary
  - Review the articles "Op-Ed? Editorial?" and "Op Ed Elements." What do all these terms really mean?
  - Have students write two editorial commentaries about a public issue: one with accurate and truthful information, the other using deliberate misinformation and exaggeration.
  - Students review their peers' work to examine how information is conveyed, evaluate the language and imagery used, and investigate how truthful and accurate the author(s) are.
  - As a class, discuss and vote on which commentaries are "fake news."
- Draw a political cartoon for an issue or a cause
  - Have students draw editorial cartoons about a school, community, or national issue.
  - Post the cartoons on the walls around the classroom and host a gallery walk.
  - Ask the class to evaluate the accuracy and truthfulness of each cartoon.
- Analyze a political cartoon as a primary source
  - Choose a political cartoon from a newspaper or online source.
  - Use the Cartoon Analysis Guide from the Library of Congress or a Cartoon Analysis Checklist from TeachingHistory.org to examine its point of view.

Online Resources for Evaluating Information and Analyzing Online Claims

7.6.2 UNCOVER: Deepfakes, Fake Profiles, and Political Messaging

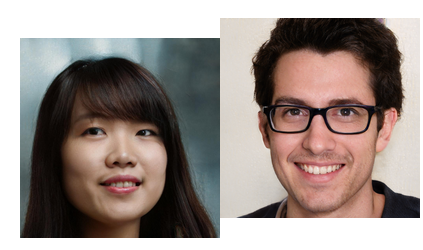

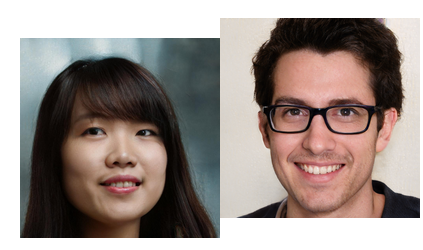

Deepfakes, fake profiles, and fake images are a new dimension of political messaging on social media. In December 2019, Facebook announced it was removing 900 accounts from its network because the accounts used fake profile photos of people who did not exist. The pictures were generated by artificial intelligence (AI) software (Graphika & the Atlantic Council's Digital Forensic Research Lab, 2019). All of the accounts were associated with The Epoch Times, a politically conservative, pro-Donald Trump news publisher.

Figure \(\PageIndex{3}\): The people in these photos do not exist; their pictures were generated by an artificial intelligence program | Image sources: left, right. Public domain

Deepfakes are digitally manipulated videos and pictures whose images and sounds appear to be real (Shao, 2019). They can be used to spread misinformation and influence voters. Researchers and cybersecurity experts warn that digital content such as facial expressions, voice, and lip movements can be manipulated so that what is being seen is "indistinguishable from reality to human eyes and ears" (Patrini et al., 2018). You can learn more from the book Deepfakes: The Coming Infocalypse by Nina Schick (2020).

For example, you can watch videos in which George W. Bush, Donald Trump, and Barack Obama appear to say things they never said (and never would), yet the footage looks authentic (see Watch a man manipulate George Bush's face in real time). Journalist Michael Tomasky, writing about the 2020 election outcomes in the New York Review of Books, cited a New York Times report that fake videos of Joe Biden "admitting to voter fraud" had been viewed 17 million times before Election Day (What Did the Democrats Win?, December 17, 2020, p. 36).

Video \(\PageIndex{1}\): Deepfakes: Can You Spot a Phony Video? by Above the Noise

To recognize deepfakes, technology experts advise viewers to look for face discolorations, poor lighting, badly synced sound and video, and blurriness where the face meets the hair and neck (Deepfake Video Explained: What They Are and How to Recognize Them, Salon, September 15, 2019). To combat deepfakes, Dutch researchers have proposed that organizations make digital forgery more difficult with techniques like those now used to tell real currency from counterfeit money, and that they invest in technologies for detecting fakes (Patrini et al., 2018).

Fake images are an enormous problem for today's social media companies. On the one hand, the companies are committed to allowing people to share material freely. On the other hand, they face a seemingly endless flow of Photoshopped material that can potentially harm people and policies. The Washington Post reported that Facebook removed some three billion fake accounts during a single six-month period in 2019. You can learn about Facebook's current efforts to regulate fake content in its regularly updated Community Standards Enforcement Report.

Photo Tampering in History

Photo tampering for political or commercial purposes happened long before modern digital tools made deepfakes and other cleverly manipulated images possible. The Library of Congress has documented that a famous photo of Abraham Lincoln is a composite: Lincoln's head superimposed on the body of the Southern politician and former Vice President John C. Calhoun. In the early decades of the 20th century, photographer Edward S. Curtis, who took more than 40,000 pictures of Native Americans over 30 years, staged and retouched his photos to try to show Native life and culture as it was before the arrival of Europeans. The Library of Congress holds the famous photos showing how Curtis removed a clock from between two Native men sitting in a hunting lodge, dressed in traditional clothing they rarely wore by that time (Jones, 2015). It has been established that Depression-era photographer Dorothea Lange staged her iconic "Migrant Mother" photograph, yet the staged image still captured the depths of poverty and sacrifice faced by so many displaced Americans during the 1930s. You can analyze this photo in more detail on this site from The Kennedy Center.

You can find more examples of fake photos in the collection Photo Tampering Through History and at the Hoax Museum's Hoax Photo Archive.

Suggested Learning Activities

- Draw an editorial cartoon
  - Show the video Can You Spot a Phony Video? from Above the Noise, KQED San Francisco.
  - Then ask students to create an editorial cartoon about deepfakes.
- Write an op-ed commentary
  - Write an op-ed commentary about fake profiles and fake images on social media and how they affect people's political views.
- Create a fake photo
  - Take a public domain historical photo and edit it using SumoPaint (free online) or Photoshop to change the context or meaning of the image.
  - Showcase the fake and real photos side by side and ask students to vote on which one is real and justify their reasoning.

7.6.3 ENGAGE: Should Facebook and Other Technology Companies Regulate Political Content on their Social Media Platforms?

Social media and technology companies generate huge amounts of revenue from advertisements on their sites. In 2018, 98.5% of Facebook's $55.8 billion in revenue came from digital ads (Investopedia, 2020). Like Facebook, YouTube earns most of its revenue from ads: sponsored videos, ads embedded in videos, and sponsored content on YouTube's landing page (How Does YouTube Make Money?). With so much money to be made, selling space for politically themed ads has become a major part of social media companies' business models.

Political Ads

Political ads are a huge part of the larger problem of fake news on social media platforms like Facebook. Researchers found that "politically relevant disinformation" received over 158 million views in the first 10 months of 2019, enough to reach every registered voter in the country at least once (Ingram, 2019, para. 2). Nearly all fake news (91%) is negative, and a majority (62%) is about Democrats and liberals (Legum, 2019, para. 5).

Figure \(\PageIndex{5}\): Social media mobile apps by Pixelkult from Pixabay

But political ads are complicated, especially when the ads themselves may not be factually accurate or are posted by extremist political groups promoting hateful and anti-democratic agendas. In late 2019, Twitter announced it would stop accepting political ads in advance of the 2020 Presidential election (CNN Business, 2019). Pinterest, TikTok, and Twitch also have policies blocking political ads, although 2020 Presidential candidates, including Donald Trump and Bernie Sanders, have channels on Twitch. Early in 2020, YouTube announced that it intends to remove misleading content that can cause "serious risk of egregious harm," a daunting task given that more than 500 hours of video are uploaded to YouTube every minute.

Facebook has changed its policy about who can run political ads on the site but has stopped short of banning or fact-checking political content. An individual or organization must now be "authorized" to run political ads. Ads now include text telling readers who paid for them and that the material is "sponsored" (meaning paid for). The company has kept a broad definition of political content, defining it as topics of "public importance" such as social issues, elections, or politics.

Read official statements by Facebook about online content, politics, and political ads:

Misinformation about Politics and Public Health

In addition to political ads, there is the major question of what to do about misinformation and outright lies deliberately posted on social media by political leaders and unscrupulous individuals. The CEOs of technology firms have been reluctant to fact-check statements by politicians, fearing their companies would be accused of censoring the free flow of information in democratic societies.

On January 8, 2021, two days after a violent rampage by a pro-Trump mob at the nation's Capitol, Twitter took the extraordinary step of permanently suspending the personal account of Donald Trump, citing the risk of further incitement of violence. You can read the text of the ban here: Permanent Suspension of @realDonaldTrump. Google and Apple soon followed by removing the right-wing site Parler from their app stores (Parler is seen as an alternative platform for extremist viewpoints and harmful misinformation).

Social media platforms had already begun removing the President's posts or labeling them as containing false and misleading information. At the beginning of August 2020, Facebook and Twitter took down a video in which the President claimed children were "almost immune" to coronavirus, citing their policies against dangerous COVID-19 misinformation (NPR, August 5, 2020). Earlier in 2020, Twitter for the first time added a fact-check to one of the President's posts about mail-in voting.

Misinformation and lies have been an ongoing feature of Trump's online statements as President and former President. The Washington Post Fact Checker reported that as of November 5, 2020, Trump had made 29,508 false or misleading statements in 1,386 days as President. In fall 2020, after analyzing 38 million English-language articles about the pandemic, researchers at Cornell University concluded that Trump was the largest driver of COVID-19 misinformation (Coronavirus Misinformation: Quantifying Sources and Themes in the COVID-19 "Infodemic").

Around the same time, Facebook was also dealing with a major report from the international technology watchdog organization Avaaz, which concluded that the spread of health-related misinformation on Facebook was a major threat to users' health and well-being (Facebook's Algorithm: A Major Threat to Public Health, August 19, 2020). Avaaz researchers found that only 16% of health misinformation on Facebook carried a warning label and estimated that such misinformation had received 3.8 billion views in the past year. Facebook responded by stating that it had placed warning labels on 98 million pieces of COVID-19 misinformation.

The extensive reach of social media raises a question: how much influence should Facebook, Twitter, and other powerful technology companies have on political information, elections, and public policy?

Policymakers and citizens alike must decide whether Facebook and other social media companies are like the telephone company, which does not monitor what is said on its lines, or are media companies, like a newspaper or magazine, with a responsibility to monitor and control the truthfulness of what they post online.

Suggested Learning Activities

- Design a political advertisement to post online
  - Students design a political ad to post on different social media sites: Facebook, Twitter, Snapchat, and TikTok.
  - As a class, vote on the most influential ads.
  - Discuss as a group what made those ads so influential.
- State your view
  - Students write an editorial or op-ed that responds to one or more of the following prompts:
    - What responsibility do technology companies have to evaluate the political content that appears on their social media platforms?
    - What responsibility do major companies and firms have when ads for their products run on the YouTube channels or Twitter feeds of extremist political groups? Should they pull those ads from those sites?
    - Should technology companies post fact-checks of ads running on their platforms?

Online Resources for Political Content on Social Media Sites

Standard 7.6 Conclusion

To support media literacy learning, INVESTIGATE asked students to analyze the point of view and evaluate the claims of opinion pieces about public issues, many of which are published on social media platforms. UNCOVER explored the emergence of deepfakes and fake profiles as features of political messaging. ENGAGE examined issues related to regulating the political content posted on Facebook and other social media sites. Together, these modules highlight a central tension: under the principle of free speech on which our democratic system is based, people are free to express their views. At the same time, a civil society and its online media cannot accept hateful language, deliberately false information, and extremist political views and policies as true and factual.