6.3: The nature of lexical meaning


- Catherine Anderson, Bronwyn Bjorkman, Derek Denis, Julianne Doner, Margaret Grant, Nathan Sanders, and Ai Taniguchi

- eCampusOntario


Lexical knowledge vs. world knowledge

We are now ready to address the big question of this chapter: what is lexical meaning, anyway? The nature of lexical meaning is still under considerable debate. We will outline a few theories of lexical meaning here.

To appreciate how difficult the question “what is word meaning?” is, let’s first look at an example: the English word chair (the one that refers to a piece of furniture). There are lots of things that you know about chairs, based on your life experience. In other words, you have a lot of world knowledge (sometimes also called encyclopedic knowledge) about chairs. World knowledge is a part of your general cognition, which is the collection of mental processes used for gaining new knowledge. The concept of chair that you have in your mind is a subset of this world knowledge. Concepts are building blocks of thought, so your concept of chair is your abstract understanding of what a chair is. The linguistic expression chair is connected in some way to a group of concepts; this connection is what lexical meaning, or sense, is.

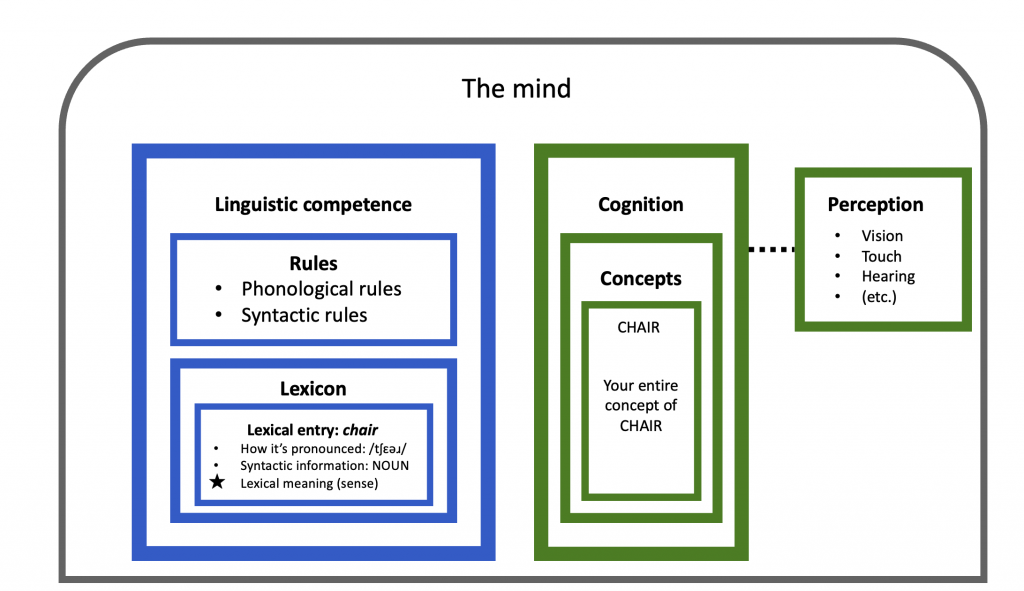

Now, the question is what the relationship is between the lexical entry for chair and your concept of chairs. One basic question we must ask is whether lexical meanings and concepts are separate things, or whether lexical meanings are concepts. If we say that they are separate things, the architecture of the mind might look something like Figure \(\PageIndex{1}\). The star in the image indicates what “sense” is under this theory.

Under this approach, the lexical entry for chair would come with its phonological information (how it’s pronounced or signed), its syntactic information (syntactic category), and its semantic information: the lexical meaning, or sense. One way to think about it is that chair would come with a “definition” of sorts. Earlier works in lexical semantics tended to take this kind of “meanings as definitions” approach, including Jerrold J. Katz and colleagues’ work from the 1960s and 1970s (Katz and Fodor 1963, Katz and Postal 1964, Katz 1972). This approach assumes that the definition is purely linguistic in nature. The definition would be consistent with your concept of chairs: what you know about chairs informs the definition. But strictly speaking, the meaning itself is not a concept. If we assume a separation of lexical knowledge and world knowledge like this, we might think that things like “it’s a countable object”, “it’s for sitting on”, “it has a back”, “it has a seat”, and so on would be included as part of the lexical knowledge. Non-lexical world knowledge of chairs might include things like “you can stand on it”, “it’s often made out of wood,” “it can be bought”, “people usually buy matching chairs for their kitchen table”, “IKEA sells chairs,” “there’s a game where you play music and walk around chairs, and you have to sit in one when the music stops”, “I love chairs”, and so on. By traditional standards, non-lexical knowledge is usually thought to include things like the subjective feelings a word evokes, and properties that are described with non-universal qualifications like “usually” and “often”. However, the line between lexical knowledge and world knowledge is hard to draw, and there is no consensus about what counts as linguistic knowledge vs. world knowledge. Things like “people usually buy matching chairs for their kitchen table” certainly feel like they are not part of the “definition” of chair, but what about something like “it usually has four legs”?

Some linguists would argue that only the necessary and sufficient conditions for chair would be part of its lexical meaning: only enough information to distinguish it from other classes of objects. To use the terminology from 7.3, the lexical meaning would only include things that the statement x is a chair entails. So if x is a chair, then the necessary and sufficient conditions of chair might be describable as the following “checklist”:

- the purpose of x is to seat one person;

- x has a seat;

- x has a back.

To take another example, the necessary and sufficient conditions of doe might be:

- x is a deer (a kind of animal);

- x is an adult;

- x is female.

These are called “necessary and sufficient conditions” because each condition is necessary for distinguishing the doe category from other categories, and together the conditions are sufficient for the distinction (i.e., no more conditions need to be added). So for doe, it’s necessary that we specify “x is a deer”: without it, we would incorrectly include other adult female animals in the doe category, such as cows. “x is female” is also necessary; without it, we would incorrectly include other adult deer, like bucks. Likewise, “x is an adult” is needed to exclude young deer (fawns). Collectively, these three conditions are sufficient: we don’t need additional conditions like “x lives in a forest” (which would wrongly exclude the does that live in mountains). Together, then, the listed conditions are the minimal set needed for distinguishing does from non-does.
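The checklist view can be sketched in code: a category is a list of predicates, and membership means every predicate holds. This is a minimal illustration under our own assumptions (the `Entity` fields and the helpers `satisfies` and `necessary` are hypothetical, not part of any published formalism). The `necessary` helper mimics the reasoning above: drop one condition and check whether a counterexample such as a cow, a buck, or a fawn slips into the category.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Entity:
    """A toy description of an individual (attribute names are hypothetical)."""
    kind: str
    adult: bool
    female: bool


Condition = Callable[[Entity], bool]

# The checklist for "doe": one predicate per condition in the list above.
DOE: List[Condition] = [
    lambda x: x.kind == "deer",  # x is a deer
    lambda x: x.adult,           # x is an adult
    lambda x: x.female,          # x is female
]


def satisfies(conditions: List[Condition], x: Entity) -> bool:
    """Checklist membership: x is in the category iff every condition holds."""
    return all(cond(x) for cond in conditions)


def necessary(conditions: List[Condition], index: int, counterexample: Entity) -> bool:
    """True if dropping condition `index` wrongly lets `counterexample` in."""
    reduced = conditions[:index] + conditions[index + 1:]
    return satisfies(reduced, counterexample) and not satisfies(conditions, counterexample)


doe = Entity("deer", adult=True, female=True)
cow = Entity("cow", adult=True, female=True)
buck = Entity("deer", adult=True, female=False)
fawn = Entity("deer", adult=False, female=True)

print(satisfies(DOE, doe))      # True
print(satisfies(DOE, cow))      # False
print(necessary(DOE, 0, cow))   # True: without "deer", cows get in
print(necessary(DOE, 2, buck))  # True: without "female", bucks get in
print(necessary(DOE, 1, fawn))  # True: without "adult", fawns get in
```

Notice that every entity here is either fully in or fully out of the category; that sharp boundary is exactly what the next paragraph calls into question.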

The limitation of this “checklist” approach to lexical meaning is that the boundary between categories of things is not as sharp as the theory makes it out to be. Chair and doe might be relatively intuitive categories (well, personally, I have uncertainties about a chair requiring a back; try an online image search for “backless chair” in quotation marks, and see what you think!). But what about other things? What makes something a jacket vs. a coat? What does it mean for something to spin vs. rotate? How much moisture is required for you to call something moist? Is a hotdog a sandwich? Try searching the phrase “the cube rule” on the internet to see some people’s comically unsuccessful attempts at coming up with necessary and sufficient conditions for certain food categories.

Experiments show that people often do not have sharp judgments about categories of things. Consider the images in Figure \(\PageIndex{2}\) below, which are inspired by the stimuli from Labov’s (1973) study.

Your task is this: categorise each of the objects labeled 1 through 5 as a cup or a bowl. Most people agree that 1 is a cup, while 5 is a bowl. People are usually much less confident about the objects in 2 to 4, especially 3, and there tends to be disagreement about them. If we hypothesise that in our mental lexicon, there are clear necessary and sufficient conditions listed for cup, we should be able to categorise 1 through 5 as a cup or not, no problem. The fact that we cannot suggests that there’s more to meaning than listing minimal requirements for it. Interestingly, participants in an experiment like this find information like “what goes into this thing” helpful in the categorisation process. For example, if the object in 3 had mac and cheese in it, people would likely say that it’s a bowl. If it had tea in it, people are more willing to say that it’s a cup. It seems that category boundaries are fuzzy, and a lot more world knowledge goes into the lexical meaning of words than just “necessary and sufficient” conditions.
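Fuzzy-category approaches replace the yes/no checklist with graded membership. The sketch below uses a logistic curve over a container's width-to-depth ratio as a stand-in for that idea. The choice of width-to-depth ratio as the relevant dimension, the curve itself, and every number in it are our assumptions for illustration, not Labov's model or data. It reproduces the two observations above: objects in the middle of the series get scores near 0.5 rather than a clear verdict, and the contents of the container shift the boundary.

```python
import math
from typing import Optional

# Invented numbers: midpoint, steepness, and context offsets are illustrative only.
MIDPOINT = 1.8    # width-to-depth ratio where "cup" and "bowl" are equally likely
STEEPNESS = 3.0   # how quickly judgments flip around the midpoint
CONTEXT_SHIFT = {"tea": 0.5, "mac and cheese": -0.5}


def cup_score(width_to_depth: float, contents: Optional[str] = None) -> float:
    """Graded 'cup-ness' between 0 and 1 instead of a yes/no checklist verdict.

    Narrow, deep containers score near 1; wide, shallow ones near 0.
    A drink moves the boundary toward "cup", solid food toward "bowl".
    """
    boundary = MIDPOINT + CONTEXT_SHIFT.get(contents or "", 0.0)
    return 1.0 / (1.0 + math.exp(STEEPNESS * (width_to_depth - boundary)))


# Objects 1-5, imagined as getting steadily wider relative to their depth.
for label, ratio in enumerate([1.0, 1.4, 1.8, 2.2, 2.6], start=1):
    print(label, round(cup_score(ratio), 2))

# The same middle object, described with different contents.
print(round(cup_score(1.8, "tea"), 2), round(cup_score(1.8, "mac and cheese"), 2))
```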

Are lexical meanings concepts?

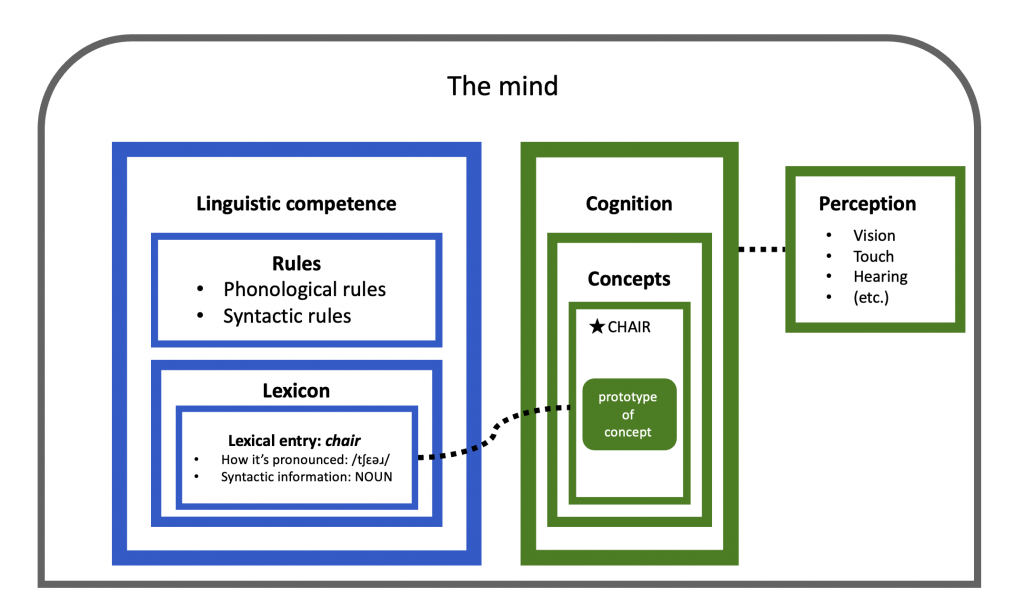

The first theory of lexical meaning that we examined (in Figure \(\PageIndex{3}\)) assumed that lexical meaning is a distinct thing from concepts. A competing theory of lexical meaning is that lexical meanings are concepts. In other words, in this approach, the listeme chair in the lexicon does not have a “definition” in the lexical entry — the concept that is associated with chair is the “meaning”. Figure 7.6 illustrates one way in which this kind of approach can work. Once again, the star in the diagram indicates what the “sense” of the word is under this approach. Try comparing it with Figure \(\PageIndex{3}\) from earlier.

This version of the “concepts as meaning” theory is what we might call the maximalist approach: literally, the “meaning” of chair is every single thing you know about chairs, including things like “IKEA sells chairs” and “there’s a game where you play music and walk around chairs, and you have to sit in one when the music stops”. Ray Jackendoff’s (1976) Conceptual Semantics (CS) is one such theory. In Conceptual Semantics, only the phonology and the syntax of the listeme reside natively in the lexicon. The lexical entry doesn’t contain a separate semantics of the listeme; the meaning is the conceptual representation in your cognition that the listeme is tied to. One reason for theorising that our language faculty has access to our general cognitive faculty is that we use language to respond to things we perceive: we describe things we see, touch, hear, and so on.

An intermediate approach might lie somewhere between the “meaning as definition” approach and the “meaning as concepts” approach. In this kind of approach, listemes do have a semantic representation in their lexical entries (as in Figure \(\PageIndex{3}\)), but the lexical meaning has limited access to the cognitive conceptual module. Here is something all semanticists can agree on: there are linguistic phenomena that are sensitive to certain concepts.

For example, in English, I started a meal can mean ‘I started eating a meal’ or ‘I started preparing a meal’, while I started a book means ‘I started reading a book’ or ‘I started writing a book.’ The first meaning for each sentence refers to the intended purpose of the object, and the second meaning points to how the object came into being. We can see from data like this that words activate concepts like “purpose of the object” and “how the object came into being”. One way to analyse this in terms of the architecture of the mind is that the lexical meaning of meal, book, chair, cup, etc. (and, in fact, all nouns) on the linguistic competence side has a template that says “specify the intended purpose of this object” and “specify how this object comes into being”. The needed information is then retrieved from your concepts on the cognition side. This is roughly the approach that James Pustejovsky’s Generative Lexicon Theory (1995) takes, among others.
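The template idea can be sketched as a lexical entry with two slots that a verb like start can draw on to supply the missing activity. In Pustejovsky's own terms these slots roughly correspond to the telic (purpose) and agentive (origin) roles of a noun's qualia structure; the class, field names, and helper below are our own hypothetical simplification, not his formalism.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class NounEntry:
    """Toy lexical entry with two template slots (hypothetical field names)."""
    form: str
    purpose: str  # "specify the intended purpose of this object"
    origin: str   # "specify how this object comes into being"


# In the theory, slot values come from world knowledge; here they are
# simply hand-filled for two nouns.
LEXICON: Dict[str, NounEntry] = {
    "meal": NounEntry("meal", purpose="eating", origin="preparing"),
    "book": NounEntry("book", purpose="reading", origin="writing"),
}


def readings_of_start(noun: str) -> List[str]:
    """Spell out the implicit activity in 'I started a <noun>'."""
    entry = LEXICON[noun]
    return [f"I started {activity} a {entry.form}"
            for activity in (entry.purpose, entry.origin)]


print(readings_of_start("meal"))
# ['I started eating a meal', 'I started preparing a meal']
print(readings_of_start("book"))
# ['I started reading a book', 'I started writing a book']
```

The design point is that start itself stays simple; the ambiguity comes from the two slots in the noun's entry.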

Regardless of whether you think lexical meanings are concepts or not, one thing that neither approach has explained so far is prototype effects. We already saw in the “cup or bowl?” experiment that some things are “clearly” cups or “clearly” bowls, and some things are in the grey area. Even if you couldn’t tell me what exactly makes a cup a cup, you probably have intuitions about what a typical cup looks like: for many Canadians, a prototypical cup is probably like the one in Figure 7.8. A prototypical member of a category is very central to that category; a prototypical cup is a very cuppy cup. If I asked you to imagine a chair, what do you immediately think of? Many of you probably imagined something like Figure 7.9 (a prototypical chair), rather than something like Figure \(\PageIndex{6}\) (a non-prototypical chair).

If I asked you to name 5 bird species, what would you write down? Typically, things like robin and sparrow are at the top of the list for many people, but birds like penguin are not named as frequently. These kinds of experiments, made famous by Eleanor Rosch, suggest that our minds organise concepts by prototypicality (Rosch 1973, 1975, among others).

If we assume the “meanings are concepts” approach to lexical meaning, then we might modify the architecture of the mind to look like this:

Under the kind of theory illustrated in Figure \(\PageIndex{7}\), we would still assume that the lexical meaning of chair is the concept associated with chair, but this concept would have more structure to it. Previously, the concept of chair was an unorganized collection of what you know about chairs. So, “musical chairs is a game that involves chairs” and “sometimes chairs have one leg” would have been just as important as something like “the purpose of a chair is to seat one person”. Under a prototype theory of concepts, the concept of chair would be organized by prototypicality, with the prototype at the “core” of the concept. This means that at the “center” of what you know about chairs would be, for example, the fact that a chair is for seating one person, and that it typically has four legs. What’s at the center of the concept would have more prominence in its organization; in other words, it would be considered more important for what it means for something to be a chair. Less central information, like the fact that musical chairs is a game involving chairs, or that some chairs have one leg, would be farther away from this conceptual core. This accounts for why we tend to think of the most prototypical example of a category when asked to name a member of that category.
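One way to picture a prototype-structured concept is as a set of features weighted by how central they are. This is a sketch under loud assumptions: the weights, the 0.5 cutoff for the "core", and the weighted-overlap typicality score are invented for illustration and are not measures used by Rosch or any other researcher cited here. The ordering of features follows the paragraph above.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Feature:
    description: str
    centrality: float  # hypothetical weight: 1.0 = conceptual core, near 0 = periphery


# The ranking follows the paragraph above; the numbers themselves are invented.
CHAIR: List[Feature] = [
    Feature("seats one person", 1.0),
    Feature("has four legs", 0.8),
    Feature("has one leg", 0.2),
    Feature("used in musical chairs", 0.1),
]


def core(concept: List[Feature], threshold: float = 0.5) -> List[str]:
    """Features at or above the threshold, most central first."""
    ranked = sorted(concept, key=lambda f: f.centrality, reverse=True)
    return [f.description for f in ranked if f.centrality >= threshold]


def typicality(concept: List[Feature], observed: Set[str]) -> float:
    """Share of the concept's total weight that an exemplar's features match."""
    total = sum(f.centrality for f in concept)
    matched = sum(f.centrality for f in concept if f.description in observed)
    return matched / total


kitchen_chair = {"seats one person", "has four legs"}
one_legged_chair = {"seats one person", "has one leg"}

print(core(CHAIR))  # ['seats one person', 'has four legs']
print(round(typicality(CHAIR, kitchen_chair), 2), round(typicality(CHAIR, one_legged_chair), 2))
```

Both exemplars count as chairs, but the one matching the core features scores as more typical, which is the kind of graded effect prototype theory is meant to capture.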

Language, power, and our relationship with words

Regardless of what theory of lexical meaning we adopt, there is no denying that our individual lived experiences shape our relationship with words. This of course means that our experience in the world affects our understanding of word meaning (e.g., a fashion enthusiast’s understanding of the meaning of purse may be very different from a non-fashion-enthusiast’s!), but there are other effects, too. For example, in one study of 51 veterans, researchers found that veterans with PTSD (Post-Traumatic Stress Disorder) and veterans without PTSD process combat-related words differently (Khanna et al. 2017). In particular, in a Stroop test, where participants have to say the name of the color that a word is written in (instead of reading the word itself), veterans with PTSD (31 of the 51 participants) responded significantly more slowly to combat-related words than to more neutral words and to “threatening” words unrelated to combat. Veterans without PTSD (20 of the 51 participants) did not show a significant difference in their response times across categories of words. The researchers also examined the veterans’ brain activity during this kind of task, and found that veterans with PTSD had reduced activity in a part of the brain involved in emotional regulation. As discussed in Chapter 1, words are powerful, sometimes triggering strong emotional and physiological responses. Words that are non-traumatic to you may have a completely different effect on other people. This is something we might want to keep in mind when thinking about how words are used. The “literal” meaning of a word is not the only thing invoked when the word is uttered, and some words come with a lot of baggage, sometimes personal, sometimes more widespread as cross-generational trauma (especially for marginalized communities).
So if someone says “hey, that word is painful for me to hear/see,” let’s try not to dismiss their claim based only on the literal meaning of the word, or only on our personal perception that the word is unproblematic.

Whatever theory of lexical meaning you side with, what we do know is that certain pieces of information affect how a word behaves grammatically. For example, I drank the chair sounds distinctly odd compared to I drank the tea; this suggests that concepts concerning the state of matter of physical objects matter for language. In the sections that follow, we will investigate what other sorts of information language tends to care about across languages. This will help us answer questions about lexical meaning at a more global level: what categories of meanings are there in language?


References

Jackendoff, R. (1976). Toward an explanatory semantic representation. Linguistic Inquiry, 7(1):89–150.

Ježek, E. (2016). Lexical information. In The Lexicon: An Introduction. Oxford University Press.

Katz, J. J. (1972). Semantic Theory. Harper and Row, New York.

Katz, J. J. and Fodor, J. A. (1963). The structure of a semantic theory. Language, 39:170–210.

Katz, J. J. and Postal, P. M. (1964). An Integrated Theory of Linguistic Descriptions. MIT Press, Cambridge, MA.

Labov, W. (1973). The boundaries of words and their meanings. In Bailey, C.-J. and Shuy, R. W., editors, New Ways of Analyzing Variation in English, pages 340–371. Georgetown University Press, Washington, D.C.

Murphy, M. L. (2010). Lexical Meaning. Cambridge University Press, Cambridge.

Pustejovsky, J. (1995). The Generative Lexicon. MIT Press, Cambridge, MA.

Rosch, E. H. (1973). Natural categories. Cognitive Psychology, 4(3):328–350.

Rosch, E. (1975). Cognitive reference points. Cognitive Psychology, 7(4):532–547.

7.7: Countability

One or more interactive elements has been excluded from this version of the text. You can view them online here: https://ecampusontario.pressbooks.pub/essentialsoflinguistics2/?p=813#oembed-1

A fundamental aspect of nominal meaning is whether the entity is countable or not. Descriptively, nouns that are countable can be pluralized, can appear with numerals, and take the determiner many. All of the nouns in (1)-(3) (shirts, chairs, and cups) are called count nouns in English because they have these properties.

(1) I bought these shirts today.

(2) Beth needs three chairs in this room.

(3) There are so many cups on the shelf.

There is another class of nouns, like dirt, which cannot be pluralized in English. Nouns like dirt are called mass nouns. They often refer to substances or to entities that are otherwise considered to be a homogeneous group. For example, rice is also a mass noun in English: in principle, individual grains of rice can be counted, but linguistically rice behaves like a mass noun. Mass nouns resist pluralization, cannot take numerals, and take the determiner much rather than many. This is shown in (4)-(6).

(4) a. *That is a lot of dirts.

b. That is a lot of dirt.

(5) *Beth needs three muds for this garden.

(6) a. *There are so many rices in the rice cooker.

b. There is so much rice in the rice cooker.

Conceptually, count nouns are countable in the sense that, for example, if I have one cup on the table and then put another cup on the table, the result is two distinct, separate cup entities, and the boundary of each one is perceptible. We say that count nouns are bounded for this reason. A mass noun like dirt is different: if I have a pile of dirt on the table and add more dirt to it, I still have just one pile of dirt, only larger. So mass nouns are unbounded.

You may have noticed that the pluralised mass nouns in (4)-(6) can sound acceptable in certain contexts. For example, saying a lot of dirts, three muds, or many rices gives rise to a kind interpretation: a lot of kinds of dirt, three kinds of mud, and many kinds of rice. In this way, mass nouns can often be used in a “count” way.

Besides counting kinds of a mass noun, another way to make mass nouns countable is to put them into containers. For example, nouns like water and pudding denote homogeneous substances and are therefore fundamentally mass nouns, but when pluralised they have a fairly natural interpretation in which you are counting the containers that hold the substance. This is shown in (7)-(8).

(7) a. There is so much water in the sink. (mass)

b. Can we have two waters? (count, ‘two glasses of water’)

(8) a. There is a lot of pudding in this bowl. (mass)

b. There are four puddings in the fridge. (count, ‘four cups (containers) of pudding’)

The reverse is possible as well: in some contexts, fundamentally count nouns can be used in “mass” ways. Pumpkin, for example, is at the basic level a count noun, but if a truck carrying a bunch of pumpkins crashed on the highway and the smashed pumpkins got all over the road, you could use it in a “mass” way. This is shown in (9)-(10).

(9) There are many pumpkins on the truck. (count)

(10) The pumpkin truck crashed on the highway and there was so much pumpkin everywhere. (mass)

What we learn from these observations is that each noun encodes in its lexical entry whether it is a count noun or a mass noun. However, there also seems to be a rule in English by which a mass noun can be converted into a count noun, and vice versa.
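The description above (a lexical count/mass feature plus conversion rules in both directions) can be sketched as a small data type and a few functions. The field names, the `kind`/`container`/`substance` labels, and all function names are hypothetical; the sketch models only the English diagnostics in (1)-(10), not how any particular grammar formalism states these rules.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Noun:
    lemma: str
    count: bool             # lexically encoded: True = count, False = mass
    reading: str = "basic"  # which interpretation the noun currently has


def quantity_word(noun: Noun) -> str:
    """Count nouns take 'many'; mass nouns take 'much'."""
    return "many" if noun.count else "much"


def takes_numeral(noun: Noun) -> bool:
    """Only count nouns combine directly with numerals ('three chairs')."""
    return noun.count


def mass_to_count(noun: Noun, via: str) -> Noun:
    """Count kinds ('three muds') or containers ('two waters')."""
    if noun.count:
        raise ValueError(f"{noun.lemma} is already a count noun")
    if via not in ("kind", "container"):
        raise ValueError("via must be 'kind' or 'container'")
    return replace(noun, count=True, reading=via)


def count_to_mass(noun: Noun) -> Noun:
    """Treat a count noun as stuff ('so much pumpkin everywhere')."""
    if not noun.count:
        raise ValueError(f"{noun.lemma} is already a mass noun")
    return replace(noun, count=False, reading="substance")


water = Noun("water", count=False)
pumpkin = Noun("pumpkin", count=True)

print(quantity_word(water), takes_numeral(water))  # much False
waters = mass_to_count(water, via="container")
print(quantity_word(waters), waters.reading)       # many container
print(quantity_word(count_to_mass(pumpkin)))       # much
```

Keeping the basic value in the entry and deriving the other use by rule mirrors the claim that count/mass is lexically encoded yet convertible.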

Whether a noun is linguistically count or mass varies from language to language. Consider (11) and (12). In Halkomelem (Hul’qumi’num), an Indigenous language spoken by various First Nations peoples of the British Columbia Coast, fog can be readily pluralized, giving rise to the meaning ‘lots of fog’. In French, singular cheveu is interpreted as ‘a strand of hair,’ while cheveux, which is morphosyntactically marked plural, is interpreted as ‘a mass of hair,’ as in the hair on your head.

(11) Halkomelem

| tsel | kw’éts-lexw | te/ye | shweláthetel |
| --- | --- | --- | --- |
| 1sg.s | see-trans.3o | det/det.pl | fogs |

‘I’ve seen a lot of fog’ (Wiltschko 2008)

(12) a. French

| Il y a | un cheveu | dans | ma soupe |
| --- | --- | --- | --- |
| there.is | a hair | in | my soup |

‘There is a strand of hair in my soup’

b.

| Je | veux | me brosser | les cheveux |
| --- | --- | --- | --- |
| I | want | myself to.brush | the.pl hairs |

‘I want to brush my hair’

It is also worthwhile to note that some languages like Japanese do not have productive morphosyntactic plural marking. For example, (13) can be interpreted as ‘bring cups’ or ‘bring a cup’ depending on context.

(13) Japanese

| koppu | mottekite |
| --- | --- |
| cup | bring |

‘Bring cups / a cup’

Some linguists have analysed languages like Japanese as having only mass nouns (Chierchia 1998). This does not mean that you can never count things in Japanese. Japanese has a rich system of noun classifiers. Just as hair in English is counted as ‘one strand of hair,’ ‘two strands of hair,’ etc., Japanese has morphemes that attach to numerals to turn mass nouns into bounded bits. Which morpheme is used depends on the semantic classification of the noun being counted. Consider the data in (14).

(14) Japanese

a.

| kami | 3-mai | kudasai |
| --- | --- | --- |
| paper | 3-CL | give |

‘Please give me 3 pieces of paper’ (Classifier: thin sheets)

b.

| enpitsu | 2-hon | kudasai |
| --- | --- | --- |
| pencil | 2-CL | give |

‘Please give me 2 pencils’ (Classifier: long cylindrical objects)

c.

| kuruma | 1-dai | kudasai |
| --- | --- | --- |
| car | 1-CL | give |

‘Please give me 1 car’ (Classifier: object with mechanical parts)

d.

| neko | 12-hiki | kudasai |
| --- | --- | --- |
| cat | 12-CL | give |

‘Please give me 12 cats’ (Classifier: smallish quadrupedal animals)

e.

| tori | 4-wa | kudasai |
| --- | --- | --- |
| bird | 4-CL | give |

‘Please give me 4 birds’ (Classifier: animals with wings)

f.

| tomato | 5-tsu / 5-ko | kudasai |
| --- | --- | --- |
| tomato | 5-CL / 5-CL | give |

‘Please give me 5 tomatoes’ (Classifier: general)

The morpheme glossed as “CL” (for “classifier”) in each sentence is the classifier. The point is that saying something like ‘2 pencils’ without a classifier (*2 enpitsu or *enpitsu 2) is ungrammatical in Japanese: you must use a classifier when counting things. Nouns in Japanese are categorised into grammatical groups that are roughly semantically based. For example, “thin, flat objects” forms a category whose typical members include paper, posters, and pizza. Nouns in this class use the classifier -mai, as in (14a). The classifier -hon in (14b) is typically used for long, thin, often cylindrical objects like pencils, pens, and drinking straws. -dai in (14c) is used for mechanical objects with perceptible parts (e.g., cars, trucks, and computers), -hiki in (14d) for smaller quadrupedal mammals (e.g., dogs, cats, hamsters), and -wa in (14e) for animals with wings (e.g., chicken, sparrow, eagle). -tsu and -ko are the “elsewhere” classifiers that can be used for a more heterogeneous group of inanimate nouns that don’t fall into a specific class (e.g., tomatoes, pebbles, cushions). Some members of a noun class can be surprising: for example, usagi ‘rabbit’ takes the classifier typically used for animals with wings (-wa), likely because rabbits’ ears are perceived to be like wings. ke:ki ‘cake’ takes the classifier -dai for “mechanical objects with perceptible parts” if it is whole, and -kire for “slices” or -pi:su for “pieces” if it is counted by the slice.
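Classifier choice can be sketched as a lookup table with an elsewhere default plus listed exceptions. This is a toy under stated assumptions: the table covers only nouns mentioned above, `count_phrase` is a hypothetical helper, and it writes numerals as digits the way (14) does, ignoring the sound changes that some numeral-classifier combinations undergo (for example, 1 + -hon is pronounced ippon).

```python
from typing import Dict

# A hand-built fragment of the noun-to-classifier pairings described above;
# the mapping and helper are hypothetical, and a real lexicon is far larger.
CLASSIFIER: Dict[str, str] = {
    "kami": "mai",     # thin, flat objects (paper)
    "enpitsu": "hon",  # long, thin, cylindrical objects (pencil)
    "kuruma": "dai",   # mechanical objects with perceptible parts (car)
    "neko": "hiki",    # smaller quadrupedal mammals (cat)
    "tori": "wa",      # animals with wings (bird)
    "usagi": "wa",     # listed exception: rabbit patterns with winged animals
}
ELSEWHERE = "ko"  # general classifier; the text also lists -tsu


def count_phrase(noun: str, n: int, whole: bool = True) -> str:
    """Build '<noun> <n>-<classifier>' in the style of the examples in (14)."""
    if noun == "ke:ki":
        # Cake: the classifier depends on whether it is whole or sliced.
        classifier = "dai" if whole else "kire"
    else:
        classifier = CLASSIFIER.get(noun, ELSEWHERE)
    return f"{noun} {n}-{classifier}"


print(count_phrase("enpitsu", 2))             # enpitsu 2-hon
print(count_phrase("usagi", 3))               # usagi 3-wa
print(count_phrase("tomato", 5))              # tomato 5-ko
print(count_phrase("ke:ki", 1, whole=False))  # ke:ki 1-kire
```

Surprising members like usagi are simply listed in the table, much as a speaker has to learn them item by item, while unlisted inanimate nouns fall through to the elsewhere classifier.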

How to be a linguist: Context matters!

We see from the discussion of the mass/count distinction that the context matters when you are making (or asking for) acceptability judgments about sentences. We saw in the previous “How to be a linguist” that what’s felicitous or infelicitous is often very informative of the semantics of a word. Here’s another tip. When making (or asking for) acceptability judgments, be very careful about what’s actually well-formed vs. ill-formed. For example, what do you think about (15)?

(15) I swam in vanilla extract.

You might have the intuition that there is something unusual about this sentence — but should we conclude from this that this is a semantically bad sentence? Hold on! Not quite. (15) might sound odd out of the blue because based on your world knowledge, you know that vanilla extract is usually used in small amounts — not a large enough quantity to swim in. However, with a proper context like in (16), this is a perfectly natural thing to say:

(16) (Context: I work at a vanilla extract factory, and while I was examining the quality of the product, I fell in a 4000-gallon tank of vanilla extract.)

I swam in vanilla extract.

The moral of the story: make sure you think carefully about the context when examining data. Is the sentence bad in all contexts, or just certain ones? In fact, the difference that the context makes may be quite informative about what a linguistic expression means. Consider (17) and (18): same sentence, same emoji, but different contexts.

(17) (Context: Your friend’s dog did a cute and funny trick at a dog show)

Your dog was so cute 😂

(18) (Context: At the funeral service for your friend’s cute dog that passed away)

#Your dog was so cute 😂

Your dog was so cute without the emoji would be fine in both (17) and (18); the addition of the emoji is what matters. For many readers, the emoji is infelicitous in (18). This tells us something about the meaning of this particular emoji: it doesn’t mean SAD-crying! The context in (17), where the emoji is felicitous, suggests that it means LAUGHING-crying: it perhaps means that something is funny. Try this same-sentence-different-context approach yourself with the upside-down smiley emoji, 🙃. What does it mean? When do you use it? Back up your intuition with an example sentence. Construct a sentence with this emoji, and come up with two different contexts: one in which the emoji is felicitous, and another one in which the emoji is infelicitous. If this emoji is not a part of your lexicon, do the same exercise but ask someone else who uses the emoji for an acceptability judgment: “Is this sentence natural in this context? How about this context?” Can you infer from their response what sentiment the upside-down smiley indicates? (See “Check your understanding” at the end of this section for a sample answer.)

This kind of approach to meaning may be useful whenever you encounter a new word (either in your first language or in additional language(s)!). Instead of just asking “What does this word mean?”, consider asking linguist questions like “In what kinds of contexts do you use this word? Where can you not use it?” This will give you a more nuanced picture of the meaning of that word!

Check your understanding


References

Chierchia, G. (1998). Reference to kinds across language. Natural Language Semantics, 6(4):339–405.

Wiltschko, M. (2008). The syntax of non-inflectional plural marking. Natural Language & Linguistic Theory, 26(3):639–694.

Lexical Semantics, from Sarah Harmon

Video Script

Catherine Anderson does a really great job of setting up lexical semantics, but there are a few more pieces that I want to cover. One has to do with the connections between reference, meaning a word or phrase, and the meanings it is associated with.

Almost assuredly you have heard of synonyms before. They are the quintessential proof that language is arbitrary, because if you have different terms for the same or similar meaning, that's arbitrariness.

Antonyms: you've almost assuredly heard of them before. There are three different kinds of antonymy: complementary, gradable, and relational. Complementary antonyms are this or that: on/off, alive/dead (sorry, zombie fans, there's nothing in between!). Gradable antonyms are the opposite; they lie along a gradation or scale: hot/cold, with a whole bunch of other temperatures in between. Relational antonymy has to do with some kind of relationship: employer/employee, parent/child, teacher/student.
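The three kinds of antonymy can be stated as simple checks over toy data. This sketch is our own addition to the lecture: the predicate names, the toy domains, and the temperature cut-offs are hypothetical. Complementary pairs leave no middle ground, gradable pairs exclude each other but leave a middle, and relational pairs are converses of one another.

```python
from itertools import product
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def is_complementary(p: Callable[[T], bool], q: Callable[[T], bool],
                     domain: Iterable[T]) -> bool:
    """Complementary: exactly one of p, q holds of every item (no middle)."""
    return all(p(x) != q(x) for x in domain)


def is_gradable(p: Callable[[T], bool], q: Callable[[T], bool],
                domain: Iterable[T]) -> bool:
    """Gradable: p and q never both hold, but some item is neither."""
    items = list(domain)
    return (all(not (p(x) and q(x)) for x in items)
            and any(not p(x) and not q(x) for x in items))


def is_relational(r1: Callable[[str, str], bool], r2: Callable[[str, str], bool],
                  people: List[str]) -> bool:
    """Relational: r1(a, b) holds exactly when r2(b, a) does (a converse pair)."""
    return all(r1(a, b) == r2(b, a) for a, b in product(people, repeat=2))


# Hypothetical toy data for each kind of pair.
LIVING = {"cat": True, "fern": True, "fossil": False}
EMPLOYS = {("Ana", "Ben")}  # Ana employs Ben


def alive(x: str) -> bool:
    return LIVING[x]


def dead(x: str) -> bool:
    return not LIVING[x]


def hot(celsius: int) -> bool:
    return celsius >= 30  # invented cut-off


def cold(celsius: int) -> bool:
    return celsius <= 10  # invented cut-off


def employer_of(a: str, b: str) -> bool:
    return (a, b) in EMPLOYS


def employee_of(a: str, b: str) -> bool:
    return (b, a) in EMPLOYS


temperatures = [0, 20, 40]
print(is_complementary(alive, dead, LIVING))      # True
print(is_complementary(hot, cold, temperatures))  # False: 20 is neither
print(is_gradable(hot, cold, temperatures))       # True
print(is_relational(employer_of, employee_of, ["Ana", "Ben"]))  # True
```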

Homophones: you may have heard them called homonyms before, but homophones is what we say in linguistics. They have the same sound, but two completely unrelated backgrounds or origins. I talked about this before in morphology, with the word bank; that word has two different lexical entries and they're unrelated. One is the financial institution, and the other is the side of a river. Seal is another one, whether you're talking about the animal or the thing you use to close a document or paper. That is a homophone because the two have completely different meanings and completely different origins.

There are three more that I want to go through that you may or may not have heard of before. The first is polysemy. Polysemy is different from homophony; remember, homophony has to do with different origins. Polysemy, as the name implies, means the word has one origin but multiple related meanings: poly- = multiple, -semy = meaning. If I say the English term sheet, you think of something flat, thin, and flexible or pliable: a sheet of paper; a sheet of linen.

A hypernym is a broader, superordinate term that covers a set of more specific terms. Instead of talking about a specific kind of something, like a red, blue, pink, or purple thing, you talk about a color: ‘oh, it's got a lot of color’. Color is the hypernym; red, blue, pink, and purple are its hyponyms. Instead of talking about a tiger, lion, cheetah, leopard, lynx, or bobcat, you talk about felines. Feline is the hypernym, and each of those cats is a hyponym of it.
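Hypernymy and hyponymy are the two directions of a single relation, which a small lookup table makes concrete. In this sketch (hypothetical data and helper names; the feline-to-mammal link is added only to show that the relation chains), color is a hypernym of red, and red is a hyponym of color, but not the other way around.

```python
from typing import Dict, List

# Hypothetical mini-taxonomy: each word points to its immediate hypernym.
HYPERNYM_OF: Dict[str, str] = {
    "red": "color", "blue": "color", "pink": "color", "purple": "color",
    "tiger": "feline", "lion": "feline", "cheetah": "feline",
    "leopard": "feline", "lynx": "feline", "bobcat": "feline",
    "feline": "mammal",
}


def hypernyms(word: str) -> List[str]:
    """All hypernyms of word, nearest first (the relation is transitive)."""
    chain = []
    while word in HYPERNYM_OF:
        word = HYPERNYM_OF[word]
        chain.append(word)
    return chain


def is_hyponym(word: str, of: str) -> bool:
    """word is a hyponym of `of` if `of` appears among its hypernyms."""
    return of in hypernyms(word)


print(hypernyms("lynx"))           # ['feline', 'mammal']
print(is_hyponym("red", "color"))  # True
print(is_hyponym("color", "red"))  # False
```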

A metonym is a word that substitutes for something closely associated with it. Instead of talking about the King of Spain or the Queen of England, you talk about the Spanish crown or the English crown; that term crown is a metonym. Instead of talking about the action during the baseball game, you say the action on the diamond. That is a metonym because the diamond is the shape of the infield of a baseball field.

All six of these areas involve some aspect of compositionality, but especially arbitrariness. Whether it's the actual origin or the usage in any given moment, when you're using these six lexical semantic categories, you're talking about arbitrariness: the arbitrary pairing of the term and the meaning.