1.2: Research Methods in Social Psychology

Kwantlen Polytechnic University

Social psychologists are interested in the ways that other people affect thought, emotion, and behavior. To explore these concepts requires special research methods. Following a brief overview of traditional research designs, this module introduces how complex experimental designs, field experiments, naturalistic observation, experience sampling techniques, survey research, subtle and nonconscious techniques such as priming, and archival research and the use of big data may each be adapted to address social psychological questions. This module also discusses the importance of obtaining a representative sample along with some ethical considerations that social psychologists face.

Learning Objectives

- Describe the key features of basic and complex experimental designs.

- Describe the key features of field experiments, naturalistic observation, and experience sampling techniques.

- Describe survey research and explain the importance of obtaining a representative sample.

- Describe the implicit association test and the use of priming.

- Describe the use of archival research techniques.

- Explain five principles of ethical research that most concern social psychologists.

Introduction

Are you passionate about cycling? Norman Triplett certainly was. At the turn of the twentieth century he studied the lap times of cycling races and noticed a striking fact: riding in competitive races appeared to improve riders’ times by about 20 to 30 seconds per mile compared to when they rode the same courses alone. Triplett suspected that the riders’ enhanced performance could not be explained simply by the slipstream caused by other cyclists blocking the wind. To test his hunch, he designed what is widely described as the first experimental study in social psychology (published in 1898!), in which children reeled in a length of fishing line as fast as they could. The children were tested alone, then again when paired with another child. The results? The children who performed the task in the presence of others out-reeled those who did so alone.

Although Triplett’s research fell short of contemporary standards of scientific rigor (e.g., he eyeballed the data instead of measuring performance precisely; Stroebe, 2012), we now know that this effect, referred to as “social facilitation,” is reliable—performance on simple or well-rehearsed tasks tends to be enhanced when we are in the presence of others (even when we are not competing against them). To put it another way, the next time you think about showing off your pool-playing skills on a date, the odds are you’ll play better than when you practice by yourself. (If you haven’t practiced, maybe you should watch a movie instead!)

Research Methods in Social Psychology

One of the things Triplett’s early experiment illustrated is scientists’ reliance on systematic observation over opinion, or anecdotal evidence. The scientific method usually begins with observing the world around us (e.g., results of cycling competitions) and thinking of an interesting question (e.g., Why do cyclists perform better in groups?). The next step involves generating a specific testable prediction, or hypothesis (e.g., performance on simple tasks is enhanced in the presence of others). Next, scientists must operationalize the variables they are studying. This means they must figure out a way to define and measure abstract concepts. For example, the phrase “perform better” could mean different things in different situations; in Triplett’s experiment it referred to the amount of time (measured with a stopwatch) it took to wind a fishing reel. Similarly, “in the presence of others” in this case was operationalized as another child winding a fishing reel at the same time in the same room. Creating specific operational definitions like this allows scientists to precisely manipulate the independent variable, or “cause” (the presence of others), and to measure the dependent variable, or “effect” (performance)—in other words, to collect data. Clearly described operational definitions also help reveal possible limitations to studies (e.g., Triplett’s study did not investigate the impact of another child in the room who was not also winding a fishing reel) and help later researchers replicate them precisely.

Laboratory Research

As you can see, social psychologists have always relied on carefully designed laboratory environments to run experiments where they can closely control situations and manipulate variables (see the NOBA module on Research Designs for an overview of traditional methods). However, in the decades since Triplett discovered social facilitation, a wide range of methods and techniques have been devised, uniquely suited to demystifying the mechanics of how we relate to and influence one another. This module provides an introduction to the use of complex laboratory experiments, field experiments, naturalistic observation, survey research, nonconscious techniques, and archival research, as well as more recent methods that harness the power of technology and large data sets, to study the broad range of topics that fall within the domain of social psychology. At the end of this module we will also consider some of the key ethical principles that govern research in this diverse field.

The use of complex experimental designs, with multiple independent and/or dependent variables, has grown increasingly popular because they permit researchers to study both the individual and joint effects of several factors on a range of related situations. Moreover, thanks to technological advancements and the growth of social neuroscience, an increasing number of researchers now integrate biological markers (e.g., hormones) or use neuroimaging techniques (e.g., fMRI) in their research designs to better understand the biological mechanisms that underlie social processes.

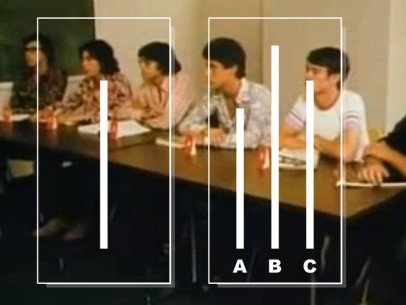

We can dissect the fascinating research of Dov Cohen and his colleagues (1996) on “culture of honor” to provide insights into complex lab studies. A culture of honor is one that emphasizes personal or family reputation. In a series of lab studies, the Cohen research team invited dozens of university students into the lab to see how they responded to aggression. Half were from the Southern United States (a culture of honor) and half were from the Northern United States (not a culture of honor; this type of setup constitutes a participant variable of two levels). Region of origin was independent variable #1. Participants also provided a saliva sample immediately upon arriving at the lab (they were given a cover story about how their blood sugar levels would be monitored over a series of tasks).

The participants completed a brief questionnaire and were then sent down a narrow corridor to drop it off on a table. En route, they encountered a confederate at an open file cabinet who pushed the drawer in to let them pass. When the participant returned a few seconds later, the confederate, who had re-opened the file drawer, slammed it shut and bumped into the participant with his shoulder, muttering “asshole” before walking away. In a manipulation of an independent variable—in this case, the insult—some of the participants were insulted publicly (in view of two other confederates pretending to be doing homework) while others were insulted privately (no one else was around). In a third condition—the control group—participants experienced a modified procedure in which they were not insulted at all.
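The design described so far crosses a participant variable (region of origin) with a manipulated variable (the insult condition). The following is a minimal Python sketch of how the resulting cells can be enumerated and how only the insult condition can be randomly assigned. The labels, the function name `assign_conditions`, and the four participants are hypothetical and are not taken from the original study.

```python
import itertools
import random

# Hypothetical labels for the two factors described in the text.
REGIONS = ["South", "North"]                           # participant variable (measured, not assigned)
INSULT_CONDITIONS = ["public", "private", "control"]   # manipulated variable

# Every combination of the two factors is one cell of the 2 x 3 design.
cells = list(itertools.product(REGIONS, INSULT_CONDITIONS))

def assign_conditions(participants, seed=0):
    """Randomly assign each participant to an insult condition.

    `participants` is a list of (participant_id, region) pairs. Region is
    recorded as-is because a researcher cannot assign where someone grew up.
    """
    rng = random.Random(seed)
    return [(pid, region, rng.choice(INSULT_CONDITIONS)) for pid, region in participants]

# Made-up participants for illustration only.
participants = [(1, "South"), (2, "North"), (3, "South"), (4, "North")]
assignments = assign_conditions(participants)

print(len(cells), "cells:", cells)
for row in assignments:
    print(row)
```

Because region cannot be assigned by the researcher, any regional difference in such a design is correlational, whereas differences between insult conditions can be interpreted causally.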

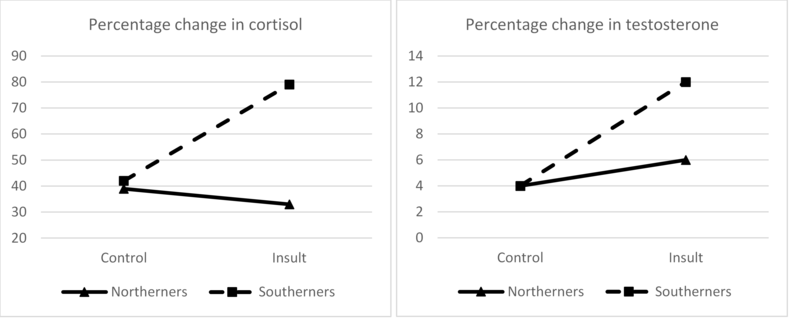

Although this is a fairly elaborate procedure on its face, what is particularly impressive is the number of dependent variables the researchers were able to measure. First, in the public insult condition, the two additional confederates (who observed the interaction, pretending to do homework) rated the participants’ emotional reaction (e.g., anger, amusement, etc.) to being bumped into and insulted. Second, upon returning to the lab, participants in all three conditions were told they would later undergo electric shocks as part of a stress test, and were asked how much of a shock they would be willing to receive (between 10 volts and 250 volts). This decision was made in front of two confederates who had already chosen shock levels of 75 and 25 volts, presumably providing an opportunity for participants to publicly demonstrate their toughness. Third, across all conditions, the participants rated the likelihood of a variety of ambiguously provocative scenarios (e.g., one driver cutting another driver off) escalating into a fight or verbal argument. And fourth, in one of the studies, participants provided two further saliva samples: one right after returning to the lab, and a final one after completing the questionnaire with the ambiguous scenarios. Later, all three saliva samples (including the one taken on arrival) were tested for levels of cortisol (a hormone associated with stress) and testosterone (a hormone associated with aggression).

The results showed that people from the Northern United States were far more likely to laugh off the incident (only 35% having anger ratings as high as or higher than amusement ratings), whereas the opposite was true for people from the South (85% of whom had anger ratings as high as or higher than amusement ratings). Also, only those from the South experienced significant increases in cortisol and testosterone following the insult (with no difference between the public and private insult conditions). Finally, no regional differences emerged in the interpretation of the ambiguous scenarios; however, the participants from the South were more likely to choose to receive a greater shock in the presence of the two confederates.

Field Research

Because social psychology is primarily focused on the social context (groups, families, cultures), researchers commonly leave the laboratory to collect data on life as it is actually lived. To do so, they use a variation of the laboratory experiment, called a field experiment. A field experiment is similar to a lab experiment except it uses real-world situations, such as people shopping at a grocery store. One of the major differences between field experiments and laboratory experiments is that the people in field experiments do not know they are participating in research, so, in theory, they will act more naturally. In a classic example from 1972, Alice Isen and Paula Levin wanted to explore the ways emotions affect helping behavior. To investigate this they observed the behavior of people at pay phones (I know! Pay phones!). Half of the unsuspecting participants (determined by random assignment) found a dime planted by researchers (I know! A dime!) in the coin slot, while the other half did not. Presumably, finding a dime felt surprising and lucky and gave people a small jolt of happiness. Immediately after the unsuspecting participant left the phone booth, a confederate walked by and dropped a stack of papers. Almost 100% of those who found a dime helped to pick up the papers. And what about those who didn’t find a dime? Only 1 out of 25 of them bothered to help.
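Random assignment of the kind used in this field experiment can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `randomize_half` and the schedule length of 40 are assumptions, not details from Isen and Levin's procedure. The last line converts the "1 out of 25" helping rate reported above into a percentage.

```python
import random

def randomize_half(n_participants, seed=None):
    """Return a shuffled list of condition labels, half 'dime' and half 'no dime'."""
    half = n_participants // 2
    labels = ["dime"] * half + ["no dime"] * (n_participants - half)
    random.Random(seed).shuffle(labels)  # the order decides who finds a planted dime
    return labels

# The number 40 is an arbitrary choice for illustration, not the study's sample size.
schedule = randomize_half(40, seed=1)
print(schedule[:5])
print(schedule.count("dime"), "with dime,", schedule.count("no dime"), "without")

# Helping rate in the no-dime group as reported in the text: 1 out of 25.
no_dime_help_rate = 1 / 25
print(f"no-dime helping rate: {no_dime_help_rate:.0%}")
```

Shuffling a balanced list, rather than flipping a coin per person, guarantees equal group sizes while still leaving each individual's condition to chance.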

In cases where it’s not practical or ethical to randomly assign participants to different experimental conditions, we can use naturalistic observation—unobtrusively watching people as they go about their lives. Consider, for example, a classic demonstration of the “basking in reflected glory” phenomenon: Robert Cialdini and his colleagues used naturalistic observation at seven universities to confirm that students are significantly more likely to wear clothing bearing the school name or logo on days following wins (vs. draws or losses) by the school’s varsity football team (Cialdini et al., 1976). In another study, by Jenny Radesky and her colleagues (2014), 40 out of 55 observations of caregivers eating at fast food restaurants with children involved a caregiver using a mobile device. The researchers also noted that caregivers who were most absorbed in their device tended to ignore the children’s behavior, followed by scolding, issuing repeated instructions, or using physical responses, such as kicking the children’s feet or pushing away their hands.

A group of techniques collectively referred to as experience sampling methods represents yet another way of conducting naturalistic observation, often by harnessing the power of technology. In some cases, participants are notified several times during the day by a pager, wristwatch, or a smartphone app to record data (e.g., by responding to a brief survey or scale on their smartphone, or in a diary). For example, in a study by Reed Larson and his colleagues (1994), mothers and fathers carried pagers for one week and reported their emotional states when beeped at random times during their daily activities at work or at home. The results showed that mothers reported experiencing more positive emotional states when away from home (including at work), whereas fathers showed the reverse pattern. A more recently developed technique, known as the electronically activated recorder, or EAR, does not even require participants to stop what they are doing to record their thoughts or feelings; instead, a small portable audio recorder or smartphone app is used to automatically record brief snippets of participants’ conversations throughout the day for later coding and analysis. For a more in-depth description of the EAR technique and other experience-sampling methods, see the NOBA module on Conducting Psychology Research in the Real World.
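To make the idea of being "beeped at random times" concrete, here is a minimal Python sketch of a signal schedule for one day. The waking window (08:00 to 22:00), the six prompts, and the function name `random_beep_times` are assumptions chosen for illustration; they do not describe the schedule used by Larson and colleagues.

```python
import random
from datetime import datetime, timedelta

def random_beep_times(day_start, day_end, n_beeps, seed=None):
    """Pick n_beeps distinct random minutes between day_start and day_end, sorted."""
    rng = random.Random(seed)
    total_minutes = int((day_end - day_start).total_seconds() // 60)
    offsets = sorted(rng.sample(range(total_minutes), n_beeps))
    return [day_start + timedelta(minutes=m) for m in offsets]

# Hypothetical waking window and number of prompts; neither comes from the study.
start = datetime(2024, 1, 1, 8, 0)
end = datetime(2024, 1, 1, 22, 0)
beeps = random_beep_times(start, end, n_beeps=6, seed=42)

for t in beeps:
    print(t.strftime("%H:%M"))
```

Because participants cannot anticipate the prompts, the reports they give are less likely to be tied to particular planned activities.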

Survey Research

In this diverse world, survey research offers itself as an invaluable tool for social psychologists to study individual and group differences in people’s feelings, attitudes, or behaviors. For example, the World Values Survey II was based on large representative samples of 19 countries and allowed researchers to determine that the relationship between income and subjective well-being was stronger in poorer countries (Diener & Oishi, 2000). In other words, an increase in income has a much larger impact on your life satisfaction if you live in Nigeria than if you live in Canada. In another example, a nationally representative survey in Germany with 16,000 respondents revealed that holding cynical beliefs is related to lower income (e.g., between 2003 and 2012 the income of the least cynical individuals increased by $300 per month, whereas the income of the most cynical individuals did not increase at all). Furthermore, survey data collected from 41 countries revealed that this negative correlation between cynicism and income is especially strong in countries where people in general engage in more altruistic behavior and tend not to be very cynical (Stavrova & Ehlebracht, 2016).

Of course, obtaining large, cross-cultural, and representative samples has become far easier since the advent of the internet and the proliferation of web-based survey platforms—such as Qualtrics—and participant recruitment platforms—such as Amazon’s Mechanical Turk. And although some researchers harbor doubts about the representativeness of online samples, studies have shown that internet samples are in many ways more diverse and representative than samples recruited from human subject pools (e.g., with respect to gender; Gosling et al., 2004). Online samples also compare favorably with traditional samples on attentiveness while completing the survey, reliability of data, and proportion of non-respondents (Paolacci et al., 2010).

Subtle/Nonconscious Research Methods

The methods we have considered thus far (field experiments, naturalistic observation, and surveys) work well when the thoughts, feelings, or behaviors being investigated are conscious and directly or indirectly observable. However, social psychologists often wish to measure or manipulate elements that are involuntary or nonconscious, such as when studying prejudicial attitudes people may be unaware of or embarrassed by. A good example of a technique that was developed to measure people’s nonconscious (and often ugly) attitudes is known as the implicit association test (IAT) (Greenwald et al., 1998). This computer-based task requires participants to sort a series of stimuli (as rapidly and accurately as possible) into simple and combined categories while their reaction time is measured (in milliseconds). For example, an IAT might begin with participants sorting the names of relatives (such as “Niece” or “Grandfather”) into the categories “Male” and “Female,” followed by a round of sorting the names of disciplines (such as “Chemistry” or “English”) into the categories “Arts” and “Science.” A third round might combine the earlier two by requiring participants to sort stimuli into either “Male or Science” or “Female or Arts” before the fourth round switches the combinations to “Female or Science” and “Male or Arts.” If across all of the trials a person is quicker at accurately sorting incoming stimuli into the compound category “Male or Science” than into “Female or Science,” the authors of the IAT suggest that the participant likely has a stronger association between males and science than between females and science. Incredibly, this specific gender-science IAT has been completed by more than half a million participants across 34 countries, about 70% of whom show an implicit stereotype associating science with males more than with females (Nosek et al., 2009). What’s more, when the data are grouped by country, national differences in implicit stereotypes predict national differences in the achievement gap between boys and girls in science and math. Our automatic associations, apparently, carry serious societal consequences.
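The logic of comparing reaction times across the two combined rounds can be sketched in Python. This is a deliberately simplified illustration, not the published IAT scoring procedure: it takes the difference between mean latencies in the two combined blocks and also scales it by the standard deviation of all trials. The function name `simple_iat_score` and every latency below are made up.

```python
from statistics import mean, stdev

def simple_iat_score(first_block_ms, second_block_ms):
    """Return (mean difference in ms, difference scaled by the SD of all trials).

    Positive values mean slower responses in the second block, which would be
    read as a stronger association for the pairing used in the first block.
    """
    diff = mean(second_block_ms) - mean(first_block_ms)
    pooled_sd = stdev(list(first_block_ms) + list(second_block_ms))
    return diff, diff / pooled_sd

# Made-up reaction times (milliseconds) for one hypothetical participant.
male_science_block = [650, 700, 620, 680, 710]    # "Male or Science" pairing
female_science_block = [820, 790, 860, 800, 830]  # "Female or Science" pairing

diff_ms, scaled = simple_iat_score(male_science_block, female_science_block)
print(f"mean difference: {diff_ms:.0f} ms, scaled score: {scaled:.2f}")
```

Scaling by the spread of the latencies is one way to keep generally slow responders from producing larger scores simply because all of their reaction times are longer.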

Another nonconscious technique, known as priming, is often used to subtly manipulate behavior by activating or making more accessible certain concepts or beliefs. Consider the fascinating example of terror management theory (TMT), whose authors believe that human beings are (unconsciously) terrified of their mortality (i.e., the fact that, some day, we will all die; Pyszczynski et al., 2003). According to TMT, in order to cope with this unpleasant reality (and the possibility that our lives are ultimately essentially meaningless), we cling firmly to systems of cultural and religious beliefs that give our lives meaning and purpose. If this hypothesis is correct, one straightforward prediction would be that people should cling even more firmly to their cultural beliefs when they are subtly reminded of their own mortality.

In one of the earliest tests of this hypothesis, actual municipal court judges in Arizona were asked to set a bond for an alleged prostitute immediately after completing a brief questionnaire. For half of the judges the questionnaire ended with questions about their thoughts and feelings regarding the prospect of their own death. Incredibly, judges in the experimental group who were primed with thoughts about their mortality set a significantly higher bond than those in the control group ($455 vs. $50!), presumably because they were especially motivated to defend their belief system in the face of a violation of the law (Rosenblatt et al., 1989). Although the judges consciously completed the survey, what makes this a study of priming is that the second task (setting the bond) was unrelated, so any influence of the survey on their later judgments would have been nonconscious. Similar results have been found in TMT studies in which participants were primed to think about death even more subtly, such as by having them complete questionnaires just before or after they passed a funeral home (Pyszczynski et al., 1996).

To verify that the subtle manipulation (e.g., questions about one’s death) has the intended effect (activating death-related thoughts), priming studies like these often include a manipulation check following the introduction of a prime. For example, right after being primed, participants in a TMT study might be given a word fragment task in which they have to complete words such as COFF_ _ or SK _ _ L. As you might imagine, participants in the mortality-primed experimental group typically complete these fragments as COFFIN and SKULL, whereas participants in the control group complete them as COFFEE and SKILL.

The use of priming to unwittingly influence behavior, known as social or behavioral priming (Ferguson & Mann, 2014), has been at the center of the recent “replication crisis” in psychology (see the NOBA module on replication). Whereas earlier studies showed, for example, that priming people to think about old age makes them walk slower (Bargh, Chen, & Burrows, 1996), that priming them to think about a university professor boosts performance on a trivia game (Dijksterhuis & van Knippenberg, 1998), and that reminding them of mating motives (e.g., sex) makes them more willing to engage in risky behavior (Greitemeyer, Kastenmüller, & Fischer, 2013), several recent efforts to replicate these findings have failed (e.g., Harris et al., 2013; Shanks et al., 2013). Such failures to replicate findings highlight the need to ensure that both the original studies and replications are carefully designed and have adequate sample sizes, and that researchers pre-register their hypotheses and openly share their results, whether these support the initial hypothesis or not.

Archival Research

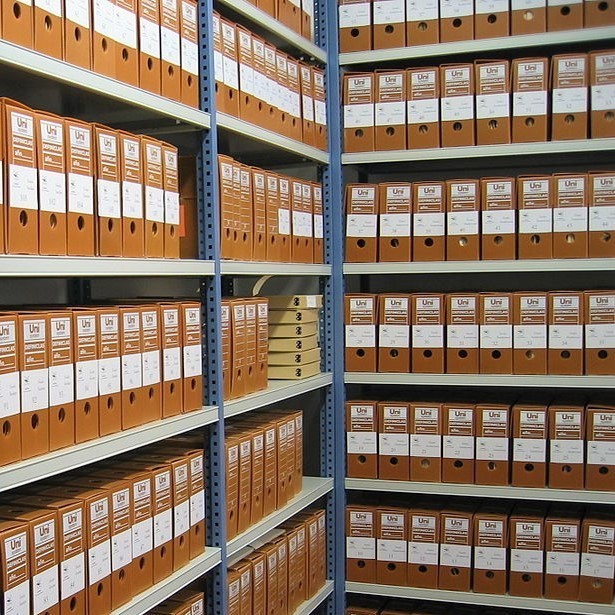

Imagine that a researcher wants to investigate how the presence of passengers in a car affects drivers’ performance. She could ask research participants to respond to questions about their own driving habits. Alternatively, she might be able to access police records of the number of speeding tickets issued by automatic camera devices, then count the number of solo drivers versus those with passengers. This would be an example of archival research. The examination of archives, statistics, and other records such as speeches, letters, or even tweets, provides yet another window into social psychology. Although this method is typically used as a type of correlational research design, due to the lack of control over the relevant variables, archival research shares the higher ecological validity of naturalistic observation. That is, the observations are conducted outside the laboratory and represent real-world behaviors. Moreover, because the archives being examined can be collected at any time and from many sources, this technique is especially flexible and often involves less expenditure of time and other resources during data collection.

Social psychologists have used archival research to test a wide variety of hypotheses using real-world data. For example, analyses of major league baseball games played during the 1986, 1987, and 1988 seasons showed that baseball pitchers were more likely to hit batters with a pitch on hot days (Reifman et al., 1991). Another study compared records of race-based lynching in the United States between 1882 and 1930 to the inflation-adjusted price of cotton during that time (a key indicator of the Deep South’s economic health), demonstrating a significant negative correlation between these variables. Simply put, there were significantly more lynchings when the price of cotton stayed flat, and fewer lynchings when the price of cotton rose (Beck & Tolnay, 1990; Hovland & Sears, 1940). This suggests that race-based violence is associated with the health of the economy.
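A negative correlation of the kind reported in these archival studies can be computed with a short Python function. The sketch below implements the standard Pearson correlation coefficient; the two lists are invented numbers chosen only to show a negative relationship and are not the historical records analyzed in the cited studies.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Invented yearly values for illustration only; these are NOT the historical records.
price_index = [10, 12, 9, 14, 11, 8]
event_count = [30, 22, 35, 15, 27, 40]

r = pearson_r(price_index, event_count)
print(f"r = {r:.2f}")
```

A coefficient below zero means that higher values of one variable tend to go with lower values of the other. Because archival data are correlational, such a coefficient describes an association and does not by itself show that one variable caused the other.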

More recently, analyses of social media posts have provided social psychologists with extremely large sets of data (“big data”) to test creative hypotheses. In an example of research on attitudes about vaccinations, Mitra and her colleagues (2016) collected over 3 million tweets sent by more than 32 thousand users over four years. Interestingly, they found that those who held (and tweeted) anti-vaccination attitudes were also more likely to tweet about their mistrust of government and beliefs in government conspiracies. Similarly, Eichstaedt and his colleagues (2015) used the language of 826 million tweets to predict community-level mortality rates from heart disease. That’s right: more anger-related words and fewer positive-emotion words in tweets predicted higher rates of heart disease.

In a more controversial example, researchers at Facebook attempted to test whether emotional contagion—the transfer of emotional states from one person to another—would occur if Facebook manipulated the content that showed up in its users’ News Feed (Kramer et al., 2014). And it did. When friends’ posts with positive expressions were concealed, users wrote slightly fewer positive posts (e.g., “Loving my new phone!”). Conversely, when posts with negative expressions were hidden, users wrote slightly fewer negative posts (e.g., “Got to go to work. Ugh.”). This suggests that people’s positivity or negativity can impact their social circles.

The controversial part of this study—which included 689,003 Facebook users and involved the analysis of over 3 million posts made over just one week—was the fact that Facebook did not explicitly request permission from users to participate. Instead, Facebook relied on the fine print in their data-use policy. And, although academic researchers who collaborated with Facebook on this study applied for ethical approval from their institutional review board (IRB), they apparently only did so after data collection was complete, raising further questions about the ethicality of the study and highlighting concerns about the ability of large, profit-driven corporations to subtly manipulate people’s social lives and choices.

Research Issues in Social Psychology

The Question of Representativeness

Along with our counterparts in the other areas of psychology, social psychologists have been guilty of largely recruiting samples of convenience from the thin slice of humanity—students—found at universities and colleges (Sears, 1986). This presents a problem when trying to assess the social mechanics of the public at large. Aside from being an overrepresentation of young, middle-class Caucasians, college students may also be more compliant and more susceptible to attitude change, have less stable personality traits and interpersonal relationships, and possess stronger cognitive skills than samples reflecting a wider range of age and experience (Peterson & Merunka, 2014; Visser, Krosnick, & Lavrakas, 2000). Put simply, these traditional samples (college students) may not be sufficiently representative of the broader population. Furthermore, considering that 96% of participants in psychology studies come from western, educated, industrialized, rich, and democratic countries (so-called WEIRD cultures; Henrich, Heine, & Norenzayan, 2010), and that the majority of these are also psychology students, the question of non-representativeness becomes even more serious.

Of course, when studying a basic cognitive process (like working memory capacity) or an aspect of social behavior that appears to be fairly universal (e.g., even cockroaches exhibit social facilitation!), a non-representative sample may not be a big deal. However, over time research has repeatedly demonstrated the important role that individual differences (e.g., personality traits, cognitive abilities, etc.) and culture (e.g., individualism vs. collectivism) play in shaping social behavior. For instance, even if we only consider a tiny sample of research on aggression, we know that narcissists are more likely to respond to criticism with aggression (Bushman & Baumeister, 1998); conservatives, who have a low tolerance for uncertainty, are more likely to prefer aggressive actions against those considered to be “outsiders” (de Zavala et al., 2010); countries where men hold the bulk of power in society have higher rates of physical aggression directed against female partners (Archer, 2006); and males from the southern part of the United States are more likely to react with aggression following an insult (Cohen et al., 1996).

Ethics in Social Psychological Research

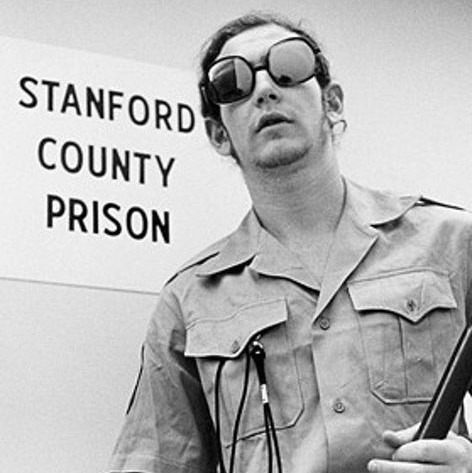

For better or worse (but probably for worse), when we think about the most unethical studies in psychology, we think about social psychology. Imagine, for example, encouraging people to deliver what they believe to be a dangerous electric shock to a stranger (with bloodcurdling screams for added effect!). This is considered a “classic” study in social psychology. Or, how about having students play the role of prison guards, deliberately and sadistically abusing other students in the role of prison inmates? Yep, social psychology too. Of course, both Stanley Milgram’s (1963) experiments on obedience to authority and the Stanford prison study (Haney et al., 1973) would be considered unethical by today’s standards, which have progressed with our understanding of the field. Today, we follow a series of guidelines and receive prior approval from our institutional review boards (IRBs) before beginning our studies. Among the most important principles are the following:

- Informed consent: In general, people should know when they are involved in research, and understand what will happen to them during the study (at least in general terms that do not give away the hypothesis). They are then given the choice to participate, along with the freedom to withdraw from the study at any time. This is precisely why the Facebook emotional contagion study discussed earlier is considered ethically questionable. Still, it’s important to note that certain kinds of methods—such as naturalistic observation in public spaces, or archival research based on public records—do not require obtaining informed consent.

- Privacy: Although it is permissible to observe people’s actions in public—even without them knowing—researchers cannot violate their privacy by observing them in restrooms or other private spaces without their knowledge and consent. Researchers also may not identify individual participants in their research reports (we typically report only group means and other statistics). With online data collection becoming increasingly popular, researchers also have to be mindful that they follow local data privacy laws, collect only the data that they really need (e.g., avoiding including unnecessary questions in surveys), strictly restrict access to the raw data, and have a plan in place to securely destroy the data after it is no longer needed.

- Risks and Benefits: People who participate in psychological studies should be exposed to risk only if they fully understand the risks and only if the likely benefits clearly outweigh those risks. The Stanford prison study is a notorious example of a failure to meet this obligation. It was planned to run for two weeks but had to be shut down after only six days because of the abuse suffered by the “prison inmates.” But even in less extreme cases, such as studies of implicit prejudice using the IAT, researchers need to consider the consequences of giving participants feedback about their nonconscious biases. Similarly, any manipulations that could potentially provoke serious emotional reactions (e.g., the culture of honor study described above) or relatively permanent changes in people’s beliefs or behaviors (e.g., attitudes towards recycling) need to be carefully reviewed by the IRB.

- Deception: Social psychologists sometimes need to deceive participants (e.g., using a cover story) to avoid demand characteristics by hiding the true nature of the study. This is typically done to prevent participants from modifying their behavior in unnatural ways, especially in laboratory or field experiments. For example, when Milgram recruited participants for his experiments on obedience to authority, he described it as being a study of the effects of punishment on memory! Deception is typically permitted only when (a) the benefits of the study outweigh the risks, (b) participants are not reasonably expected to be harmed, (c) the research question cannot be answered without the use of deception, and (d) participants are informed about the deception as soon as possible, usually through debriefing.

- Debriefing: This is the process of informing research participants as soon as possible of the purpose of the study, revealing any deceptions, and correcting any misconceptions they might have as a result of participating. Debriefing also involves minimizing harm that might have occurred. For example, an experiment examining the effects of sad moods on charitable behavior might involve inducing a sad mood in participants by having them think sad thoughts, watch a sad video, or listen to sad music. Debriefing would therefore be the time to return participants’ moods to normal by having them think happy thoughts, watch a happy video, or listen to happy music.

Conclusion

As an immensely social species, we affect and influence each other in many ways, particularly through our interactions and cultural expectations, both conscious and nonconscious. The study of social psychology examines much of the business of our everyday lives, including thoughts, feelings, and behaviors that we are unaware of or ashamed of. The desire to carefully and precisely study these topics, together with advances in technology, has led to the development of many creative techniques that allow researchers to explore the mechanics of how we relate to one another. Consider this your invitation to join the investigation.

Outside Resources

- Article: Do research ethics need updating for the digital age? Questions raised by the Facebook emotional contagion study.

- http://www.apa.org/monitor/2014/10/r...ch-ethics.aspx

- Article: Psychology is WEIRD. A commentary on non-representative samples in Psychology.

- http://www.slate.com/articles/health...n_college.html

- Web: Linguistic Inquiry and Word Count. Paste in text from a speech, article, or other archive to analyze its linguistic structure.

- www.liwc.net/tryonline.php

- Web: Project Implicit. Take a demonstration implicit association test.

- https://implicit.harvard.edu/implicit/

- Web: Research Randomizer. An interactive tool for random sampling and random assignment.

- https://www.randomizer.org/
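Random sampling and random assignment, the two operations named in the last resource above, are easy to confuse. The following is a minimal Python sketch of the difference, using only the standard library. It does not reproduce how the Research Randomizer tool itself works, and the function names `random_sample` and `random_assignment` are hypothetical helpers introduced for illustration.

```python
import random


def random_sample(population_ids, n, seed=None):
    """Random sampling: choose WHO takes part, drawn from a larger population."""
    rng = random.Random(seed)
    return rng.sample(list(population_ids), n)


def random_assignment(participant_ids, conditions, seed=None):
    """Random assignment: decide WHICH condition each participant receives."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    # Deal the shuffled participants out round-robin so that group sizes
    # differ by at most one.
    groups = {condition: [] for condition in conditions}
    for index, pid in enumerate(shuffled):
        groups[conditions[index % len(conditions)]].append(pid)
    return groups


if __name__ == "__main__":
    # Hypothetical sampling frame of 1,000 people identified by number.
    participants = random_sample(range(1000), 40, seed=1)
    groups = random_assignment(participants, ["control", "treatment"], seed=2)
    for condition, members in groups.items():
        print(condition, len(members))
```

Sampling affects how well results generalize to the population, whereas assignment affects whether differences between conditions can be attributed to the manipulation rather than to participant variables.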

Discussion Questions

- What are some pros and cons of experimental research, field research, and archival research?

- How would you feel if you learned that you had been a participant in a naturalistic observation study (without explicitly providing your consent)? How would you feel if you learned during a debriefing procedure that you have a stronger association between the concept of violence and members of visible minorities? Can you think of other examples of when following principles of ethical research creates challenging situations?

- Can you think of an attitude (other than those related to prejudice) that would be difficult or impossible to measure by asking people directly?

- What do you think is the difference between a manipulation check and a dependent variable?

Vocabulary

- Anecdotal evidence

- An argument that is based on personal experience and not considered reliable or representative.

- Archival research

- A type of research in which the researcher analyses records or archives instead of collecting data from live human participants.

- Basking in reflected glory

- The tendency for people to associate themselves with successful people or groups.

- Big data

- The analysis of large data sets.

- Complex experimental designs

- An experiment with two or more independent variables.

- Confederate

- An actor working with the researcher. Most often, this individual is used to deceive unsuspecting research participants. Also known as a “stooge.”

- Correlational research

- A type of descriptive research that involves measuring the association between two variables, or how they go together.

- Cover story

- A fake description of the purpose and/or procedure of a study, used when deception is necessary in order to answer a research question.

- Demand characteristics

- Subtle cues that make participants aware of what the experimenter expects to find or how participants are expected to behave.

- Dependent variable

- The variable the researcher measures but does not manipulate in an experiment.

- Ecological validity

- The degree to which a study finding has been obtained under conditions that are typical for what happens in everyday life.

- Electronically activated recorder (EAR)

- A methodology where participants wear a small, portable audio recorder that intermittently records snippets of ambient sounds around them.

- Experience sampling methods

- Systematic ways of having participants provide samples of their ongoing behavior. Participants' reports are dependent (contingent) upon either a signal, pre-established intervals, or the occurrence of some event.

- Field experiment

- An experiment that occurs outside of the lab and in a real world situation.

- Hypothesis

- A logical idea that can be tested.

- Implicit association test (IAT)

- A computer-based categorization task that measures the strength of association between specific concepts over several trials.

- Independent variable

- The variable the researcher manipulates and controls in an experiment.

- Laboratory environments

- A setting in which the researcher can carefully control situations and manipulate variables.

- Manipulation check

- A measure used to determine whether or not the manipulation of the independent variable has had its intended effect on the participants.

- Naturalistic observation

- Unobtrusively watching people as they go about the business of living their lives.

- Operationalize

- How researchers specifically measure a concept.

- Participant variable

- The individual characteristics of research subjects - age, personality, health, intelligence, etc.

- Priming

- The process by which exposing people to one stimulus makes certain thoughts, feelings or behaviors more salient.

- Random assignment

- Assigning participants to receive different conditions of an experiment by chance.

- Samples of convenience

- Participants that have been recruited in a manner that prioritizes convenience over representativeness.

- Scientific method

- A method of investigation that includes systematic observation, measurement, and experiment, and the formulation, testing, and modification of hypotheses.

- Social facilitation

- When performance on simple or well-rehearsed tasks is enhanced when we are in the presence of others.

- Social neuroscience

- An interdisciplinary field concerned with identifying the neural processes underlying social behavior and cognition.

- Social or behavioral priming

- A field of research that investigates how the activation of one social concept in memory can elicit changes in behavior, physiology, or self-reports of a related social concept without conscious awareness.

- Survey research

- A method of research that involves administering a questionnaire to respondents in person, by telephone, through the mail, or over the internet.

- Terror management theory (TMT)

- A theory that proposes that humans manage the anxiety that stems from the inevitability of death by embracing frameworks of meaning such as cultural values and beliefs.

- WEIRD cultures

- Cultures that are western, educated, industrialized, rich, and democratic.

References

- Archer, J. (2006). Cross-cultural differences in physical aggression between partners: A social-role analysis. Personality and Social Psychology Review, 10(2), 133-153. doi: 10.1207/s15327957pspr1002_3

- Bargh, J. A., Chen, M., & Burrows, L. (1996). Automaticity of social behavior: Direct effects of trait construct and stereotype activation on action. Journal of Personality and Social Psychology, 71(2), 230-244. http://dx.doi.org/10.1037/0022-3514.71.2.230

- Beck, E. M., & Tolnay, S. E. (1990). The killing fields of the Deep South: The market for cotton and the lynching of Blacks, 1882-1930. American Sociological Review, 55(4), 526-539.

- Bushman, B. J., & Baumeister, R. F. (1998). Threatened egotism, narcissism, self-esteem, and direct and displaced aggression: Does self-love or self-hate lead to violence? Journal of Personality and Social Psychology, 75(1), 219-229. http://dx.doi.org/10.1037/0022-3514.75.1.219

- Cialdini, R. B., Borden, R. J., Thorne, A., Walker, M. R., Freeman, S., & Sloan, L. R. (1976). Basking in reflected glory: Three (football) field studies. Journal of Personality and Social Psychology, 34(3), 366-375. http://dx.doi.org/10.1037/0022-3514.34.3.366

- Cohen, D., Nisbett, R. E., Bowdle, B. F., & Schwarz, N. (1996). Insult, aggression, and the southern culture of honor: An "experimental ethnography." Journal of Personality and Social Psychology, 70(5), 945-960. http://dx.doi.org/10.1037/0022-3514.70.5.945

- Diener, E., & Oishi, S. (2000). Money and happiness: Income and subjective well-being across nations. In E. Diener & E. M. Suh (Eds.), Culture and subjective well-being (pp. 185-218). Cambridge, MA: MIT Press.

- Dijksterhuis, A., & van Knippenberg, A. (1998). The relation between perception and behavior, or how to win a game of Trivial Pursuit. Journal of Personality and Social Psychology, 74(4), 865-877. http://dx.doi.org/10.1037/0022-3514.74.4.865

- Eichstaedt, J. C., Schwartz, H. A., Kern, M. L., Park, G., Labarthe, D. R., Merchant, R. M., & Sap, M. (2015). Psychological language on Twitter predicts county-level heart disease mortality. Psychological Science, 26(2), 159–169. doi: 10.1177/0956797614557867

- Ferguson, M. J., & Mann, T. C. (2014). Effects of evaluation: An example of robust “social” priming. Social Cognition, 32, 33-46. doi: 10.1521/soco.2014.32.supp.33

- Gosling, S. D., Vazire, S., Srivastava, S., & John, O. P. (2004). Should we trust web-based studies? A comparative analysis of six preconceptions about internet questionnaires. American Psychologist, 59(2), 93-104. http://dx.doi.org/10.1037/0003-066X.59.2.93

- Greenwald, A. G., McGhee, D. E., & Schwartz, J. L. K. (1998). Measuring individual differences in implicit cognition: The implicit association test. Journal of Personality and Social Psychology, 74(6), 1464-1480. http://dx.doi.org/10.1037/0022-3514.74.6.1464

- Greitemeyer, T., Kastenmüller, A., & Fischer, P. (2013). Romantic motives and risk-taking: An evolutionary approach. Journal of Risk Research, 16, 19-38. doi: 10.1080/13669877.2012.713388

- Haney, C., Banks, C., & Zimbardo, P. (1973). Interpersonal dynamics in a simulated prison. International Journal of Criminology and Penology, 1, 69-97.

- Harris, C. R., Coburn, N., Rohrer, D., & Pashler, H. (2013). Two failures to replicate high-performance-goal priming effects. PLoS ONE, 8(8): e72467. doi:10.1371/journal.pone.0072467

- Henrich, J., Heine, S., & Norenzayan, A. (2010). The weirdest people in the world? Behavioral and Brain Sciences, 33(2-3), 61-83. http://dx.doi.org/10.1017/S0140525X0999152X

- Hovland, C. I., & Sears, R. R. (1940). Minor studies of aggression: VI. Correlation of lynchings with economic indices. The Journal of Psychology, 9(2), 301-310. doi: 10.1080/00223980.1940.9917696

- Isen, A. M., & Levin, P. F. (1972). Effect of feeling good on helping: Cookies and kindness. Journal of Personality and Social Psychology, 21(3), 384-388. http://dx.doi.org/10.1037/h0032317

- Kramer, A. D. I., Guillory, J. E., & Hancock, J. T. (2014). Experimental evidence of massive-scale emotional contagion through social networks. Proceedings of the National Academy of Sciences, 111(24), 8788-8790. doi: 10.1073/pnas.1320040111

- Larson, R. W., Richards, M. H., & Perry-Jenkins, M. (1994). Divergent worlds: The daily emotional experience of mothers and fathers in the domestic and public spheres. Journal of Personality and Social Psychology, 67(6), 1034-1046.

- Milgram, S. (1963). Behavioral study of obedience. Journal of Abnormal and Social Psychology, 67(4), 371–378. doi: 10.1037/h0040525

- Mitra, T., Counts, S., & Pennebaker, J. W. (2016). Understanding anti-vaccination attitudes in social media. Presentation at the Tenth International AAAI Conference on Web and Social Media. Retrieved from comp.social.gatech.edu/papers...cine.mitra.pdf

- Nosek, B. A., Smyth, F. L., Sriram, N., Lindner, N. M., Devos, T., Ayala, A., ... & Kesebir, S. (2009). National differences in gender–science stereotypes predict national sex differences in science and math achievement. Proceedings of the National Academy of Sciences, 106(26), 10593-10597. doi: 10.1073/pnas.0809921106

- Paolacci, G., Chandler, J., & Ipeirotis, P. G. (2010). Running experiments on Amazon Mechanical Turk. Judgment and Decision Making, 5(5), 411-419.

- Peterson, R. A., & Merunka, D. R. (2014). Convenience samples of college students and research reproducibility. Journal of Business Research, 67(5), 1035-1041. doi: 10.1016/j.jbusres.2013.08.010

- Pyszczynski, T., Solomon, S., & Greenberg, J. (2003). In the wake of 9/11: The psychology of terror. Washington, DC: American Psychological Association.

- Pyszczynski, T., Wicklund, R. A., Floresku, S., Koch, H., Gauch, G., Solomon, S., & Greenberg, J. (1996). Whistling in the dark: Exaggerated consensus estimates in response to incidental reminders of mortality. Psychological Science, 7(6), 332-336. doi: 10.1111/j.1467-9280.1996.tb00384.x

- Radesky, J. S., Kistin, C. J., Zuckerman, B., Nitzberg, K., Gross, J., Kaplan-Sanoff, M., Augustyn, M., & Silverstein, M. (2014). Patterns of mobile device use by caregivers and children during meals in fast food restaurants. Pediatrics, 133(4), e843-849. doi: 10.1542/peds.2013-3703

- Reifman, A. S., Larrick, R. P., & Fein, S. (1991). Temper and temperature on the diamond: The heat-aggression relationship in major league baseball. Personality and Social Psychology Bulletin, 17(5), 580-585. http://dx.doi.org/10.1177/0146167291175013

- Rosenblatt, A., Greenberg, J., Solomon, S., Pyszczynski, T., & Lyon, D. (1989). Evidence for terror management theory I: The effects of mortality salience on reactions to those who violate or uphold cultural values. Journal of Personality and Social Psychology, 57(4), 681-690. http://dx.doi.org/10.1037/0022-3514.57.4.681

- Sears, D. O. (1986). College sophomores in the laboratory: Influences of a narrow data base on social psychology’s view of human nature. Journal of Personality and Social Psychology, 51(3), 515-530. http://dx.doi.org/10.1037/0022-3514.51.3.515

- Shanks, D. R., Newell, B. R., Lee, E. H., Balakrishnan, D., Ekelund, L., Cenac, Z., … Moore, C. (2013). Priming intelligent behavior: An elusive phenomenon. PLoS ONE, 8(4): e56515. doi:10.1371/journal.pone.0056515

- Stavrova, O., & Ehlebracht, D. (2016). Cynical beliefs about human nature and income: Longitudinal and cross-cultural analyses. Journal of Personality and Social Psychology, 110(1), 116-132. http://dx.doi.org/10.1037/pspp0000050

- Stroebe, W. (2012). The truth about Triplett (1898), but nobody seems to care. Perspectives on Psychological Science, 7(1), 54-57. doi: 10.1177/1745691611427306

- Triplett, N. (1898). The dynamogenic factors in pacemaking and competition. American Journal of Psychology, 9, 507-533.

- Visser, P. S., Krosnick, J. A., & Lavrakas, P. (2000). Survey research. In H. T. Reis & C. M. Judd (Eds.), Handbook of research methods in social psychology (pp. 223-252). New York: Cambridge University Press.

- de Zavala, A. G., Cislak, A., & Wesolowska, E. (2010). Political conservatism, need for cognitive closure, and intergroup hostility. Political Psychology, 31(4), 521-541. doi: 10.1111/j.1467-9221.2010.00767.x