9.4: Quality Assurance by Design

Involving All Stakeholders in the Process of Design

Pedagogical heuristics: When designing systems for e-learning, we must first determine the goal, the intention, and the specifications by collecting the relevant information. As a result, learners will be free to justify why they use the applications, and their reasons will need to match the organization’s intentions. On an operational level, we can use several evaluation frameworks, known as pedagogical heuristics. Heuristics provide a map to work with, without requiring extensive user evaluations. Norman (1998), Shneiderman (2002, 2005, 2006), and Nielsen (n.d., cited on his website) tried to help designers and evaluators design systems for users by providing general guidelines. Norman proposed “seven principles for transforming difficult tasks into simple ones”:

- Use both knowledge in the world and knowledge in the head.

- Simplify the structure of tasks.

- Make things visible: bridge the gulfs of execution and evaluation.

- Get mappings right.

- Exploit the power of constraints, both natural and artificial.

- Design for error.

- When all else fails, standardize.

A second set of heuristics comes from Shneiderman’s Eight Golden Rules:

- Strive for consistency.

- Enable frequent users to use shortcuts.

- Offer informative feedback.

- Design dialogues to yield closure.

- Offer simple error handling.

- Permit easy reversal of actions.

- Support internal locus of control.

- Reduce short-term memory load.

Both sets of rules can be used as evaluation tools and as usability heuristics.

Nielsen (n.d.) proposed other usability heuristics for user interface design. His are more widely used:

- visibility of system status

- match between system and the real world

- user control and freedom

- consistency and standards

- error prevention

- recognition rather than recall

- flexibility and efficiency of use

- aesthetic and minimalist design

- help users recognize, diagnose, and recover from errors

- help and documentation.

His heuristics mostly refer to information provision interfaces and do not explicitly support learning in communities using social software platforms. Since socio-technical environments have migrated to the Internet, new heuristics are needed to support the social nature of these systems. For example, Suleiman (1998) suggested a check of user control, user communication, and technological boundary for computer-mediated communication, whereas Preece (2000) proposed that usability for online communities should support navigation, access, information design, and dialogue support.

Pedagogical usability (PU): When e-learning started to be widely used in the mid-1990s, new heuristics with a social and pedagogical orientation were needed. With a social perspective in mind, Squires and Preece (1999) provided the first set of heuristics for learning with software. Similarly, Hale and French (1999) recommended a set of e-learning design principles for reducing conflict, frustration, and repetition of concepts. They referred to the e-learning technique, positive reinforcement, student participation, organization of knowledge, learning with understanding, cognitive feedback, individual differences, and motivation. To date, learning design has concentrated on information provision and activities management aimed at the individual rather than at e-learning communities. Thus there is a lack of common ground between collaborative learning theories and instructional design. Lambropoulos (2006) therefore proposes seven principles for designing, developing, evaluating, and maintaining e-learning communities: intention, information, interactivity, real-time evaluation, visibility, control, and support. In this way, she stresses the need to bring e-learning and human-computer interaction (HCI) together.

From an HCI viewpoint, new heuristics are needed, and there is room for research. Silius and colleagues (2003) proposed that pedagogical usability (PU) should question whether the tools, contents, interfaces, and tasks provided within the e-learning environments supported e-learners. They constructed evaluation tools using questionnaires. They involved all stakeholders in the process and provided easy ways for e-learning evaluation. Muir and colleagues (2003) also worked on the PU pyramid for e-learning, concentrating on the educational effectiveness and practical efficiency of a course-related website. They stressed that the involvement of all stakeholders in design and evaluation for decision-making was necessary.

One of the great challenges of the 21st century is quality assurance. What quality factors can be measured for effective, efficient, and enjoyable e-learning? It is suggested that this kind of evaluation be part of pedagogical usability. There have been studies investigating issues of e-learning quality: management and design (Pond, 2002); quality that improves design (Johnson et al., 2000); and quality measurement and evaluation, the last recommended by McGorry (2003) in seven constructs to measure and evaluate e-learning programs. These are:

- flexibility

- responsiveness

- student support

- student learning

- student participation in learning

- ease of technology use and technology support

- student satisfaction.

McGorry’s evaluation is learner-centred: both the system and e-tutors need to support learners, with the ultimate goal of learner satisfaction. The absence of empirical research in the field of the everyday e-learner indicates that methods and tools for interdisciplinary measurements have yet to be considered for the individual. Because existing e-learning evaluation is generally based on past events, there remain inherent problems in understanding e-learning through the use of evaluation for feedback and decision-making. These problems can be addressed with the integration of instructional design phases under real-time evaluation.
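As a rough illustration of how the seven constructs listed above might be turned into measurements, the following sketch averages questionnaire ratings per construct and reports the weakest one. The rating scale (1 to 5), the function names, and the sample responses are assumptions made for this example only; they are not part of McGorry's (2003) instrument.

```python
from statistics import mean

# The seven constructs as listed in the text.
CONSTRUCTS = [
    "flexibility",
    "responsiveness",
    "student support",
    "student learning",
    "student participation in learning",
    "ease of technology use and technology support",
    "student satisfaction",
]


def construct_means(responses):
    """Average each construct's rating across all respondents."""
    return {c: mean(r[c] for r in responses) for c in CONSTRUCTS}


def weakest_construct(responses):
    """Return the construct with the lowest average rating."""
    means = construct_means(responses)
    return min(means, key=means.get)


# Hypothetical ratings from two learners on an assumed 1-5 scale.
responses = [
    {**dict.fromkeys(CONSTRUCTS, 4), "responsiveness": 2},
    {**dict.fromkeys(CONSTRUCTS, 4), "responsiveness": 3},
]

print(weakest_construct(responses))
```

A summary like this only points to where learners report the least support; interpreting why would still require the qualitative methods discussed in this chapter.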

Integration of Instructional Phases: E-learning Engineering

The evolution of the socio-cultural shift in education created a turn in the design of instructional systems and learning management systems. Fenrich (2005) identified practical guidelines for an instructional design process targeted at multimedia solutions. He provided an overall approach involving all the stakeholders in the process of design by covering all their needs. He also employed a project-based approach by dividing the analysis phase into sub-stages, which were: the description of the initial idea, analysis, and planning. By the systematic iteration of activities and evaluation of the first stages in design, Fenrich ensured quality.

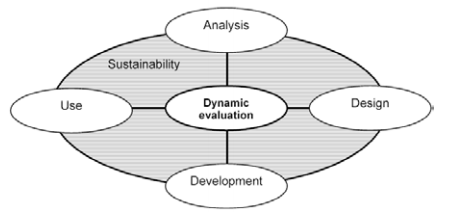

But however well-designed e-learning environments are, they cannot facilitate independent learning without interaction with others (Oliver, 2005). Current learning management systems do not facilitate social and collaborative interactions; they only provide the space for it. Collaborative e-learning can be better supported if there is more information on these interactions. These design problems are related to the collaborative nature of the task, the methods used to inform practice, design competencies, and the actual design process itself. Bannon (1994) suggested that, when designing computer-supported cooperative work, design and use of the system as well as evaluation need to be integrated. It is true that analysis, design, evaluation, and use of systems in e-learning are sustained by the interaction of pedagogy and technology. If this instructional design process is underpinned by real-time evaluation, all design phases can be informed fully and accurately. There is therefore still room for feedback between instructional design phases. When instructional design integrates all the phases and supports them with real-time evaluation, the result is called instructional engineering:

Instructional engineering (IE) is the process for planning, analysis, design, and delivery of e-learning systems. Paquette (2002, 2003) adopted the interdisciplinary pillars of human-computer interaction. This approach considers the benefits of different stakeholders (actors) by integrating the instructional design concepts and processes, as well as principles, from software engineering and cognitive engineering. Looking at the propositions of Fenrich and Paquette, we suggest there could be two ways to ensure all stakeholders’ benefits. These are the identification of key variances and dynamic evaluation.

Identification of key variances: All organizations need to function well without problems. The weakest links should be identified, eliminated, or at least controlled. Working on socio-technical design, Mumford (1983) believed that design needs to identify problems that are endemic to the objectives and tasks of organizations. Intentional variances stem from the organizational purposes and targets. Operational variances predate design, and are the areas the organization has to target. They stem from the operational inadequacies of the old system and from the technical and procedural problems that have been built into it inadvertently. “Key variances refer to the same variance in both intentional and operational levels”.
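Read literally, the definition above treats a key variance as one that shows up at both the intentional and the operational level, which can be pictured as a set intersection. The sketch below illustrates that reading only; the function name and the example variances are hypothetical and are not drawn from Mumford (1983).

```python
def key_variances(intentional, operational):
    """Return the variances present at both levels, sorted for stable output."""
    return sorted(set(intentional) & set(operational))


# Hypothetical variances recorded for an e-learning course.
intentional = {"low learner participation", "unclear course goals"}
operational = {"low learner participation", "slow forum software"}

print(key_variances(intentional, operational))
```

In practice, deciding that two observations are "the same variance" is a judgment made by the stakeholders; the code assumes that matching has already been done and the variances share a label.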

Design and engineering are connected to both intentional (pedagogical) and operational (engineering) approaches. Sometimes there are problems, which are called variances in socio-technical design. From an educational perspective, Schwier and his colleagues (2006) emphasized the need for intentional (principles or values) and operational (practical implications) approaches, and provided an analytical framework of the gaps and discrepancies that instructional designers need to deal with. The identification of a key variance helps the organization to provide added-value outcomes. This is achieved by the use of dynamic evaluation.

Dynamic evaluation: According to Lambropoulos (2006), e-learning evaluation aims to control and provide feedback for decision-making and improvement. It has four characteristics: real-time measurements, formative evaluation, summative evaluation, and interdisciplinary research. Dynamic evaluation links and informs design. It also provides immediate evaluation to user interface designers. In addition, it identifies signposts for benchmarking, which makes comparisons between past and present quality indicators feasible (Oliver, 2005). Such dynamic evaluations will enable the evolution of design methods and conceptual developments. The use of several combined methodologies is necessary in online environments. Andrews and colleagues (2003), De Souza and Preece (2004), and Laghos and Zaphiris (2005) are advocates of multilevel research in online and e-learning environments. Widrick, cited by Parker, claimed that:

“[it] … has long been understood in organizations that when you want to improve something, you first must measure it” (2002, p. 130). Parker (2003, p. 388) does not consider engineering for unified learning environments feasible: “The engineering (or re-engineering) of systems designed to guarantee that manufacturing processes would meet technical specification might seem to imply a uniformity that may not be possible, or even desirable, in the dynamic and heterogeneous environment of higher education.”

According to Parker, a unified systems design is not possible, or even desirable. The interdisciplinary nature of e-learning, the large number of stakeholders involved, and the uniqueness of the context make e-learning engineering extremely difficult. Nichol and Watson (2003, p. 2) have made a similar observation: “Rarely in the history of education has so much been spent by so many for so long, with so little to show for the blood, sweat and tears expended”. It is contended that e-learning engineering, including dynamic evaluation, may well minimize the cost. User interface designers should recognise the need to limit this process to a period of days or even hours, and still obtain the relevant data needed to influence a re-design (Shneiderman & Plaisant, 2005).

At present, the design process is still vulnerable to the Hawthorne effect (Faulkner, 2000). Laboratory research ignores the distractions of e-learner behaviour in the real world. On the other hand, dynamic evaluation enables the evolution of design methods and conceptual developments (Silius & Tervakari, 2003; Rogers, 2004). Ethnography captures events as they occur in real life, and then uses them for design. It can be a time-based methodology aiming for a description of a process in order to understand the situation and its context, and to provide descriptions of individuals and their tasks (Anderson, 1996). This type of research could be said to be part of dynamic evaluation in e-learning engineering:

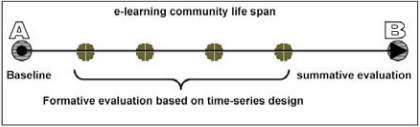

The line from A to B in Figure 9.3 represents the lifespan of an e-learning community. A short-term or long-term e-learning community has a beginning (A), which is the baseline, and an end (B). Usually, the comparison of data collected at A and B provides the summative evaluation. The success or failure of the e-learning community is apparent from whether the organization’s initial targets are met. Often there are differences between what the different stakeholders want or seem to need. (See Cohen’s PhD thesis, Appendix I, 2000.) Formative evaluation can shed light on the individual stages of e-learning and help in understanding key variances as they occur. This provides feedback and control for all stakeholders.
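The contrast between the two kinds of evaluation can be sketched with a single quality indicator sampled along the line from A to B. In the example below, the summative view compares only the first and last measurements, while the formative view inspects every checkpoint and flags sharp drops as they occur. The indicator (weekly forum posts), the threshold, and the function names are assumptions chosen for illustration.

```python
def summative_change(scores):
    """Difference between the end point (B) and the baseline (A)."""
    return scores[-1] - scores[0]


def formative_drops(scores, threshold):
    """Indices of checkpoints where the indicator fell by more than
    `threshold` compared with the previous checkpoint."""
    return [
        i
        for i in range(1, len(scores))
        if scores[i - 1] - scores[i] > threshold
    ]


# Hypothetical weekly forum posts in an e-learning community, from A to B.
weekly_posts = [40, 42, 30, 33, 45]

print(summative_change(weekly_posts))              # 5
print(formative_drops(weekly_posts, threshold=5))  # [2]
```

Here the summative comparison suggests a modest overall gain, while the formative pass shows a marked dip at the third checkpoint that the A-to-B comparison alone would hide.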

To date, most evaluation and research has been designed to support summative evaluation. The existing tools and evaluation methods are not designed to aid dynamic evaluation. If new tools can be designed for e-learning engineering, then quality assurance, assessment, and improvement will help control arising problems and enhance best practices. Current efforts to meet these targets for quality are connected to the dissolution of traditional educational hierarchies and other systems (Pond, 2002).